Does your solution work on Filebeat 8.4.3?

Am I correctly forcing the destination index via `@metadata._index`?

```
filebeat[15854]: 2022-12-29T10:30:30.992+0100 DEBUG [processors] map[file.line:210 file.name:processing/processors.go] Publish event: {
  "@timestamp": "2022-12-29T09:30:30.992Z",
  "@metadata": {
    "beat": "filebeat",
    "type": "_doc",
    "version": "8.4.3",
    "_id": "0ed422b9bbf338abf372400bb348e2ac669fe22a",
    "op_type": "index",
    "_index": "ok-app1-write"
  },
  "test": {
    "machine": {
      "description": "MF Operator sp.zoo NEW_NAME2",
      "name": "3"
    },
    "prefix": "122000"
  },
  "ecs": {
    "version": "8.0.0"
  },
  "log": {
    "file": {
      "path": "/opt/some_file.csv"
    }
  },
  "message": "122000\t3\tMF Operator sp.zoo NEW_NAME2",
  "app": "app1"
} {"ecs.version": "1.6.0"}
```

filebeat.yml:

```yaml
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /opt/*.csv
  fields:
    app: app1
  close_eof: true
  fields_under_root: true
  processors:
    - dissect:
        # The CSV columns are tab-separated (see "message" in the event above)
        tokenizer: "%{prefix}\t%{machine.name}\t%{machine.description}"
        field: "message"
        target_prefix: "test"
    - fingerprint:
        fields: ["test.machine.name", "test.prefix"]
        target_field: '@metadata._id'
        method: "sha1"
    - add_fields:
        target: '@metadata'
        fields:
          op_type: "index"
          _index: "ok-app1-write"
```

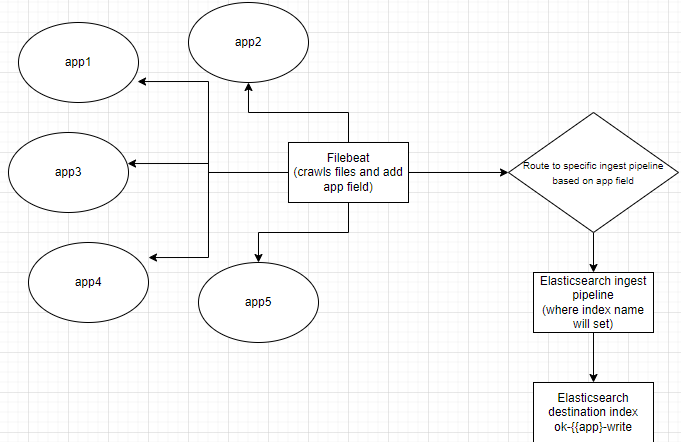

Now I'm receiving the following error (maybe because Filebeat is trying to send the data to the `filebeat-*` data stream):

```
2022-12-29T10:44:32.344+0100 WARN [elasticsearch] map[file.line:429 file.name:elasticsearch/client.go] Cannot index event publisher.Event{Content:beat.Event{Timestamp:time.Date(2022, time.December, 29, 10, 44, 31, 327927250, time.Local), Meta:{"_id":"0ed422b9bbf338abf372400bb348e2ac669fe22a","_index":"ok-app1-write","op_type":"index"}, Fields:{"app":"app1","ecs":{"version":"8.0.0"},"test":{"machine":{"description":"MF Operator sp.zoo NEW_NAME3","name":"3"},"prefix":"122000"},"log":{"file":{"path":"/opt/some_file.csv"}},"message":"122000\t3\tMF Operator sp.zoo NEW_NAME3"}, Private:file.State{Id:"native::942404-64768", PrevId:"", Finished:false, Fileinfo:(*os.fileStat)(0xc000a9d6c0), Source:"/opt/some_file.csv", Offset:213, Timestamp:time.Date(2022, time.December, 29, 10, 44, 31, 323541297, time.Local), TTL:-1, Type:"log", Meta:map[string]string(nil), FileStateOS:file.StateOS{Inode:0xe6144, Device:0xfd00}, IdentifierName:"native"}, TimeSeries:false}, Flags:0x1, Cache:publisher.EventCache{m:mapstr.M(nil)}} (status=400): {"type":"illegal_argument_exception","reason":"only write ops with an op_type of create are allowed in data streams"}, dropping event! {"ecs.version": "1.6.0"}
```

My environment has been upgraded to 8.4.3 (both Filebeat and Elasticsearch).

`ok-app1-write` is a write alias pointing to a real index:

```
PUT ok-app1-000001
{
  "aliases": {
    "ok-app1-write": {
      "is_write_index": true
    }
  }
}
```
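Since the error message talks about data streams, one way to check what the name `ok-app1-write` actually resolves to on the cluster is the resolve index API. This is only a diagnostic sketch, not a confirmed fix: it shows whether the name matches a concrete index, an alias, or a data stream.

```console
# Lists the indices, aliases and data streams that match this name
GET _resolve/index/ok-app1-write

# Shows which index the alias points to and whether it is the write index
GET _alias/ok-app1-write
```

The first response groups its matches under `indices`, `aliases` and `data_streams`. If `ok-app1-write` shows up as (or points to) a data stream rather than the `ok-app1-000001` index created above, Elasticsearch will reject `op_type: index`, which matches the 400 error in the log.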