Scott,

IMHO this is not a queuing problem; it is a throughput problem.

Adding a disk queue is not going to help; that is meant for network interruptions / store and forward. You might get a momentary pop, but that won't solve the main throughput issue. Here are the docs anyway.
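To see why a bigger queue only buys time, here is a back-of-the-envelope sketch. The function name and the numbers are hypothetical illustrations, not Filebeat settings. When data arrives faster than it drains, a buffer of any size fills at the rate of the difference, so it delays the problem but never removes it.

```python
def seconds_until_queue_full(capacity_bytes, in_rate_bps, out_rate_bps):
    """Return seconds until a buffer of capacity_bytes overflows.

    Rates are in bytes per second. If the output keeps up with the input,
    the queue never fills and this returns float("inf").
    """
    surplus = in_rate_bps - out_rate_bps
    if surplus <= 0:
        return float("inf")
    return capacity_bytes / surplus


MB = 1_000_000
# Hypothetical: a 1 GB disk queue, 9 MB/s arriving, 2.5 MB/s drained.
t = seconds_until_queue_full(1_000 * MB, 9 * MB, 2.5 * MB)
print(f"Queue full after ~{t:.0f} s")  # Queue full after ~154 s
```

A larger queue just moves that number out; only raising the drain rate changes the outcome.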

tl;dr I don't think you are going to get where you want to go with a single Filebeat over UDP. If you only have a single UDP endpoint, I would recommend Logstash.

I did some testing with UDP, TCP, and Filebeat, and I think I am running into a throughput ceiling that is below the 8-10 MB/s you will need (3K messages/sec at ~3 KB per message), so I am not sure you are going to get there.
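The 8-10 MB/s figure comes from multiplying event rate by message size. A small sketch of that arithmetic (the helper name is hypothetical, and it assumes decimal megabytes):

```python
def required_throughput(events_per_sec, bytes_per_event):
    """Return (MB/s, Mbit/s) needed to sustain the given event rate."""
    bytes_per_sec = events_per_sec * bytes_per_event
    return bytes_per_sec / 1_000_000, bytes_per_sec * 8 / 1_000_000


mb_s, mbit_s = required_throughput(3_000, 3_000)
print(f"{mb_s:.1f} MB/s, {mbit_s:.0f} Mbit/s")  # 9.0 MB/s, 72 Mbit/s
```

Note that in network terms this is roughly 72 Mbit/s, so it is worth being explicit about bytes vs. bits when comparing against link or NIC numbers.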

Yup, I think you are right: Filebeat is limited on the ingest side (and perhaps processing), and after some research I think I have a better understanding of why.

I have other Filebeats shipping ~5K EPS, but those messages are about 400-500 bytes, so that ends up back around that 2-2.5 MB/s.

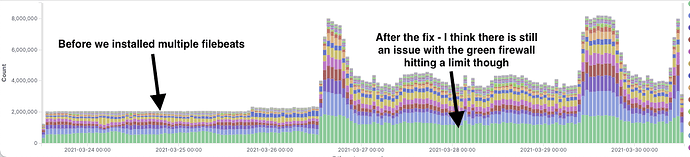

The way I scale higher is by running multiple Beats in parallel; how to do that with UDP I am unclear, as I am not a network guy.

Filebeat historically was meant to be a lightweight shipper distributed across many edge devices, not a "fat pipe" shipper. Now it is becoming a cloud / network endpoint (FW, etc.). I have been told there have been discussions around scaling the input (plus the entire processing chain) at some point, but today scaling is via multiple Beats.

I think you may need to horizontally scale Filebeat (more Beats, though again I am not sure how with UDP) or use Logstash, which is probably the more scalable pipe today.
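If you do go the multiple-Beats route, a rough sizing sketch follows. It assumes each Filebeat tops out around the ~2-2.5 MB/s seen above and that the traffic can be split evenly across instances, which with a single UDP endpoint is exactly the open question. The function name is hypothetical.

```python
import math


def beats_needed(required_mb_s, per_beat_mb_s):
    """Number of parallel Filebeat instances needed, assuming each one
    tops out at per_beat_mb_s and the load splits evenly across them."""
    return math.ceil(required_mb_s / per_beat_mb_s)


# Target ~9 MB/s (3K msg/s at ~3 KB each); assumed ~2.5 MB/s per Beat.
print(beats_needed(9.0, 2.5))  # 4
```

At the lower ~2 MB/s per-Beat figure the same math gives 5 instances, so plan for some headroom.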

A properly sized Logstash with a UDP input and the correct config (multiple workers, which in Logstash cover the full pipeline through to the output) would probably work much better.

Logstash has a long history of forwarding / processing syslog, FW logs etc.

Good hunting... let us know where you end up.

Max UDP throughput for a single Filebeat now with the Big FW.