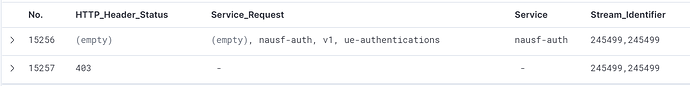

I need to copy the contents of the “Service” field from row No. 15256 into the “:path” field of row No. 15257 when the “Stream_Identifier” values of the two rows match.

Please suggest how to do this in the Logstash conf file, or any other way to achieve the same result.

Please refer to the attached image/snippet above for this query.

My Logstash conf file is given below for reference:

    input {
      file {
        path => "/home/student/test-csv/test-http2-1.csv"
        start_position => "beginning"
        sincedb_path => "/dev/null"
      }
    }

    filter {
      csv {
        separator => ","
        skip_header => "true"
        columns => ["Time","No.","Source","Destination","Protocol","Length","HTTP_Header_Status","Stream_Identifier","method",":path","String value","Key","Info"]
      }

      date {
        match => [ "Time", "yyyy-MM-dd HH:mm:ss.SSSSSS" ]
        target => "@timestamp"
        timezone => "UTC"
      }

      # Keep the full request path before ":path" is split below.
      mutate {
        copy => { ":path" => "Service_Request" }
      }

      # Split ":path" on "/" and use element 1 of the result as "Service".
      mutate {
        split => { ":path" => "/" }
        add_field => { "Service" => "%{[:path][1]}" }
        remove_field => ["message","host","path","@version"]
      }

      # Remember "Service" per Stream_Identifier and copy it onto later
      # events of the same stream that have no "Service" of their own.
      aggregate {
        task_id => "%{Stream_Identifier}"
        code => '
          p = event.get("Service")
          if p
            map["Service"] = p
          elsif map["Service"]
            event.set("Service", map["Service"])
          end
        '
      }

      mutate {
        remove_field => [":path"]
      }
    }

    output {
      # Plugin names are lowercase: "elasticsearch", not "Elasticsearch".
      elasticsearch {
        hosts => "http://localhost:9200"
        index => "demo-http-csv-split-2"
      }
      stdout { codec => rubydebug }
    }
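
To make the intended behaviour concrete, here is a minimal plain-Ruby sketch of what the `aggregate` code block above is meant to do. It is only a simulation under stated assumptions: events are ordinary hashes rather than Logstash events, `maps` stands in for the per-`task_id` map that the aggregate filter keeps, and the helper name `carry_service` and the sample values (stream ids and `hypothetical-service`) are made up for illustration, not taken from the real CSV.

```ruby
# Simulates the aggregate code block: remember "Service" per Stream_Identifier
# and copy it onto later rows of the same stream that have no "Service".
# `carry_service` is a hypothetical helper, not part of Logstash.
def carry_service(events)
  # One map per Stream_Identifier, like the aggregate filter's `map`.
  maps = Hash.new { |hash, key| hash[key] = {} }

  events.map do |event|
    map = maps[event["Stream_Identifier"]]
    service = event["Service"]

    if service
      # This row has its own Service: remember it for the stream.
      map["Service"] = service
    elsif map["Service"]
      # This row has none: copy the remembered value (returns a new hash).
      event = event.merge("Service" => map["Service"])
    end

    event
  end
end

# Made-up sample rows; only the row numbers come from the question.
rows = [
  { "No." => "15256", "Stream_Identifier" => "5", "Service" => "hypothetical-service" },
  { "No." => "15257", "Stream_Identifier" => "5" },
  { "No." => "15258", "Stream_Identifier" => "7" }
]

carry_service(rows).each { |row| puts row.inspect }
```

Two things may be worth checking against this sketch. The aggregate filter documentation asks for a single pipeline worker (`pipeline.workers: 1`, or `-w 1`) so that rows are processed in order. Also, as far as I understand Logstash's sprintf handling, when `:path` is empty on a row, `add_field` may leave the literal text `%{[:path][1]}` in "Service" instead of leaving the field unset, in which case the `if p` branch is always taken and nothing is copied.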