Hello

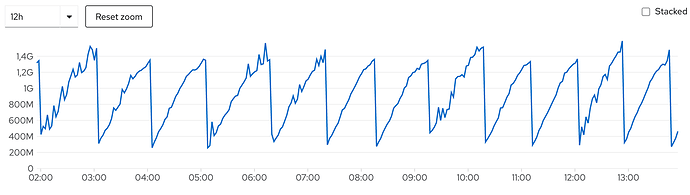

Heartbeat 8.1.0 leaks memory when Kubernetes autodiscovery is enabled, and OpenShift kills the container once it reaches its memory limit.

We see the issue on all OpenShift nodes.

Heartbeat Version: 8.1.0

OpenShift Version: 4.9+

Operating System: Official Docker Image

Steps to Reproduce:

heartbeat.yml

heartbeat.config.monitors:
  path: /usr/share/heartbeat/monitors.d/*.yml
  reload.enabled: true
  reload.period: 5s

logging.level: info
logging.metrics.enabled: false
monitoring.enabled: false

heartbeat.autodiscover:
  providers:
    - type: kubernetes
      resource: pod
      scope: cluster
      node: ${NODE_NAME}
      include_annotations: ["co.elastic.monitor.default.httpcheck"]
      templates:
        - condition:
            contains:
              kubernetes.annotations.co.elastic.monitor.default/httpcheck: "true"
          config:
            - type: http
              id: "${data.kubernetes.container.name}"
              name: Http Check
              hosts: ["${data.host}:${data.port}"]
              schedule: "@every 5s"
              timeout: 1s
    - type: kubernetes
      resource: pod
      scope: cluster
      node: ${NODE_NAME}
      include_annotations: ["co.elastic.monitor.default.tcpcheck"]
      templates:
        - condition:
            contains:
              kubernetes.annotations.co.elastic.monitor.default/tcpcheck: "true"
          config:
            - type: tcp
              id: "${data.kubernetes.container.id}"
              name: "[TCP Check] Pod"
              hosts: ["${data.host}:${data.port}"]
              schedule: "@every 5s"
              timeout: 1s
              tags: ["${data.kubernetes.namespace}","${data.kubernetes.pod.name}","${data.kubernetes.container.name}"]
    - type: kubernetes
      resource: service
      scope: cluster
      node: ${NODE_NAME}
      include_annotations: ["co.elastic.monitor.default.tcpcheck"]
      templates:
        - condition:
            contains:
              kubernetes.annotations.co.elastic.monitor.default/tcpcheck: "true"
          config:
            - type: tcp
              id: "${data.kubernetes.service.uid}"
              name: "[TCP Check] Service"
              hosts: ["${data.host}:${data.port}"]
              schedule: "@every 5s"
              timeout: 1s
              tags: ["${data.kubernetes.namespace}","${data.kubernetes.service.name}"]
    # Autodiscover pods
    - type: kubernetes
      resource: pod
      scope: cluster
      node: ${NODE_NAME}
      hints.enabled: true
    # Autodiscover services
    - type: kubernetes
      resource: service
      scope: cluster
      node: ${NODE_NAME}
      hints.enabled: true

processors:
  - add_cloud_metadata:
  - add_observer_metadata:
      cache.ttl: 5m
      geo:
        name: ${NODE_NAME}
        location: 50.110924, 8.682127
        continent_name: Europe
        country_iso_code: DEU
        region_name: Hesse

queue.disk:
  max_size: 1GB

output.elasticsearch:
  enabled: true
  hosts: ['https://elasticsearch-data-headless:9200']
  username: "elastic"
  password: "pass"
  ssl.enabled: true
  ssl.certificate_authorities: /etc/pki/elastic-heartbeat/ca.crt
  ssl.certificate: "/etc/pki/elastic-heartbeat/tls.crt"
  ssl.key: "/etc/pki/elastic-heartbeat/tls.key"
  ssl.verification_mode: full

heartbeat-deployment.yml

kind: Deployment
apiVersion: apps/v1
metadata:
  namespace: elastic-stack
  labels:
    app.kubernetes.io/component: heartbeat
    app.kubernetes.io/managed-by: ansible
    app.kubernetes.io/name: heartbeat
    app.kubernetes.io/part-of: elastic-stack
    app.kubernetes.io/version: 8.1.0
spec:
  replicas: 2
  selector:
    matchLabels:
      app.kubernetes.io/name: heartbeat
  template:
    metadata:
      labels:
        app.kubernetes.io/component: heartbeat
        app.kubernetes.io/managed-by: ansible
        app.kubernetes.io/name: heartbeat
        app.kubernetes.io/part-of: elastic-stack
        app.kubernetes.io/version: 8.1.0
    spec:
      restartPolicy: Always
      serviceAccountName: heartbeat
      hostNetwork: true
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: node-role.kubernetes.io/logging
                    operator: Exists
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchExpressions:
                  - key: app.kubernetes.io/name
                    operator: In
                    values:
                      - heartbeat
              namespaces:
                - elastic-stack
              topologyKey: kubernetes.io/hostname
          preferredDuringSchedulingIgnoredDuringExecution:
            - weight: 100
              podAffinityTerm:
                labelSelector:
                  matchExpressions:
                    - key: app.kubernetes.io/name
                      operator: In
                      values:
                        - heartbeat
                namespaces:
                  - elastic-stack
                topologyKey: topology.kubernetes.io/zone
      terminationGracePeriodSeconds: 30
      securityContext:
        runAsUser: 1000
        runAsGroup: 1000
        fsGroup: 1000
      containers:
        - resources:
            limits:
              cpu: '2'
              memory: 1536Mi
            requests:
              cpu: 100m
              memory: 128Mi
          terminationMessagePath: /dev/termination-log
          name: heartbeat
          env:
            - name: NODE_NAME
              valueFrom:
                fieldRef:
                  apiVersion: v1
                  fieldPath: spec.nodeName
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  apiVersion: v1
                  fieldPath: metadata.name
          securityContext:
            capabilities:
              add:
                - CAP_NET_RAW
          imagePullPolicy: IfNotPresent
          volumeMounts:
            - name: elastic-heartbeat
              mountPath: /etc/pki/elastic-heartbeat
            - name: heartbeat
              readOnly: true
              mountPath: /etc/heartbeat.yml
              subPath: heartbeat.yml
            - name: heartbeat-monitors
              readOnly: true
              mountPath: /usr/share/heartbeat/monitors.d
          terminationMessagePolicy: File
          image: 'docker.elastic.co/beats/heartbeat:8.1.0'
          args:
            - '-c'
            - /etc/heartbeat.yml
            - '-e'
      serviceAccount: heartbeat
      volumes:
        - name: heartbeat
          configMap:
            name: heartbeat
        - name: elastic-heartbeat
          secret:
            secretName: elastic-heartbeat
        - name: heartbeat-monitors
          configMap:
            name: heartbeat-monitors
      dnsPolicy: ClusterFirstWithHostNet
      tolerations:
        - key: node-role.kubernetes.io/logging
          operator: Exists
          effect: NoSchedule
      priorityClassName: openshift-user-critical