In my Logstash config I have this output to send different log files to different index names:

    output {
      elasticsearch {
        hosts => "https://elasticsearch:9200"
        index => "%{[fields][logtype]}-%{[@metadata][version]}-%{+YYYY.MM}"
        document_type => "%{[@metadata][type]}"
        cacert => "/usr/share/logstash/config/certs/ca/ca.crt"
        user => user
        #logstash_internal
        password => password
      }
    }

This works for all sorts of Beats.

xewriter uses the tcp input, and I've tried to get that to name its indexes properly as well, but so far without luck.

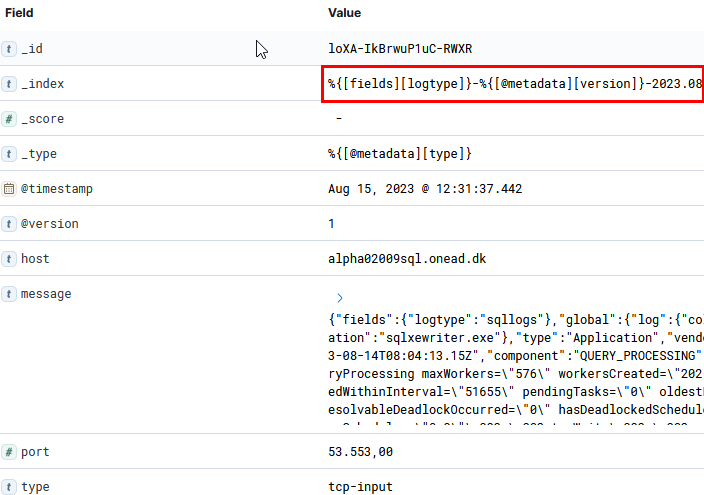

The index name is not filled in, instead the index looks like this:

How do I get this to name them correctly?

As this is the only tcp input I have, I even tried using mutate to set the field:

    filter {
      if "tcp-input" in [type] {
        mutate {
          add_field => {
            "fields.logtype" => "sqllogs"
          }
        }
      }
    }

That created a 'fields.logtype' field, but the index still didn't get a proper name.

Do I need to manipulate @metadata and version as well?
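
For reference, here is a rough sketch of what I suspect the filter would need to look like. It rests on two points: Logstash addresses nested fields with bracket syntax, so `"[fields][logtype]"` is not the same field as one literally named `fields.logtype`, and the Beats input fills in `[@metadata][version]` and `[@metadata][type]` while the tcp input does not. The values `1.0` and `doc` are placeholders I made up for illustration, not anything xewriter provides.

```conf
filter {
  if "tcp-input" in [type] {
    # Bracket syntax creates the nested field [fields][logtype] that the
    # index pattern in the output refers to.
    # The two @metadata values are hypothetical placeholders; the tcp input
    # does not set them, so the %{...} references in the output would
    # otherwise stay unresolved.
    mutate {
      add_field => {
        "[fields][logtype]" => "sqllogs"
        "[@metadata][version]" => "1.0"
        "[@metadata][type]" => "doc"
      }
    }
  }
}
```

With something like this in place, the index pattern from the output above should resolve to a name of the form `sqllogs-1.0-YYYY.MM`, assuming the conditional actually matches the events coming from the tcp input.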