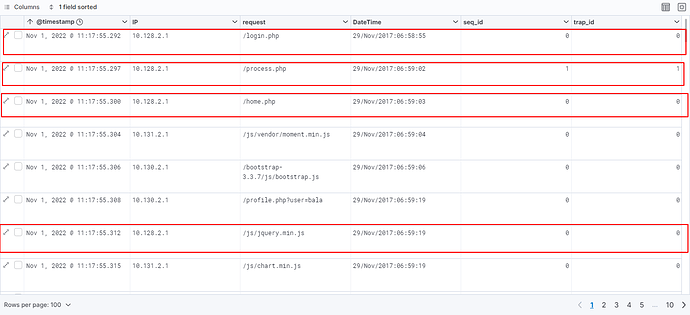

Problem: I want to increment trap_id on every process.php request, but in my case trap_id is always 0 and I don't know why. Please help.

My log file is in CSV format. I am trying to fetch the logs of a single user (i.e. a single IP) over time, grouping the requests made between the login request and the logout request. My log file looks like this:

    10.128.2.1,29/Nov/2017:06:58:55,GET /home.php HTTP/1.1,200
    10.128.2.1,29/Nov/2017:06:58:55,GET /login.php HTTP/1.1,200
    10.128.2.1,29/Nov/2017:06:59:02,POST /process.php HTTP/1.1,302
    10.128.2.1,29/Nov/2017:06:59:03,GET /about.php HTTP/1.1,200
    10.128.2.1,29/Nov/2017:07:05:53,GET /logout.php HTTP/1.1,302
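
To make the field layout explicit, here is a minimal plain-Ruby sketch (outside Logstash) that splits one of these lines into the same pieces my csv and grok filters are meant to extract. The helper name `parse_log_line` and the hash keys are only illustrative assumptions, not part of my config.

```ruby
require "csv"
require "time"

# Hypothetical helper for illustration only: turn one CSV log line into a
# hash with the IP, timestamp, method, request path, HTTP version and status.
def parse_log_line(line)
  ip, date_time, url, status = CSV.parse_line(line.strip)
  method, request, protocol = url.split(" ", 3)
  {
    ip: ip,
    # Day/Month/Year:Hour:Minute:Second, as in the sample lines
    # (parsed in the local time zone because the log has no offset).
    time: Time.strptime(date_time, "%d/%b/%Y:%H:%M:%S"),
    method: method,
    request: request,
    httpversion: protocol.sub("HTTP/", ""),
    status: status.to_i
  }
end

entry = parse_log_line("10.128.2.1,29/Nov/2017:06:59:02,POST /process.php HTTP/1.1,302")
puts entry[:request]
```

Only the `request` value matters for the seq_id and trap_id logic; the other fields are there for the Kibana visualization.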

I want to add two more fields in my log.conf file:

- seq_id - indicates whether the page was requested while logged in

- trap_id - a category ID that groups the requests of one login session

NOTE: Whenever process.php is requested, seq_id should be set to 1 and trap_id incremented (trap_id++); subsequent requests get the same trap_id that was given to that process.php request. Whenever logout.php is requested, seq_id is set back to 0.

I expect the following field values when I visualize the data in Kibana:

| request | seq_id | trap_id |
|---|---|---|
| /home.php | 0 | 0 |
| /login.php | 0 | 0 |
| /process.php | 1 | 1 |
| /about.php | 1 | 1 |
| /logout.php | 1 | 1 |
| /home.php | 0 | 0 |
| /home.php | 0 | 0 |
| /login.php | 0 | 0 |
| /process.php | 1 | 2 |
| /about.php | 1 | 2 |
| /logout.php | 1 | 2 |
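
To pin down the behaviour I am after, here is a minimal plain-Ruby sketch of the rules above, run outside Logstash. The class name `SessionTagger` and its method are hypothetical and only for illustration. The expected values tag the /logout.php request itself with seq_id 1, so the sketch resets the logged-in state only after that request has been tagged.

```ruby
# Hypothetical class for illustration; the names are not from my log.conf.
class SessionTagger
  def initialize
    @trap_id = 0        # number of /process.php requests seen so far
    @logged_in = false  # true between /process.php and /logout.php
  end

  # Returns [seq_id, trap_id] for one request path.
  def tag(request)
    if request == "/process.php"
      @trap_id += 1
      @logged_in = true
    end
    result = @logged_in ? [1, @trap_id] : [0, 0]
    # The logout request still belongs to the session; later requests do not.
    @logged_in = false if request == "/logout.php"
    result
  end
end

requests = %w[/home.php /login.php /process.php /about.php /logout.php /home.php
              /home.php /login.php /process.php /about.php /logout.php]
tagger = SessionTagger.new
requests.each do |request|
  seq_id, trap_id = tagger.tag(request)
  puts format("%-13s %d %d", request, seq_id, trap_id)
end
```

The important point in this sketch is that the counter and the logged-in flag live in one object that sees every request in order. If the same logic were moved into Logstash, the state would presumably have to be kept in a single ruby filter, because each ruby filter block has its own instance variables, and events would have to be processed in order (for example with a single pipeline worker).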

I have also included my log.conf file:

    input {
      file {
        path => "path_to_log/log.csv"
        start_position => "beginning"
      }
    }

    filter {
      csv {
        separator => ","
        skip_header => "true"
        columns => ["IP","DateTime","URL","Status"]
      }
      grok {
        match => {
          "URL" => ["%{WORD:method} %{DATA:request} HTTP/%{NUMBER:httpversion}"]
        }
      }
      grok {
        match => {
          "DateTime" => ["%{DATA:Date}\:%{TIME:Time}"]
        }
      }
      grok {
        match => {
          "Date" => ["%{MONTHDAY:day}/%{MONTH:month}/%{YEAR:year}"]
        }
      }
      grok {
        match => {
          "Time" => ["%{HOUR:hour}:%{MINUTE:minute}:%{SECOND:second}"]
        }
      }
      ruby {
        init => '@trap_id = 0'
        code => 'event.set("seq_id", 0)'
      }
      if [request] == "/process.php" {
        mutate { add_field => ["label", "1"] }
        ruby {
          code => '
            @trap_id += 1
            event.set("seq_id", 1)
            event.set("trap_id", @trap_id)
          '
        }
        mutate { convert => { "trap_id" => "integer" } }
      } else if [request] == "/logout.php" {
        mutate { add_field => ["label", "2"] }
        ruby {
          code => '
            event.set("trap_id", @trap_id)
            event.set("seq_id", 0)
          '
        }
        mutate { convert => { "trap_id" => "integer" } }
      } else {
        mutate { add_field => ["label", "0"] }
        ruby {
          code => '
            if event.get("seq_id").to_i == 1
              event.set("trap_id", @trap_id)
            else
              @trap_id = 0
              event.set("trap_id", @trap_id)
            end
          '
        }
        mutate { convert => { "trap_id" => "integer" } }
      }
    }

    output {
      stdout { codec => rubydebug }
      elasticsearch {
        hosts => ["http://localhost:9200"]
        index => "logdb2"
        user => "elastic"
        password => "my_elastic_pass"
      }
    }