I installed Rally on the same host as ES.

user@oleg-elastic1:~/.rally/logs$ esrally --version

esrally 2.0.1

Elasticsearch version:

**elasticsearch-7.8.1**

Running this command:

esrally --track=pmc --track-params="bulk_size:2000,bulk_indexing_clients:16" --target-hosts=localhost:9200 --pipeline=benchmark-only

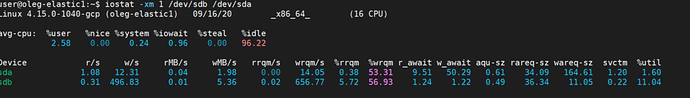

avg-cpu: %user %nice %system %iowait %steal %idle

6.10 0.00 2.12 7.47 0.00 84.31

Device r/s w/s rMB/s wMB/s rrqm/s wrqm/s %rrqm %wrqm r_await w_await aqu-sz rareq-sz wareq-sz svctm %util

sda 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

sdb 0.00 3775.00 0.00 42.52 0.00 4674.00 0.00 55.32 0.00 1.90 6.27 0.00 11.53 0.25 95.20
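As a rough sanity check on the `sdb` line (my own arithmetic, not something Rally reports), dividing the write throughput by the number of writes per second gives the average write size. The helper name `avg_write_size_kb` is made up for this sketch, and it assumes iostat's MB means 1024 KB:

```python
def avg_write_size_kb(w_per_s: float, w_mb_per_s: float) -> float:
    """Average size of one write request in KB, from iostat's w/s and wMB/s."""
    if w_per_s == 0:
        return 0.0
    # Assumption: 1 MB = 1024 KB, as iostat appears to use.
    return w_mb_per_s * 1024 / w_per_s


# Values copied from the sdb lines of the two runs in this post.
first_run = avg_write_size_kb(3775.00, 42.52)
second_run = avg_write_size_kb(3804.00, 41.15)

if __name__ == "__main__":
    print(f"first run:  {first_run:.2f} KB per write")
    print(f"second run: {second_run:.2f} KB per write")
```

Both results round to the `wareq-sz` column (11.53 and 11.08), so in both runs the disk is handling many small writes of about 11 KB each.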

load statistics are the same:

iowait - 5.10

MBs - 42

w/s - 3500

Traceback (most recent call last):

File "/home/user/.local/lib/python3.8/site-packages/esrally/async_connection.py", line 130, in perform_request

raw_data = yield from response.text()

File "/home/user/.local/lib/python3.8/site-packages/async_timeout/__init__.py", line 45, in __exit__

self._do_exit(exc_type)

File "/home/user/.local/lib/python3.8/site-packages/async_timeout/__init__.py", line 92, in _do_exit

raise asyncio.TimeoutError

asyncio.exceptions.TimeoutError

2020-09-16 11:16:25,190 -not-actor-/PID:26645 elasticsearch WARNING POST http://localhost:9200/_bulk [status:N/A request:60.060s]

Traceback (most recent call last):

File "/home/user/.local/lib/python3.8/site-packages/esrally/async_connection.py", line 129, in perform_request

response = yield from self.session.request(method, url, data=body, headers=headers, timeout=request_timeout)

File "/home/user/.local/lib/python3.8/site-packages/aiohttp/client.py", line 504, in _request

await resp.start(conn)

File "/home/user/.local/lib/python3.8/site-packages/aiohttp/client_reqrep.py", line 847, in start

message, payload = await self._protocol.read() # type: ignore # noqa

File "/home/user/.local/lib/python3.8/site-packages/aiohttp/streams.py", line 591, in read

await self._waiter

asyncio.exceptions.CancelledError

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/home/user/.local/lib/python3.8/site-packages/esrally/async_connection.py", line 130, in perform_request

raw_data = yield from response.text()

File "/home/user/.local/lib/python3.8/site-packages/async_timeout/__init__.py", line 45, in __exit__

self._do_exit(exc_type)

File "/home/user/.local/lib/python3.8/site-packages/async_timeout/__init__.py", line 92, in _do_exit

raise asyncio.TimeoutError

asyncio.exceptions.TimeoutError

2020-09-16 11:16:30,101 -not-actor-/PID:26644 elasticsearch WARNING POST http://localhost:9200/_bulk [status:N/A request:60.008s]

Traceback (most recent call last):

File "/home/user/.local/lib/python3.8/site-packages/esrally/async_connection.py", line 129, in perform_request

response = yield from self.session.request(method, url, data=body, headers=headers, timeout=request_timeout)

File "/home/user/.local/lib/python3.8/site-packages/aiohttp/client.py", line 504, in _request

await resp.start(conn)

File "/home/user/.local/lib/python3.8/site-packages/aiohttp/client_reqrep.py", line 847, in start

message, payload = await self._protocol.read() # type: ignore # noqa

File "/home/user/.local/lib/python3.8/site-packages/aiohttp/streams.py", line 591, in read

await self._waiter

asyncio.exceptions.CancelledError
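As far as I can tell, the warnings above (`status:N/A request:60.060s`) look like a client-side timeout: the `_bulk` request did not finish within about 60 seconds, so the pending read was cancelled and a `TimeoutError` was raised. A minimal sketch of that pattern, using only the standard library; `slow_bulk_request` is a hypothetical stand-in and not Rally code:

```python
import asyncio


async def slow_bulk_request(duration_s: float) -> str:
    # Hypothetical stand-in for a _bulk call that takes duration_s seconds.
    await asyncio.sleep(duration_s)
    return "ok"


async def call_with_timeout(duration_s: float, timeout_s: float) -> str:
    try:
        # wait_for cancels the inner coroutine once timeout_s has elapsed,
        # then raises asyncio.TimeoutError in the caller.
        return await asyncio.wait_for(slow_bulk_request(duration_s), timeout=timeout_s)
    except asyncio.TimeoutError:
        return "timed out"


def run(duration_s: float, timeout_s: float) -> str:
    return asyncio.run(call_with_timeout(duration_s, timeout_s))


if __name__ == "__main__":
    print(run(0.2, 0.05))   # request slower than the timeout
    print(run(0.01, 0.5))   # request faster than the timeout
```

If this reading is right, a larger client timeout should make the exceptions go away without making the requests themselves any faster.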

Running the command you sent yesterday:

esrally --pipeline=benchmark-only --track=eventdata --track-repository=eventdata --challenge=bulk-update --track-params=bulk_size:10000,bulk_indexing_clients:16 --target-hosts=localhost:9200 --client-options="timeout:240" --kill-running-processes

The logs show no exceptions.

Performance is the same:

avg-cpu: %user %nice %system %iowait %steal %idle

3.88 0.00 0.89 5.79 0.00 89.45

Device r/s w/s rMB/s wMB/s rrqm/s wrqm/s %rrqm %wrqm r_await w_await aqu-sz rareq-sz wareq-sz svctm %util

sda 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

sdb 0.00 3804.00 0.00 41.15 0.00 5383.00 0.00 58.59 0.00 0.85 2.33 0.00 11.08 0.21 78.00

The load statistics are the same as in the first run:

iowait - 5.10

MBs - 42

w/s - 3500

How can this be?

Is there something I need to check?

I checked the network: between the machines it is 4 Gb/s, which is way, way more bandwidth than I generate.
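To put a number on that, here is a back-of-the-envelope comparison. It assumes the network traffic is roughly equal to the ~42 MB/s the disk is writing, which is only an approximation, and it uses decimal units (1 Gb = 1000 Mb); `link_utilisation` is just an illustrative helper:

```python
def link_utilisation(throughput_mb_per_s: float, link_gbit_per_s: float) -> float:
    """Fraction of the link used by the given throughput (decimal units)."""
    megabits_per_s = throughput_mb_per_s * 8          # bytes -> bits
    return megabits_per_s / (link_gbit_per_s * 1000)  # Gb/s -> Mb/s


# Assumption: network traffic is about the same as the disk write rate.
share = link_utilisation(42, 4)

if __name__ == "__main__":
    print(f"{share:.1%} of the link")
```

Under these assumptions the traffic is well under a tenth of the available bandwidth.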