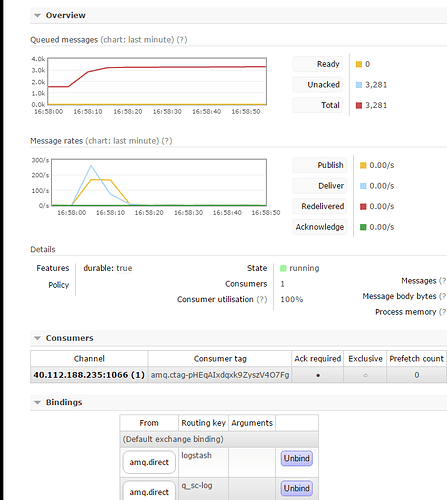

After some time, Logstash stops reading data from RabbitMQ.

It gives no error; it just halts. Messages stack up in the unacknowledged state in RabbitMQ.

Message rates can go up to 40-50 messages per second.

My Logstash config is:

```
input {
  rabbitmq {
    host => "xxxxxxx"
    user => "xxxxxxx"
    password => "xxxxxxx"
    port => 5672
    exchange => "amq.direct"
    vhost => "xxxxx"
    queue => "xxxxx"
    durable => true
    auto_delete => false
    type => "xxxxxx"
    threads => 2
  }
}

filter {
  if [type] == "xxxxxxx" {
    grok {
      match => [ "message", "%{TIMESTAMP_ISO8601:time_stamp} %{DATA:deviceId} %{DATA:deviceName} - \s*%{GREEDYDATA:message}" ]
      overwrite => [ "message" ]
    }
    date {
      match => [ "time_stamp", "ISO8601", "YYYY-MM-dd HH:mm:ssZ" ]
      remove_field => [ "time_stamp" ]
    }
    if ("<" in [message] and ">" in [message]) {
      drop {}
    }
  }
}

output {
  if [type] == "xxxxxxx" {
    elasticsearch {
      hosts => [ "xxxxxxxxx:9200" ]
      index => "xxxxxxx-%{+YYYY.MM.dd}"
    }
    stdout { }
  }
}
```

Any help will be greatly appreciated.

I even added 16 worker threads, but the problem is still the same.

If I restart Logstash, it works for a few hours (and I lose some messages), but then the problem comes back.

Remove some complexity to debug this.

- Drop `threads` to 1.

- Drop all filters.

- Replace the elasticsearch output with e.g. a file output that just dumps the messages.

You can do this in a separate Logstash instance. Does it help? If yes, reintroduce feature by feature until it breaks again.
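For the first round of debugging, a stripped-down pipeline could look roughly like the sketch below. It keeps the RabbitMQ settings from the original config (placeholders left as `xxxxx`), uses a single thread, has no filter section, and writes events to a local file instead of Elasticsearch. The output path `/tmp/rabbitmq-debug.log` is a hypothetical example, and `threads` is carried over from the original config; it may not be accepted by every version of the rabbitmq input plugin.

```
input {
  rabbitmq {
    host => "xxxxxxx"
    user => "xxxxxxx"
    password => "xxxxxxx"
    port => 5672
    exchange => "amq.direct"
    vhost => "xxxxx"
    queue => "xxxxx"
    durable => true
    auto_delete => false
    type => "xxxxxx"
    # step 1: a single consumer thread (option taken from the original config)
    threads => 1
  }
}

# step 2: no filter section at all

output {
  # step 3: dump every event to a local file instead of Elasticsearch
  # (hypothetical path, adjust to your environment)
  file {
    path => "/tmp/rabbitmq-debug.log"
  }
}
```

If this pipeline keeps consuming, add back the filters, then the original thread count, then the elasticsearch output, one at a time.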


Hey Magnus,

Thank you very much for responding so quickly, and thank you for the advice.

Yes, I followed the steps you mentioned and, voilà, I found that after removing Elasticsearch from the output, the halting problem was solved.

But now the question is how to resolve this, since I need to use Elasticsearch as my database.

Any advice?

Regards

Srikanth

Have you looked for anything interesting in the Logstash log? What if you crank up the log level with --verbose or possibly even --debug? What about the Elasticsearch log?

Hi Magnus, Happy Thanksgiving!

Sorry for replying after such a long time.

Yes, I tried to track down the problem but didn't find a clear answer. After some reading, I realized that it's something related to the Elasticsearch bulk API.

Originally I had deployed all the Logstash instances on one VM and the Elasticsearch instance on another VM. Now I have deployed Elasticsearch and the Logstash instances together on one VM, and it is working fine. So far it hasn't halted.

But I'm still looking for an optimal architecture.
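With Elasticsearch and Logstash on the same VM, the output section would simply point at the local node. A minimal sketch, assuming Elasticsearch listens on its default HTTP port 9200 on that host; the index name and type placeholders are kept from the original config:

```
output {
  if [type] == "xxxxxxx" {
    elasticsearch {
      # Elasticsearch now runs on the same VM as Logstash
      # (assumes the default HTTP port 9200)
      hosts => [ "localhost:9200" ]
      index => "xxxxxxx-%{+YYYY.MM.dd}"
    }
  }
}
```

The only change from the original output is the host; everything else about the pipeline stays the same.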

Thank you..