Hi, my config is below:

input {
  file {
    path => "/Users/yangyan/Desktop/log_file/test.log"
    start_position => "beginning"
    type => "json"
    codec => json
  }
}

filter {
  grok {
    match => { "message" => "%{SYSLOGBASE} %{GREEDYDATA:message}" }
    overwrite => [ "message" ]
  }
  json {
    source => "message"
  }
  mutate {
    add_field => { "[purchase][source]" => "pSource" }
  }
  prune {
    whitelist_names => [ "timestamp", "app_id", "date", "member_id", "locale", "pSource" ]
  }
}

output {
  elasticsearch { }
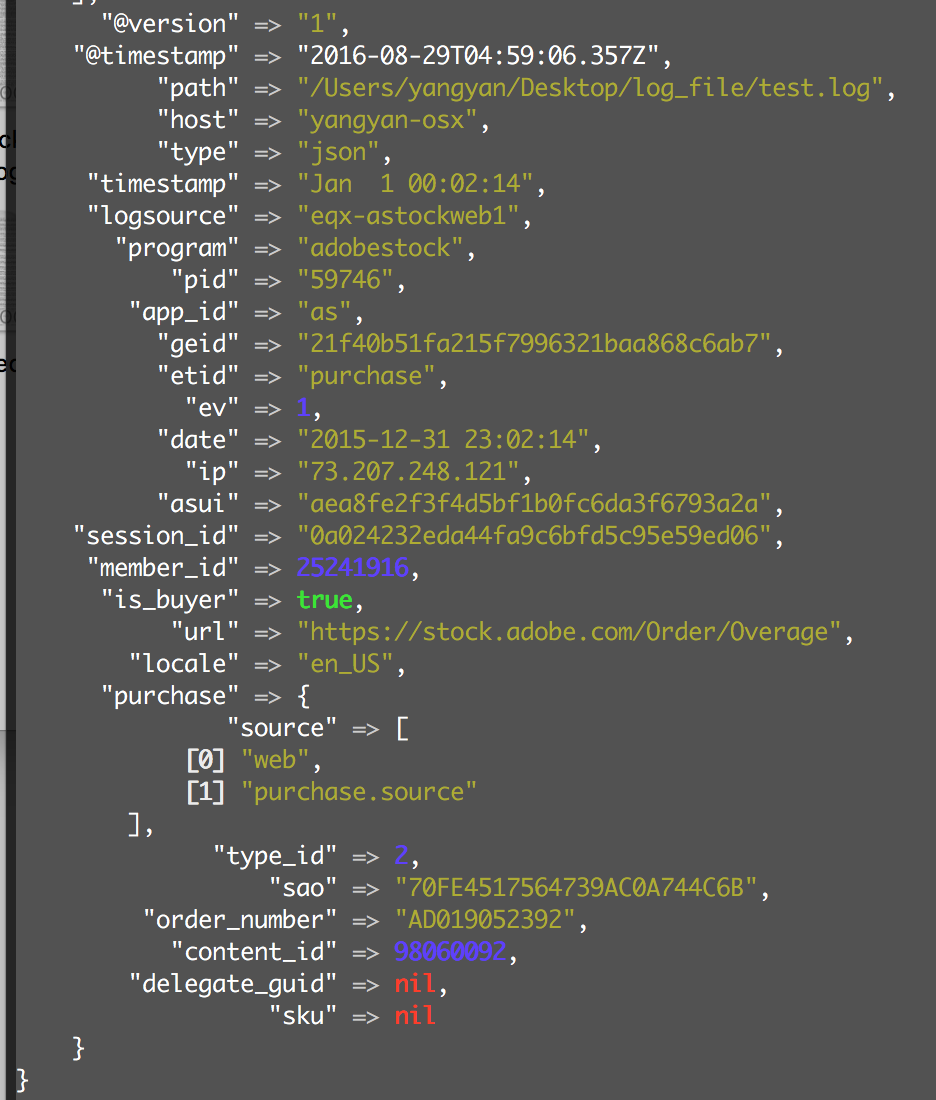

  stdout { codec => rubydebug }
}
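
Two things in the config above may be worth checking (a hedged reading, not tested against a specific Logstash version). First, each line starts with a syslog prefix, so `codec => json` on the `file` input will most likely fail to decode it; the json codec then keeps the raw line in `message` and adds a `_jsonparsefailure` tag, so it adds nothing that the grok and json filters don't already do. Second, `add_field => { "[purchase][source]" => "pSource" }` writes the literal string "pSource" into `[purchase][source]`; to copy the value into a top-level `pSource` field, the field reference has to go on the right-hand side using `%{...}` sprintf syntax. A sketch with just those two changes:

```
input {
  file {
    path => "/Users/yangyan/Desktop/log_file/test.log"
    start_position => "beginning"
    type => "json"
    # no json codec: each line is "<syslog prefix> {json}", so the default
    # plain codec is used and the JSON part is decoded by the json filter
  }
}

filter {
  grok {
    match => { "message" => "%{SYSLOGBASE} %{GREEDYDATA:message}" }
    overwrite => [ "message" ]
  }
  json {
    source => "message"
  }
  # copy the nested purchase.source value into a new top-level field
  mutate {
    add_field => { "pSource" => "%{[purchase][source]}" }
  }
  prune {
    whitelist_names => [ "timestamp", "app_id", "date", "member_id", "locale", "pSource" ]
  }
}
```

If an event has no `[purchase][source]`, the sprintf form leaves the literal text `%{[purchase][source]}` in `pSource`; `mutate { rename => { "[purchase][source]" => "pSource" } }` is an alternative that simply does nothing in that case.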

The original log file looks like this:

Jan 1 00:01:18 eqx-astockweb1 adobestock[59741]: {"app_id":"as","geid":"61dc639d38fb5dc98e6d65a5c5903d1e","etid":"purchase","ev":1,"date":"2015-12-31 23:01:18","mt":1451602878.69,"ip":"10.1.8.37","asui":"84e48f408d265e2f8ef36f26f75bdbcb","session_id":"2193804e13f5a640afb1a1b80613281f","member_id":-1,"is_buyer":false,"url":"https://stock.adobe.com/Callback/JEM/Provisioning","locale":"en_US","purchase":{"source":"jem","type_id":3,"sao":"323C16AD55DFE19B0A744C37","order_number":null,"content_id":null,"delegate_guid":null,"sku":"65260923"}}

The goal is to parse out only the fields I need (the ones listed in the prune whitelist above) and, if possible, convert the date field to a Date type. Currently everything defaults to a string, since the values are parsed out of the JSON.
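
For the date conversion, a minimal sketch using Logstash's `date` filter, added after the existing filters so the `date` field has already been extracted (and `prune` keeps it, since it is whitelisted). It assumes the JSON `date` is in UTC: the `mt` epoch value on the sample line, 1451602878.69, corresponds to 2015-12-31 23:01:18 UTC, which matches `date`, while the syslog prefix shows the host's local time one hour later.

```
filter {
  # ... existing grok, json, mutate and prune filters ...

  # Parse strings like "2015-12-31 23:01:18" into a Logstash timestamp and
  # store it back in the same "date" field (without target, it would go
  # into @timestamp instead)
  date {
    match    => [ "date", "yyyy-MM-dd HH:mm:ss" ]
    target   => "date"
    timezone => "UTC"   # assumption: the JSON date is UTC (see mt above)
  }
}
```

The elasticsearch output should then send `date` as an ISO 8601 string (e.g. `2015-12-31T23:01:18.000Z`), which Elasticsearch's default dynamic mapping normally detects as a `date`; the original `2015-12-31 23:01:18` form (space instead of `T`) is not detected, which is likely why it ends up as a string. Mappings of existing fields do not change, so if an index already maps `date` as a string, a new index or an explicit index template is needed.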

Please let me know!