Hello everyone,

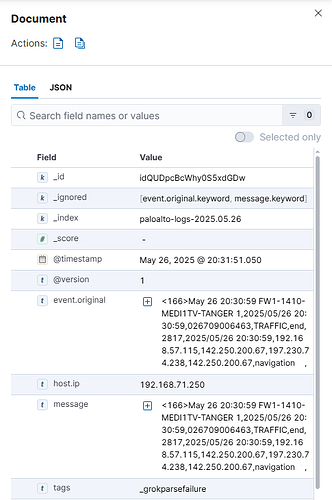

I'm having trouble getting logs from my Palo Alto firewall into my Elastic Stack (Elasticsearch, Logstash, Kibana).

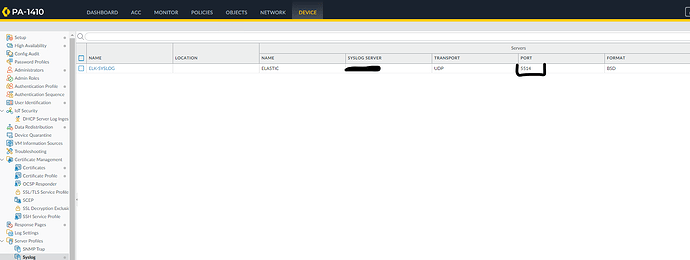

I configured the Palo Alto firewall to send logs in Syslog format to Logstash (see image 1).

I configured the /etc/logstash/conf.d/pa.conf file on the Elastic server in the following form to retrieve the logs:

    input {
      udp {
        port => 514
        type => "paloalto"
      }
    }

    filter {
      if [type] == "paloalto" {
        grok {
          match => { "message" => "%{SYSLOGTIMESTAMP:timestamp} %{HOSTNAME:firewall} %{DATA:process}: %{GREEDYDATA:log_message}" }
        }
        date {
          match => [ "timestamp", "MMM dd HH:mm:ss" ]
          target => "@timestamp"
        }
      }
    }

    output {
      if [type] == "paloalto" {
        elasticsearch {
          hosts => ["A.B.C.D:9200"]
          index => "paloalto-logs-%{+YYYY.MM.dd}"
        }
      }
    }
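
One way to check the Logstash side independently of the firewall is to send a single test datagram to the UDP input and see whether it shows up in the index. Below is a minimal Python sketch of that idea. The function names, the `test-fw` hostname and the message text are made up for illustration; the line only mimics the shape the grok pattern above expects and is not a real PAN-OS log. It also assumes Logstash is actually listening on the configured UDP port.

```python
import socket
from datetime import datetime
from typing import Optional


def build_test_message(hostname: str = "test-fw", process: str = "test") -> bytes:
    """Build a line shaped like 'MMM dd HH:mm:ss host process: message'."""
    # Hypothetical sample line for testing only, not a real firewall log.
    timestamp = datetime.now().strftime("%b %d %H:%M:%S")
    line = f"{timestamp} {hostname} {process}: hello from the UDP test"
    return line.encode("utf-8")


def send_test_syslog(host: str, port: int = 514, payload: Optional[bytes] = None) -> int:
    """Send one UDP datagram to host:port and return the number of bytes sent."""
    if payload is None:
        payload = build_test_message()
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        return sock.sendto(payload, (host, port))


# Example call (replace the placeholder with the Logstash server address):
# send_test_syslog("A.B.C.D", 514)
```

UDP is connectionless, so a successful send only means the datagram left the sending machine. Whether Logstash received it still has to be confirmed on the server, for example by looking for the test line in the `paloalto-logs-*` index.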

The ports are open, but no Palo Alto logs appear in Elasticsearch or Kibana. Thanks in advance for your help!