DWbank

January 10, 2020, 4:29pm

1

I am using kv to parse my fields because the fields change depending on the logs that are sent from the SIEM.

kv {

value_split => "='"

field_split => "' "

}

This works fine except for the very first field and the last one, because of the extra characters. How do I remove them?

DWbank

January 10, 2020, 4:37pm

2

It did not let me post the log; trying again.

Badger

January 10, 2020, 5:05pm

3

You can use mutate+gsub to trim leading or trailing characters

"someField", "^.", ""

will remove a leading character

"someField", "..$", ""

will remove two trailing characters.
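To see what those two patterns do outside Logstash, here is a minimal Python sketch. It is only an illustration: mutate's gsub uses Ruby regular expressions, but for these simple patterns on a single-line value Python's `re.sub` should behave the same way. The function names and sample values are made up for the example.

```python
import re

def trim_leading(value: str) -> str:
    """Drop the first character, like gsub with "^." and ""."""
    return re.sub(r"^.", "", value, count=1)

def trim_trailing_two(value: str) -> str:
    """Drop the last two characters, like gsub with "..$" and ""."""
    return re.sub(r"..$", "", value)

# Hypothetical sample values, not taken from a real event.
print(trim_leading("'30330"))             # 30330
print(trim_trailing_two("10.20.5.12/>"))  # 10.20.5.12
```

Note that the gsub only has an effect if the first element of each triple is the name of a field that actually exists on the event; "someField" above is a placeholder.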

DWbank

January 10, 2020, 5:59pm

4

I am a total newbie at this. I am not so worried about the entryid field, but the device field is required. Here is my logstash.conf file; I commented the gsub lines out after they did not change anything for me.

I am not sure those fields are defined yet. For the device field, what comes out is a field whose name is the IP address (IPgoesHERE) and whose value is />

input {

tcp {

port => 5142

type => "ossim-events"

codec => json {

charset => "CP1252"

}

}

syslog {

type => "syslog"

}

}

filter {

mutate {

# gsub => [

# "someField", "^.",""

# "someField", "..$",""

# ]

add_field => { "Agent_IP" => "%{host}"}

}

################### ALIENVAULT OSSIM Logs ###########################

if [type] == "ossim-events" {

kv {

value_split => "='"

field_split => "' "

}

}

}

output {

# stdout { }

elasticsearch {

hosts => ["localhost:9200"]

Thank you!!

Badger

January 10, 2020, 6:41pm

5

After the kv, what is the name of the field that you want to trim?

DWbank

January 10, 2020, 6:44pm

6

That's the problem: the field name ends up being the IP of the device. Because kv splits the fields on "' " and the last record ends with "'/>", the last field gets mangled.

I did find out that the first entry is defined as ID, which is fine. So it's only the last one that is a problem.

I hope this helps.

Badger

January 10, 2020, 7:12pm

7

You are not answering the question. What is the name of the field that you want to trim?

DWbank

January 12, 2020, 10:58pm

8

device is the last field. I am not sure I understand, though, because I was under the impression that kv defines all the fields. Since the last pair does not end as expected, kv does not define device, so that field does not exist.

Thank you

Badger

January 12, 2020, 11:31pm

9

Again you are not answering my question.

DWbank

January 13, 2020, 12:40pm

10

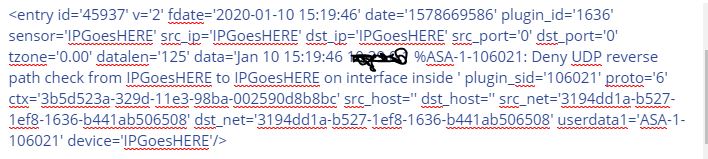

The field name changes on every log because it is the IP address, and the value is />. The field I blacked out in the image is the one I mean; it is an IP address.

DWbank

January 13, 2020, 12:54pm

11

The field name is the value that is supposed to belong to device. So if the last entry is device='10.10.10.10'/>, the field is named 10.10.10.10 and its value is />.

I think I know what needs to happen:

gsub => ["message", "/>", " />"] but that does not seem to work.

DWbank

January 14, 2020, 7:47pm

12

@Badger

So when I add

mutate {

gsub => ["message", "/>", ""]

}

logs stop processing, and htop shows a java process running at 200+% CPU. Normal is in the 60% range.

So I thought about it and tried

mutate {

gsub => ["message", "-", "~"]

}

just as a test, and it worked completely fine, changing all dashes to tildes.

Here is a complete, unmodified message (without the ~ substitution):

<entry id='30330' v='2' fdate='2020-01-14 19:36:16' date='1579030576' plugin_id='1686' sensor='10.2.1.2' src_ip='10.20.5.12' dst_ip='0.0.0.0' src_port='0' dst_port='0' tzone='0.00' datalen='153' data='Jan 14 19:36:16 MAINSERVER-1 Vpxa: info vpxa[2099663] [Originator@6876 sub=vpxLro opID=HB-host-124550@45884-6f9ee44f-1b] [VpxLRO] -- FINISH lro-183201 ' plugin_sid='20' proto='6' ctx='3b5d523a-329d-11e3-98ba-002590d8b8bc' src_host='90d8b8bc-cbce-11e7-9b24-0025991acb2a' dst_host='' src_net='e3c4c510-6b6e-281e-ee4e-12d131ebba47' dst_net='' userdata1='Vpxa' userdata2='2099663' userdata3='FINISH lro' userdata4='Originator@6876' userdata5='vpxLro' userdata6='HB-host-124550@45884-6f9ee44f-1b' userdata7='VpxLRO' idm_host_src='mainserver-1' device='10.20.5.12'/>

I am not sure why, but whenever the gsub touches the < or /> characters, it does not work.

I have also tried

grok {

match => { "message" => "%{IP:device}...$" }}

This has not worked either, but I am guessing my syntax is not correct. It also stops the logging.
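The idea behind that grok attempt, capturing the IP address that sits just before the last three characters of the message, can be checked outside Logstash. The Python sketch below is only a stand-in under stated assumptions: the simplified IPv4 regex is not grok's IP pattern, the function name is hypothetical, and the message is a shortened version of the sample above.

```python
import re
from typing import Optional

# Simplified IPv4 pattern; a stand-in for grok's %{IP}, which is stricter.
DEVICE_AT_END = re.compile(r"(\d{1,3}(?:\.\d{1,3}){3})...$")

def device_from_tail(message: str) -> Optional[str]:
    """Return the IP that is followed by exactly three characters at the end."""
    match = DEVICE_AT_END.search(message)
    return match.group(1) if match else None

# Shortened version of the sample event from this post.
sample = "<entry id='30330' sensor='10.2.1.2' src_ip='10.20.5.12' device='10.20.5.12'/>"
print(device_from_tail(sample))  # 10.20.5.12
```

Earlier addresses in the message are skipped because the pattern is anchored to the end of the string, so only the IP followed by '/> can match.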

Any help would be great

Badger

January 14, 2020, 8:17pm

13

mutate { gsub => [ "message", "<entry ", "", "message", "/>$", "" ] }

kv { source => "message" field_split => " " value_split => "=" trim_key => " " }

works fine for me.

"device" => "10.20.5.12",

"fdate" => "2020-01-14 19:36:16",

"src_ip" => "10.20.5.12",

"userdata7" => "VpxLRO",

"userdata4" => "Originator@6876",

"idm_host_src" => "mainserver-1",

"userdata2" => "2099663",

"userdata3" => "FINISH lro",

"proto" => "6",

"data" => "Jan 14 19:36:16 MAINSERVER-1 Vpxa: info vpxa[2099663] [Originator@6876 sub=vpxLro opID=HB-host-124550@45884-6f9ee44f-1b] [VpxLRO] -- FINISH lro-183201 ",

"userdata5" => "vpxLro",

...
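The two steps above (strip the `<entry ` prefix and the trailing `/>`, then split the remainder into key/value pairs) can be modelled in a few lines of Python. This is a rough sketch of the approach, not of how the kv filter is implemented: the `PAIR` regex and `parse_entry` function are hypothetical names, and the message is a shortened version of the sample event.

```python
import re

# key='value' pairs; values may contain spaces but no single quotes.
PAIR = re.compile(r"(\w+)='([^']*)'")

def parse_entry(message: str) -> dict:
    """Strip the XML-style wrapper, then collect key='value' pairs."""
    body = re.sub(r"<entry ", "", message)  # mirrors the first gsub triple
    body = re.sub(r"/>$", "", body)         # mirrors the second gsub triple
    return dict(PAIR.findall(body))

# Shortened version of the sample event from this thread.
sample = ("<entry id='30330' fdate='2020-01-14 19:36:16' "
          "userdata3='FINISH lro' device='10.20.5.12'/>")
fields = parse_entry(sample)
print(fields["device"])     # 10.20.5.12
print(fields["userdata3"])  # FINISH lro
```

Once the wrapper is gone, the last pair ends with a plain closing quote like every other pair, which is why device comes out as a normal field.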

DWbank

January 15, 2020, 12:59pm

14

I am beginning to think there is something wrong with my system. This conf works but does not get me what I want.

input {

tcp {

port => 5142

type => "ossim-events"

codec => json {

charset => "CP1252"

}

}

}

filter {

################### ALIENVAULT OSSIM Logs ###########################

if [type] == "ossim-events" {

kv {

source => "message"

field_split => "' "

value_split => "='"

}

}

}

output {

elasticsearch {

hosts => ["localhost:9200"]

user => username

password => password

}

}

This one does not. Logs stop completely.

input {

tcp {

port => 5142

type => "ossim-events"

codec => json {

charset => "CP1252"

}

}

}

filter {

mutate {gsub => [ "message", "<entry ", "", "message", "/>$", "" ] }

################### ALIENVAULT OSSIM Logs ###########################

if [type] == "ossim-events" {

kv {

source => "message"

field_split => " "

value_split => "="

trim_key => " "

}

}

}

output {

elasticsearch {

hosts => ["localhost:9200"]

user => username

password => password

}

}

Is there anything else I can look at? I am thinking it's not conf related.

I added a file output with a path and the rubydebug codec, and I have a record showing that it does parse correctly.

DWbank

January 15, 2020, 3:58pm

15

@Badger

Thank you, that worked. I had to create a new index and all new policies; I am not sure whether the old index was corrupt or what.
