I want to replace @timestamp in Kibana/Logstash with the time attribute from my log file. What should I do?

Thank you.

Use the Logstash date filter. If you search this forum you should be able to find lots of examples, as this is a very common question.

Where do I put the code?

Sorry, I have only just started learning the ELK stack.

See the Logstash configuration examples for inspiration and try to ask a more specific question (for example, we can't give you specific help without knowing what your log looks like).

https://www.elastic.co/guide/en/logstash/current/config-examples.html

Code in logstash.conf

Display in Kibana's Discover menu

The attribute "AssociationTime" should replace @timestamp.

I tried, but I cannot replace @timestamp in Kibana/Logstash with the time attribute from my log.

Please help and guide me.

Filters are evaluated in the order listed in the configuration file so the date filter must follow the csv filter.
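A minimal sketch of that ordering, assuming a shortened, illustrative column list (only "Username" and "AssociationTime") and a day/month/year timestamp; adjust both to the real file:

```
filter {
  # 1. csv runs first and creates the AssociationTime field.
  csv {
    separator => ","
    columns => ["Username", "AssociationTime"]   # shortened list, for illustration only
  }
  # 2. date runs second, so AssociationTime now exists and can be parsed.
  date {
    match => [ "AssociationTime", "dd/MM/yyyy HH:mm" ]
  }
}
```

If the two blocks were swapped, the date filter would run against an event that has no AssociationTime field yet and would leave @timestamp unchanged.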

Is this code correct?

    date {
      match ==> [ "AssociationTime", "dd/mm/yyyy hh:mm" ]
    }

No, use dd/MM/yyyy HH:mm. See the docs.
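The pattern letters are case-sensitive, which is why the lowercase version fails. A commented sketch of the corrected filter, assuming the field holds a 24-hour time such as the made-up value 25/12/2016 14:30:

```
filter {
  date {
    # dd   = day of month
    # MM   = month of year   (lowercase mm means minutes)
    # yyyy = year
    # HH   = hour, 0-23      (lowercase hh means the 12-hour clock, 1-12)
    # mm   = minute of hour
    match => [ "AssociationTime", "dd/MM/yyyy HH:mm" ]
  }
}
```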

I used this code in logstash.conf, but @timestamp is still the time the CSV file was imported, not the time from the "AssociationTime" attribute.

    date {
      match ==> [ "AssociationTime", "dd/MM/yyyy HH:mm" ]
    }

Show us an example event. Use a stdout { codec => rubydebug } output or copy/paste from Kibana's JSON tab.

Expand an example event using the little triangle to the left of the timestamp and you'll find a JSON tab.
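One way to narrow this down, sketched under the assumption that the date filter's default failure tag has not been changed: by default the filter adds the tag `_dateparsefailure` to any event whose field it could not parse, so printing only those events shows whether the pattern matches the data.

```
output {
  # Print only the events that the date filter failed to parse.
  if "_dateparsefailure" in [tags] {
    stdout { codec => rubydebug }
  }
}
```

An event that has no AssociationTime field at all is not tagged, because the date filter has nothing to parse; in that case check the filter order and the csv column list instead.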

Does your filter really look like this?

    date {
      match ==> [ "AssociationTime", "dd/MM/yyyy HH:mm" ]
    }

==> should be =>.

Yes, I have tried that, but it still does not work.

Post your configuration so we can try it out. Use copy/paste. Do not post a screenshot.

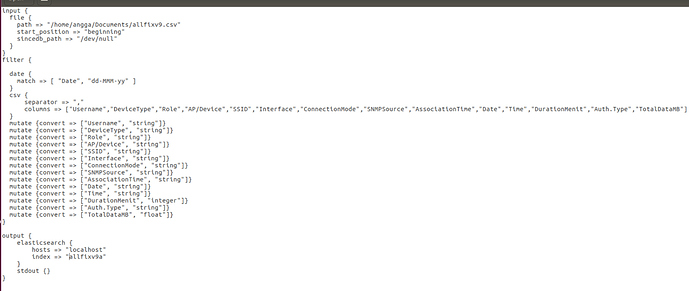

My configuration:

    input {
      file {
        path => "/home/angga/Documents/allfixv12.csv"
        start_position => "beginning"
        sincedb_path => "/dev/null"
      }
    }
    filter {
      date {
        match => [ "AssociationTime", "dd/MM/yyyy HH:mm" ]
        target => "@timestamp"
        timezone => "Asia/Jakarta"
      }
      csv {
        separator => ","
        columns => ["Username","DeviceType","Role","AP/Device","SSID","Interface","ConnectionMode","SNMPSource","AssociationTime","Date","Time","DurationMenit","Auth.Type","TotalDataMB"]
      }
      mutate {convert => ["Username", "string"]}
      mutate {convert => ["DeviceType", "string"]}
      mutate {convert => ["Role", "string"]}
      mutate {convert => ["AP/Device", "string"]}
      mutate {convert => ["SSID", "string"]}
      mutate {convert => ["Interface", "string"]}
      mutate {convert => ["ConnectionMode", "string"]}
      mutate {convert => ["SNMPSource", "string"]}
      mutate {convert => ["Date", "string"]}
      mutate {convert => ["Time", "string"]}
      mutate {convert => ["DurationMenit", "integer"]}
      mutate {convert => ["Auth.Type", "string"]}
      mutate {convert => ["TotalDataMB", "float"]}
    }
    output {
      elasticsearch {
        hosts => "localhost"
        index => "testajaah"
      }
      stdout { codec => rubydebug }
    }

Filters are applied in order, so you must place the date filter after the csv filter; otherwise the AssociationTime field does not exist yet when the date filter runs.

Is this it?

    input {
      file {
        path => "/home/angga/Documents/allfixv12.csv"
        start_position => "beginning"
        sincedb_path => "/dev/null"
      }
    }
    filter {
      csv {
        separator => ","
        columns => ["Username","DeviceType","Role","AP/Device","SSID","Interface","ConnectionMode","SNMPSource","AssociationTime","Date","Time","DurationMenit","Auth.Type","TotalDataMB"]
      }
      date {
        match => [ "AssociationTime", "dd/MM/yyyy HH:mm" ]
        target => "@timestamp"
        timezone => "Asia/Jakarta"
      }
      mutate {convert => ["Username", "string"]}
      mutate {convert => ["DeviceType", "string"]}
      mutate {convert => ["Role", "string"]}
      mutate {convert => ["AP/Device", "string"]}
      mutate {convert => ["SSID", "string"]}
      mutate {convert => ["Interface", "string"]}
      mutate {convert => ["ConnectionMode", "string"]}
      mutate {convert => ["SNMPSource", "string"]}
      mutate {convert => ["Date", "string"]}
      mutate {convert => ["Time", "string"]}
      mutate {convert => ["DurationMenit", "integer"]}
      mutate {convert => ["Auth.Type", "string"]}
      mutate {convert => ["TotalDataMB", "float"]}
    }
    output {
      elasticsearch {
        hosts => "localhost"
        index => "testajaah"
      }
      stdout { codec => rubydebug }
    }

Why don't you try it? But yes, this looks better.