I have a question: why are there no connectors for databases like MongoDB and SQLite to capture audit logs, when there are connectors for SQL Server and MySQL? And if there is no connector in the Elastic integrations that captures MongoDB audit logs, how can I do that?

You would need to build something yourself, for example a Python script that extracts those logs. You could then send them directly to Elasticsearch, or write them to a file and read that file with Elastic Agent.
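Here is a minimal sketch of such a script. It assumes a self-managed MongoDB deployment that writes its audit log as JSON, one document per line (`auditLog.format: JSON`). The `atype` and `ts` field names follow MongoDB's audit message format. The target index name `mongodb-audit`, the output document shape, and the helper names are hypothetical choices for illustration. The script uses only the Python standard library and the Elasticsearch `_bulk` REST endpoint.

```python
import json
import urllib.request
from typing import Iterable, Iterator


def parse_audit_lines(lines: Iterable[str]) -> Iterator[dict]:
    """Turn MongoDB JSON audit log lines into documents for Elasticsearch.

    Assumes one JSON document per line (auditLog.format: JSON).
    """
    for raw in lines:
        raw = raw.strip()
        if not raw:
            continue  # skip blank lines
        try:
            entry = json.loads(raw)
        except json.JSONDecodeError:
            continue  # skip malformed or partially written lines
        ts = entry.get("ts")
        # Timestamps are Extended JSON, e.g. {"$date": "2024-01-01T00:00:00.000+00:00"}
        if isinstance(ts, dict):
            ts = ts.get("$date")
        yield {
            "@timestamp": ts,
            "event": {"action": entry.get("atype")},  # e.g. "authenticate"
            "mongodb": {"audit": entry},  # keep the original record unchanged
        }


def to_bulk_body(docs: Iterable[dict], index: str) -> str:
    """Build an NDJSON body for the Elasticsearch _bulk API."""
    lines = []
    for doc in docs:
        lines.append(json.dumps({"index": {"_index": index}}))
        lines.append(json.dumps(doc))
    return "\n".join(lines) + "\n"  # _bulk requires a trailing newline


def send_bulk(body: str, es_url: str, api_key: str) -> dict:
    """POST the bulk body to Elasticsearch using an API key (hypothetical settings)."""
    req = urllib.request.Request(
        f"{es_url.rstrip('/')}/_bulk",
        data=body.encode("utf-8"),
        headers={
            "Content-Type": "application/x-ndjson",
            "Authorization": f"ApiKey {api_key}",
        },
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())
```

For the "write to a file" route, you could instead write each document yielded by `parse_audit_lines` as one JSON line to a file and let Elastic Agent tail it. A real script would also need to remember how far it has already read, so that it does not resend the same lines on every run.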

Can you please share any resources on this, or any community repository of Python scripts for it?

Unfortunately I do not have any information about this.

Also, I don't think the Community Edition of MongoDB provides audit logging. I know that Atlas has an audit log, and the MongoDB documentation explains how to extract those logs.
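After you download an audit log (Atlas typically delivers log downloads as gzip-compressed files), you could unpack it into a plain NDJSON file for Elastic Agent or Filebeat to read. The step below is a sketch that makes two assumptions. First, the file paths are hypothetical. Second, only lines that parse as JSON are kept.

```python
import gzip
import json
from pathlib import Path


def gz_audit_to_ndjson(src: Path, dest: Path) -> int:
    """Decompress a gzip audit log and append its valid JSON lines to dest.

    Returns the number of lines written. Paths are hypothetical examples.
    """
    written = 0
    with gzip.open(src, "rt", encoding="utf-8") as fin, open(dest, "a", encoding="utf-8") as fout:
        for line in fin:
            line = line.strip()
            if not line:
                continue  # skip blank lines
            try:
                json.loads(line)  # validate only; the line is written unchanged
            except json.JSONDecodeError:
                continue  # drop lines that are not valid JSON
            fout.write(line + "\n")
            written += 1
    return written
```

The function appends instead of overwriting, so an agent that is already tailing `dest` only sees the new lines.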

Elastic Agent has the MongoDB integration for collecting logs and metrics from MongoDB. Does this integration not meet your use case? As for SQLite, Logstash has a Sqlite input plugin (Logstash Reference 8.12).

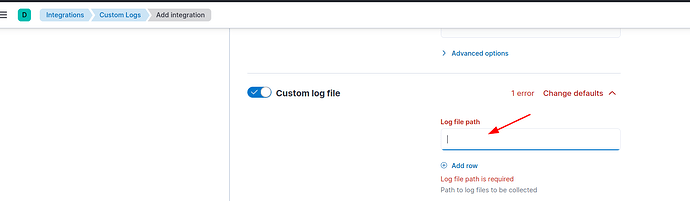
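As far as I know, SQLite itself has no audit log feature, so what gets collected is usually a table that the application maintains. As a Python alternative to the Logstash plugin, here is a sketch that polls such a table incrementally with the standard `sqlite3` module. It tracks the last `rowid` it has seen so that rows are not read twice. The table name `audit_events` and its columns are hypothetical.

```python
import sqlite3
from typing import List, Tuple


def fetch_new_rows(conn: sqlite3.Connection, table: str, last_rowid: int) -> Tuple[List[dict], int]:
    """Return rows added after last_rowid, plus the new high-water mark.

    `table` must be a trusted identifier: it is interpolated, not bound.
    """
    cur = conn.execute(
        f"SELECT rowid AS _rowid, * FROM {table} WHERE rowid > ? ORDER BY rowid",
        (last_rowid,),
    )
    cols = [c[0] for c in cur.description]  # column names, including _rowid
    rows = [dict(zip(cols, r)) for r in cur.fetchall()]
    if rows:
        last_rowid = rows[-1]["_rowid"]  # remember the newest row seen
    return rows, last_rowid
```

This assumes rows are only ever appended. In a table without an `INTEGER PRIMARY KEY`, rowids can change after `VACUUM`, so an explicit id column is the safer choice. Persist the returned high-water mark between runs, then ship the rows the same way as any other JSON documents.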

Another alternative is to use the Custom Logs integration, or to collect the logs directly with Filebeat. The parsing can then be done with an ingest pipeline.
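Here is a sketch of such an ingest pipeline. It assumes that each log line arrives in the `message` field, as Filebeat normally sends it, and that each line contains one MongoDB JSON audit record. The pipeline uses the `json`, `date`, and `rename` ingest processors and is created through the `PUT _ingest/pipeline/<id>` REST API. The pipeline id `mongodb-audit-parse` and the `mongodb.audit` target field are hypothetical names. The pipeline still has to be referenced from your collection settings, for example through Filebeat's per-input `pipeline` option.

```python
import json
import urllib.request


def build_audit_pipeline() -> dict:
    """Ingest pipeline body that parses a MongoDB JSON audit line from `message`."""
    return {
        "description": "Parse MongoDB JSON audit log lines (sketch)",
        "processors": [
            # Parse the raw line into a nested object
            {"json": {"field": "message", "target_field": "mongodb.audit"}},
            # Use the audit timestamp ({"$date": "..."}) as @timestamp
            {
                "date": {
                    "field": "mongodb.audit.ts.$date",
                    "formats": ["ISO8601"],
                    "ignore_failure": True,
                }
            },
            # Expose the audit action type (e.g. "authenticate") as event.action
            {
                "rename": {
                    "field": "mongodb.audit.atype",
                    "target_field": "event.action",
                    "ignore_missing": True,
                }
            },
        ],
    }


def put_pipeline(es_url: str, api_key: str, pipeline_id: str = "mongodb-audit-parse") -> dict:
    """Create or update the pipeline via PUT _ingest/pipeline/<id>."""
    req = urllib.request.Request(
        f"{es_url.rstrip('/')}/_ingest/pipeline/{pipeline_id}",
        data=json.dumps(build_audit_pipeline()).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"ApiKey {api_key}"},
        method="PUT",
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())
```

Before pointing real traffic at the pipeline, you can check it against a few sample lines with the `_ingest/pipeline/_simulate` API.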
