I have a 4-node Logstash setup that collects logs from several OpenShift (OCP) clusters, and all of these OCP clusters send their logs through the same pipeline. Lately, the logs have been arriving intermittently rather than continuously. Could you please help me identify where the issue might be? Thank you.

Welcome back to the community!

Intermittently arriving data usually points to input or pipeline bottlenecks. Check the Logstash logs for backpressure warnings, and check pipeline worker usage and queue size (for example through the Logstash node stats API). Also verify network connectivity between the OCP clusters and all 4 Logstash nodes. Testing each node individually can help isolate where the data is being dropped.
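To check each node separately, one option is Logstash's monitoring API (`GET /_node/stats/pipelines`, served on port 9600 by default). Below is a minimal Python sketch, assuming the API is reachable from wherever you run it; the node names in `NODES` are hypothetical placeholders, and only the per-pipeline `events` counters are read because the `queue` section differs between memory and persisted queues.

```python
import json
import urllib.request

# Hypothetical node addresses; 9600 is the default Logstash API port.
NODES = ["logstash-1:9600", "logstash-2:9600", "logstash-3:9600", "logstash-4:9600"]


def summarize(stats: dict) -> dict:
    """Reduce a /_node/stats/pipelines response to per-pipeline event counters."""
    summary = {}
    for pipeline_id, data in stats.get("pipelines", {}).items():
        events = data.get("events") or {}
        summary[pipeline_id] = {
            "in": events.get("in", 0),
            "out": events.get("out", 0),
            # Time inputs spent waiting to push events into the queue;
            # a value that keeps climbing suggests backpressure.
            "queue_push_ms": events.get("queue_push_duration_in_millis", 0),
        }
    return summary


def fetch_stats(node: str, timeout: float = 5.0) -> dict:
    """Fetch pipeline stats from one node's monitoring API."""
    url = f"http://{node}/_node/stats/pipelines"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def report(nodes=NODES):
    """Print one summary line per node; unreachable nodes are reported, not raised."""
    for node in nodes:
        try:
            print(node, summarize(fetch_stats(node)))
        except OSError as exc:  # refused connection, timeout, DNS or HTTP error
            print(node, "unreachable:", exc)
```

Calling `report()` a few times, roughly a minute apart, gives a rough picture: if `in` stops increasing on one node while the others keep growing, that node is not receiving data; if `queue_push_ms` keeps climbing, the inputs are waiting on the pipeline.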

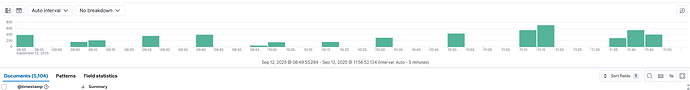

Hello Rafa, I checked and found something odd. In the original raw logs, entries were written continuously (a new entry every minute). I cannot attach the raw logs because they are confidential transaction logs. The image above shows the Filebeat logs: Filebeat did not read any new lines for about 5 minutes, so it stopped. What should I do? Thank you.

Hi,

What you’re seeing is normal Filebeat behavior. The message “File is inactive. Closing because close_inactive of 5m0s reached” means Filebeat closed the harvester because it had not read any new lines from the file for 5 minutes. When new data is written, Filebeat detects the change on its next scan, reopens the file, and continues reading from where it left off.

If logs are written every minute but Filebeat still marks the file as inactive, you may need to adjust the close_inactive and scan_frequency settings in your Filebeat config to better match your log write pattern.
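Before changing those settings, it can help to confirm the real write pattern. A minimal Python sketch that lists every gap between consecutive log lines longer than the 5-minute `close_inactive` default; it assumes (hypothetically) that each line starts with an ISO-8601 timestamp such as `2024-05-01T10:00:00`, so the parsing will need adjusting to your actual format.

```python
from datetime import datetime, timedelta

# Filebeat's default close_inactive is 5 minutes.
CLOSE_INACTIVE = timedelta(minutes=5)


def find_gaps(lines, threshold=CLOSE_INACTIVE):
    """Return (previous, current) timestamp pairs whose gap exceeds threshold.

    Assumes each line begins with an ISO-8601 timestamp followed by whitespace.
    """
    gaps = []
    prev = None
    for line in lines:
        parts = line.split(maxsplit=1)
        if not parts:
            continue  # blank line
        try:
            ts = datetime.fromisoformat(parts[0])
        except ValueError:
            continue  # no leading timestamp (e.g. a stack-trace continuation line)
        if prev is not None and ts - prev > threshold:
            gaps.append((prev, ts))
        prev = ts
    return gaps
```

For example, `find_gaps(open("app.log"))` (path hypothetical) returns an empty list if no two entries are more than 5 minutes apart. If that is the case and Filebeat still reports the file as inactive, the timestamps may not reflect when the data actually reached the file (for example, buffered writes), or the application may be writing to another file.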

Also check whether the logs are being rotated or written to a different file path, because Filebeat treats each new file separately and only picks up files that match the paths in its configuration.
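On Linux, Filebeat by default identifies a file by its inode and device rather than by its name, so rotation or truncation changes what it sees even when the path stays the same. A minimal Python sketch for checking this on the host or container where the log is written, assuming you can run Python there; take one snapshot, wait about a minute, take another, and compare.

```python
import os


def snapshot(path):
    """Record the identity (device, inode) and size of the file at path."""
    st = os.stat(path)
    return (st.st_dev, st.st_ino, st.st_size)


def classify_change(before, after):
    """Compare two snapshots of the same path taken some time apart."""
    if before[:2] != after[:2]:
        return "rotated"    # the path now points to a different file
    if after[2] < before[2]:
        return "truncated"  # same file, but it shrank (copytruncate-style rotation)
    if after[2] == before[2]:
        return "idle"       # nothing new written between the two snapshots
    return "growing"
```

If you see "idle" while the application claims to be logging every minute, the data is probably going to a different path; "rotated" or "truncated" would explain why Filebeat sees a new or reset file.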

How are you shipping the logs? Is it Filebeat sending to Logstash?

Can you share both your Filebeat configuration and your Logstash pipeline?

Also, did you check the Logstash logs for any errors?
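If the log file is large, a quick filter helps. A minimal Python sketch that keeps only WARN, ERROR, and FATAL entries, assuming the default plain-text layout that ships with Logstash (lines like `[2024-05-01T10:00:00,123][WARN ][logstash.outputs.elasticsearch][main] ...`); it will not match if you customized `log4j2.properties` or switched to `log.format: json`.

```python
import re

# Default Logstash plain-log layout: [timestamp][LEVEL][logger]...
# The level is padded to 5 characters, hence the optional trailing spaces.
LEVEL_RE = re.compile(r"^\[[^\]]+\]\[(WARN|ERROR|FATAL)\s*\]")


def problem_lines(lines):
    """Yield (level, line) for WARN/ERROR/FATAL entries in a Logstash log."""
    for line in lines:
        match = LEVEL_RE.match(line)
        if match:
            yield match.group(1), line.rstrip("\n")
```

For example, iterate over `problem_lines(open(path))`, where `path` is typically `/var/log/logstash/logstash-plain.log` for package installs, and run it on each of the 4 nodes so you can tell whether the warnings come from one node or all of them.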