I would like to convert the following mapping to the geo_point type:

    "location": {
      "properties": {
        "lat": {
          "type": "float"
        },
        "lon": {
          "type": "float"
        }
      }
    }

The issue I am running into is that the field source.geo.location is not of type geo_point. I would like to modify the location mapping to look like this:

    "location": {
      "type": "geo_point",
      "properties": {
        "lat": {
          "type": "float"
        },
        "lon": {
          "type": "float"
        }
      }
    }

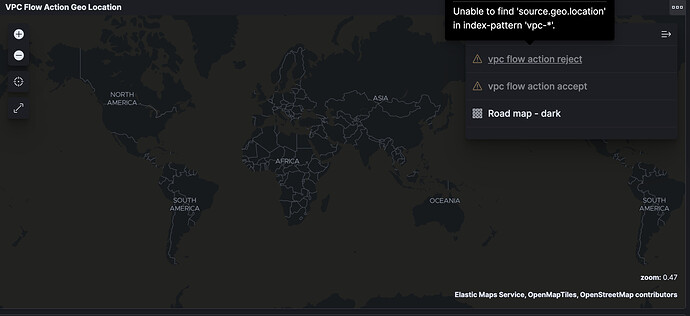

I have reviewed this issue: Unable to find source.geo.location in index pattern logstash-*, but it doesn't answer my question. I am using the preconfigured Elastic Common Schema (ECS) mapping from the aws module. This particular issue is related to the VPC flow logs visualization. I have attached a screenshot for extra clarity.

My first attempt at fixing the problem:

    PUT /vpc-7.10.2-2021.03.05/_mapping
    {
      "mappings": {
        "properties": {
          "source.geo.location": {
            "type": "geo_point"
          }
        }
      }
    }

This just produced the following error:

    {
      "error" : {
        "root_cause" : [
          {
            "type" : "mapper_parsing_exception",
            "reason" : "Root mapping definition has unsupported parameters: [mappings : {properties={source.geo.location={type=geo_point}}}]"
          }
        ],
        "type" : "mapper_parsing_exception",
        "reason" : "Root mapping definition has unsupported parameters: [mappings : {properties={source.geo.location={type=geo_point}}}]"
      },
      "status" : 400
    }
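Reading the error, it looks like the request body is being parsed as the root mapping itself, so the outer `mappings` key is rejected as an unknown parameter. As far as I understand, the `mappings` wrapper belongs in a create-index request, while `PUT <index>/_mapping` expects `properties` at the top level. A minimal Python sketch of that difference (the helper name `to_put_mapping_body` is hypothetical, used only for illustration, and nothing here talks to a cluster):

```python
import json


def to_put_mapping_body(body: dict) -> dict:
    """Return the body without a top-level "mappings" wrapper.

    Assumption: the update-mapping endpoint wants "properties" at the root,
    so a create-index style wrapper has to be removed first.
    """
    return body.get("mappings", body)


# The body from the first attempt, wrapped in "mappings".
attempt = {
    "mappings": {
        "properties": {
            "source.geo.location": {"type": "geo_point"}
        }
    }
}

print(json.dumps(to_put_mapping_body(attempt), indent=2))
```

Removing the wrapper should only get rid of this particular parsing error; it does not by itself mean the type change will be accepted.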

This doesn't work either:

    PUT /vpc-7.10.2-2021.03.05/_mapping
    {
      "mappings": {
        "properties": {
          "location": {
            "type": "geo_point"
          }
        }
      }
    }

This does not help either:

    PUT /vpc-7.10.2-2021.03.05/_mapping
    {
      "properties": {
        "location": {
          "type": "geo_point"
        }
      }
    }

This one doesn't do it either:

    PUT /vpc-*/_mapping
    {
      "source": {
        "geo": {
          "location": {
            "properties": {
              "type": "geo_point"
            }
          }
        }
      }
    }
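For comparison, my understanding is that a nested mapping needs a `properties` level under every parent object, with `type` set directly on the leaf field and not inside its own `properties`. A rough Python sketch that builds such a body from a dotted path (the helper name `nested_mapping` is hypothetical and only illustrates the shape):

```python
import json


def nested_mapping(dotted_path: str, field_type: str) -> dict:
    """Build a mapping body with a "properties" level under every parent object."""
    node = {"type": field_type}
    # Wrap from the leaf outwards: location, then geo, then source.
    for name in reversed(dotted_path.split(".")):
        node = {"properties": {name: node}}
    return node


body = nested_mapping("source.geo.location", "geo_point")
print(json.dumps(body, indent=2))
```

As far as I know, Elasticsearch does not allow changing the type of a field that is already mapped, so a body like this would probably have to go into a new index or an index template, followed by a reindex, and not into the existing vpc-7.10.2-2021.03.05 index.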