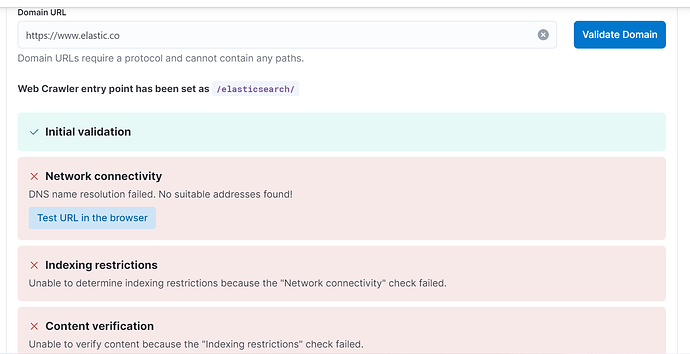

When we try to validate the domain, we get the error "DNS name resolution failed. No suitable addresses found!", even though we can open the URL in a browser.
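
One way to narrow this down is to check name resolution from the Linux VM itself. The crawler runs inside Enterprise Search, so it presumably resolves hostnames on that VM, not on the machine where the browser works. The following is a minimal Python 3 sketch, assuming Python is available on the VM. `resolve_host` and `classify` are hypothetical helper names, and `www.example.com` is a placeholder for the domain being validated. `classify` only roughly mirrors the loopback/private distinction drawn by the `dns.allow_*` settings listed later in this post; Python's `is_private` covers a somewhat broader set of reserved ranges.

```python
from __future__ import annotations

import ipaddress
import socket
import sys


def resolve_host(hostname: str) -> list[str]:
    """Return the unique IP addresses the local resolver gives for hostname.

    Raises socket.gaierror if resolution fails, which is what the crawler's
    "DNS name resolution failed" error suggests is happening on this host.
    """
    infos = socket.getaddrinfo(hostname, None)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({info[4][0] for info in infos})


def classify(address: str) -> str:
    """Roughly label an address as loopback, private, or public."""
    ip = ipaddress.ip_address(address)
    # Check loopback first: 127.0.0.0/8 is also reported as private by ipaddress.
    if ip.is_loopback:
        return "loopback"
    if ip.is_private:
        return "private"
    return "public"


if __name__ == "__main__":
    # Placeholder hostname; pass the real domain as the first argument.
    host = sys.argv[1] if len(sys.argv) > 1 else "www.example.com"
    try:
        for addr in resolve_host(host):
            print(f"{host} -> {addr} ({classify(addr)})")
    except (OSError, UnicodeError) as exc:  # socket.gaierror is an OSError subclass
        print(f"{host} could not be resolved on this machine: {exc}")
```

If the public hostname fails to resolve here but resolves on the machine running the browser, the VM's resolver configuration (or the need to go through the proxy) is the likely difference rather than the crawler itself.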

We have a standalone Elasticsearch instance running on Windows and Enterprise Search configured on a Linux VM.

We can crawl internal URLs but not public ones. We also tried adding the settings below to the Enterprise Search configuration. Please suggest possible solutions to resolve this.

```yaml
connector.crawler.http.proxy.host: xxxxxxxxxxxxxx
connector.crawler.http.proxy.port: 8080
connector.crawler.http.proxy.protocol: https
connector.crawler.security.dns.allow_private_networks_access: true
connector.crawler.security.dns.allow_loopback_access: true

crawler.http.proxy.host: xxxxxxxxxxx
crawler.http.proxy.port: 8080
crawler.http.proxy.protocol: https
crawler.security.dns.allow_loopback_access: true
crawler.security.dns.allow_private_networks_access: true
```
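
Because internal sites crawl fine while public ones fail, it may also help to confirm from the same VM that the proxy actually forwards requests to public sites. The following is a minimal sketch using Python's standard `urllib.request.ProxyHandler`. `PROXY_HOST` is a hypothetical stand-in for the elided proxy host, `https://www.example.com/` is a placeholder target, and `build_proxy_url` and `fetch_via_proxy` are hypothetical helper names. The sketch also assumes the proxy accepts plain HTTP connections on port 8080 (HTTPS targets are then tunnelled through it). That assumption is common for forward proxies, but it may not match this setup.

```python
from __future__ import annotations

import urllib.request


def build_proxy_url(host: str, port: int, scheme: str = "http") -> str:
    """Compose a proxy URL such as http://proxy.example.internal:8080."""
    return f"{scheme}://{host}:{port}"


def fetch_via_proxy(url: str, proxy_url: str, timeout: float = 10.0) -> int:
    """Fetch url through the given proxy and return the HTTP status code."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    opener = urllib.request.build_opener(handler)
    with opener.open(url, timeout=timeout) as response:
        return response.status


if __name__ == "__main__":
    # Hypothetical value: replace with the real proxy host from the config above.
    PROXY_HOST = "proxy.example.internal"
    proxy = build_proxy_url(PROXY_HOST, 8080)
    try:
        status = fetch_via_proxy("https://www.example.com/", proxy)
        print(f"Reached the site through {proxy}: HTTP {status}")
    except OSError as exc:  # URLError and HTTPError are OSError subclasses
        print(f"Request through {proxy} failed: {exc}")
```

If this request succeeds through the proxy while a direct DNS lookup on the VM fails, the failure most likely comes from the crawler resolving or connecting directly instead of going through the proxy.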