My Elasticsearch version: 8.10.4

My Filebeat version: 8.7

Here is the output of these checks:

    $ filebeat test output
    elasticsearch: https://172.10.110.29:9200...
      parse url... OK
      connection...
        parse host... OK
        dns lookup... OK
        addresses: 172.10.110.29
        dial up... OK
      TLS...
        security: server's certificate chain verification is enabled
        handshake... OK
        TLS version: TLSv1.3
        dial up... OK
      talk to server... OK
      version: 8.10.4
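
The `filebeat test output` result above is easy to read by eye, but it can also be checked mechanically when comparing runs. A minimal Python sketch, assuming the `step... RESULT` line format shown above; `failed_steps` is an illustrative helper name, not part of Filebeat:

```python
import re
from typing import List

# Matches "<step>... <result>". The step name may not contain dots, so the URL header
# line and informational lines such as "addresses: 172.10.110.29" do not match.
STEP = re.compile(r"^\s*(?P<step>[^.]+?)\.\.\.\s*(?P<result>.*)$")


def failed_steps(output: str) -> List[str]:
    """Return "step: result" for every step whose result is present and is not OK."""
    failures = []
    for line in output.splitlines():
        match = STEP.match(line)
        if not match:
            continue
        result = match.group("result").strip()
        # Section headers such as "connection..." and "TLS..." have an empty result.
        if result and result != "OK":
            failures.append(f"{match.group('step').strip()}: {result}")
    return failures


# Example (not run here):
# import subprocess
# out = subprocess.run(["filebeat", "test", "output"], capture_output=True, text=True).stdout
# print(failed_steps(out) or "all steps OK")
```

For the output above every step reports OK, so the list would be empty.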

    $ curl -XGET "https://172.10.110.29:9200" -u elastic --cacert /etc/filebeat/certs/http_ca.crt
    Enter host password for user 'elastic':
    {
      "name" : "elstack",
      "cluster_name" : "elasticsearch",
      "cluster_uuid" : "3lZ748BgSiG2KRijMrorgQ",
      "version" : {
        "number" : "8.10.4",
        "build_flavor" : "default",
        "build_type" : "deb",
        "build_hash" : "b4a62ac808e886ff032700c391f45f1408b2538c",
        "build_date" : "2023-10-11T22:04:35.506990650Z",
        "build_snapshot" : false,
        "lucene_version" : "9.7.0",
        "minimum_wire_compatibility_version" : "7.17.0",
        "minimum_index_compatibility_version" : "7.0.0"
      },
      "tagline" : "You Know, for Search"
    }
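
The curl call above trusts `/etc/filebeat/certs/http_ca.crt` directly, while the Filebeat log further down shows Filebeat trusting the cluster through `ca_trusted_fingerprint`. To confirm both refer to the same CA, a sketch like the following computes the SHA-256 fingerprint of the first certificate in the file. It assumes the `ca_trusted_fingerprint` value is the hex SHA-256 digest of the DER-encoded CA certificate (the same digest `openssl x509 -fingerprint -sha256` prints, colon-separated); the helper names are hypothetical.

```python
import hashlib
import ssl

BEGIN = "-----BEGIN CERTIFICATE-----"
END = "-----END CERTIFICATE-----"


def first_cert_fingerprint(pem_text: str) -> str:
    """Return the lowercase hex SHA-256 digest of the first PEM certificate's DER bytes."""
    start = pem_text.index(BEGIN)  # raises ValueError if the text holds no certificate
    end = pem_text.index(END, start) + len(END)
    der = ssl.PEM_cert_to_DER_cert(pem_text[start:end])
    return hashlib.sha256(der).hexdigest()


def _normalize(fingerprint: str) -> str:
    """Drop colons and whitespace and lowercase, so openssl-style output compares equal."""
    return fingerprint.replace(":", "").strip().lower()


def same_fingerprint(a: str, b: str) -> bool:
    """Compare two fingerprints ignoring colons and letter case."""
    return _normalize(a) == _normalize(b)


# Example (not run here):
# with open("/etc/filebeat/certs/http_ca.crt", encoding="ascii") as f:
#     print(first_cert_fingerprint(f.read()))
```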

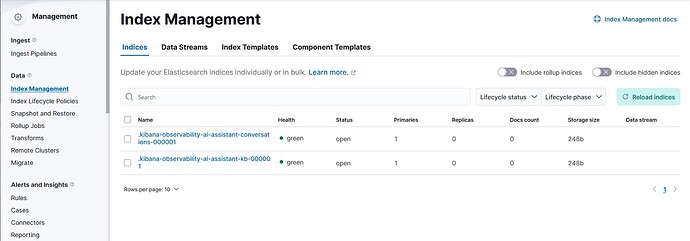

My Filebeat service is running on the server, but it does not create an index in Elasticsearch.
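
One thing worth ruling out: with default settings, Filebeat 8.x writes to a data stream named `filebeat-<version>` rather than to a plain index, and the data stream's backing indices are hidden `.ds-filebeat-*` indices that index listings often omit unless hidden indices are included. The sketch below, assuming default `filebeat.yml` output settings and using only the Python standard library, calls the Elasticsearch `GET _data_stream/filebeat-*` API with the same host, user and CA file as the curl check; the helper names are illustrative.

```python
import base64
import json
import ssl
import urllib.request
from typing import Dict, List


def backing_indices(body: dict) -> Dict[str, List[str]]:
    """Map each data stream in a GET _data_stream response to its backing index names."""
    return {
        ds["name"]: [idx["index_name"] for idx in ds.get("indices", [])]
        for ds in body.get("data_streams", [])
    }


def get_data_streams(host: str, user: str, password: str, cafile: str,
                     pattern: str = "filebeat-*") -> dict:
    """GET {host}/_data_stream/{pattern} with basic auth, trusting only the given CA file."""
    context = ssl.create_default_context(cafile=cafile)
    request = urllib.request.Request(f"{host}/_data_stream/{pattern}")
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    request.add_header("Authorization", f"Basic {token}")
    with urllib.request.urlopen(request, context=context, timeout=10) as response:
        return json.load(response)


# Example (not run here), reusing the host and CA path from the curl check:
# import getpass
# body = get_data_streams("https://172.10.110.29:9200", "elastic",
#                         getpass.getpass(), "/etc/filebeat/certs/http_ca.crt")
# print(backing_indices(body) or "no filebeat-* data streams")
```

An empty result would suggest no Filebeat data stream has been created yet, which points back at the inputs or the connection rather than at index visibility.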

This is part of my Filebeat log:

    {"log.level":"warn","@timestamp":"2023-11-04T13:34:11.144+0330","log.logger":"add_cloud_metadata","log.origin":{"file.name":"add_cloud_metadata/provider_aws_ec2.go","file.line":81},"message":"read token request for getting IMDSv2 token returns empty: Put \"http://169.254.169.254/latest/api/token\": context deadline exceeded (Client.Timeout exceeded while awaiting headers). No token in the metadata request will be used.","service.name":"filebeat","ecs.version":"1.6.0"}
    {"log.level":"warn","@timestamp":"2023-11-04T13:34:11.146+0330","log.logger":"cfgwarn","log.origin":{"file.name":"tlscommon/config.go","file.line":102},"message":"DEPRECATED: Treating the CommonName field on X.509 certificates as a host name when no Subject Alternative Names are present is going to be removed. Please update your certificates if needed. Will be removed in version: 8.0.0","service.name":"filebeat","ecs.version":"1.6.0"}
    {"log.level":"info","@timestamp":"2023-11-04T13:34:11.146+0330","log.logger":"esclientleg","log.origin":{"file.name":"eslegclient/connection.go","file.line":108},"message":"elasticsearch url: https://172.10.110.29:9200","service.name":"filebeat","ecs.version":"1.6.0"}
    {"log.level":"info","@timestamp":"2023-11-04T13:34:11.168+0330","log.logger":"tls","log.origin":{"file.name":"tlscommon/tls_config.go","file.line":162},"message":"'ca_trusted_fingerprint' set, looking for matching fingerprints","service.name":"filebeat","ecs.version":"1.6.0"}
    {"log.level":"info","@timestamp":"2023-11-04T13:34:11.168+0330","log.logger":"tls","log.origin":{"file.name":"tlscommon/tls_config.go","file.line":173},"message":"CA certificate matching 'ca_trusted_fingerprint' found, adding it to 'certificate_authorities'","service.name":"filebeat","ecs.version":"1.6.0"}
    {"log.level":"info","@timestamp":"2023-11-04T13:34:11.190+0330","log.logger":"tls","log.origin":{"file.name":"tlscommon/tls_config.go","file.line":162},"message":"'ca_trusted_fingerprint' set, looking for matching fingerprints","service.name":"filebeat","ecs.version":"1.6.0"}
    {"log.level":"info","@timestamp":"2023-11-04T13:34:11.191+0330","log.logger":"tls","log.origin":{"file.name":"tlscommon/tls_config.go","file.line":173},"message":"CA certificate matching 'ca_trusted_fingerprint' found, adding it to 'certificate_authorities'","service.name":"filebeat","ecs.version":"1.6.0"}
    {"log.level":"info","@timestamp":"2023-11-04T13:34:11.194+0330","log.logger":"esclientleg","log.origin":{"file.name":"eslegclient/connection.go","file.line":291},"message":"Attempting to connect to Elasticsearch version 8.10.4","service.name":"filebeat","ecs.version":"1.6.0"}
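
The excerpt contains only info and warn entries and stops at "Attempting to connect to Elasticsearch version 8.10.4", so an error explaining the missing index, if there is one, would appear later in the file. A small filter can surface just the entries that are not routine. This sketch assumes one JSON object per line with the flat `log.level`, `@timestamp`, `log.logger` and `message` keys seen above; `problems` is a hypothetical helper name.

```python
import json
from typing import Iterable, Iterator, Tuple

# Levels treated as routine; entries at any other level (or with no level) are reported.
ROUTINE_LEVELS = {"debug", "info"}


def problems(lines: Iterable[str]) -> Iterator[Tuple[str, str, str]]:
    """Yield (timestamp, logger, message) for Filebeat JSON log entries not at debug/info."""
    for line in lines:
        line = line.strip()
        if not line:
            continue
        try:
            entry = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip lines that are not JSON (e.g. wrapped or truncated output)
        if not isinstance(entry, dict):
            continue
        if entry.get("log.level") not in ROUTINE_LEVELS:
            yield (
                entry.get("@timestamp", ""),
                entry.get("log.logger", ""),
                entry.get("message", ""),
            )


# Example (not run here): read log lines from files named on the command line, or stdin.
# import fileinput
# for ts, logger, message in problems(fileinput.input()):
#     print(f"{ts} [{logger}] {message}")
```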