Regardless of traffic, profiling a single query against the two nodes reproduces the same situation.

Here is the query:

    POST myindex/_search
    {
      "_source": false,
      "profile": true,
      "docvalue_fields": [
        "v_spu_id",
        "brand_store_sn",
        "goods_cate_id_1",
        "goods_cate_id_2",
        "goods_cate_id_3",
        "product_tags_pdc",
        "product_tags_ptp",
        "product_tags_vde",
        "product_tags_usual_ptp",
        "spu_vip_self",
        "goods_inner_cate_id_3"
      ],
      "stored_fields": "_none_",
      "from": 0,
      "size": 270,
      "track_total_hits": true,
      "query": {
        "function_score": {
          "query": {
            "bool": {
              "filter": [
                {
                  "bool": {
                    "should": [
                      {
                        "bool": {
                          "must": [
                            {
                              "terms": {
                                "product_props": [
                                  "学前儿童_V",
                                  "幼儿_V",
                                  "男女童_V",
                                  "儿童_V",
                                  "学龄前儿童_V",
                                  "中童_V",
                                  "低龄儿童_V",
                                  "学童_V",
                                  "大童_V",
                                  "小男童_V",
                                  "女童_V",
                                  "婴童_V",
                                  "中大童_V",
                                  "早期儿童_V",
                                  "小童_V"
                                ]
                              }
                            },
                            {
                              "terms": {
                                "product_props": [
                                  "贵妇_V",
                                  "中年妇女_V",
                                  "轻熟女_V",
                                  "女宝宝_V",
                                  "少女_V",
                                  "母女_V",
                                  "闺女_V",
                                  "淑女_V",
                                  "年青人女性_V",
                                  "仙女_V",
                                  "女友_V",
                                  "女王_V",
                                  "女_V",
                                  "年青人女士_V",
                                  "仕女_V",
                                  "女童_V",
                                  "年青人女子_V",
                                  "熟女_V",
                                  "年青人女人_V",
                                  "ol_V",
                                  "少妇_V",
                                  "家庭主妇_V"
                                ]
                              }
                            },
                            {
                              "terms": {
                                "product_all": [
                                  "A_帆布鞋_V",
                                  "A_雪地鞋_V",
                                  "A_工装靴_V",
                                  "A_旱冰鞋_V",
                                  "A_冰刀鞋_V",
                                  "A_皮靴_V",
                                  "A_篮球鞋_V",
                                  "A_鞋_V",
                                  "A_羽毛球鞋_V",
                                  "A_皮鞋_V",
                                  "A_小白鞋_V",
                                  "A_网球鞋_V",
                                  "A_运动鞋_V",
                                  "A_旗鞋_V",
                                  "A_乐福鞋_V",
                                  "A_饼干鞋_V",
                                  "A_舞蹈鞋_V",
                                  "A_马靴_V",
                                  "A_棉拖_V",
                                  "A_玛丽珍鞋_V",
                                  "A_棒球鞋_V",
                                  "A_解放鞋_V",
                                  "A_越野鞋_V",
                                  "A_一脚蹬_V",
                                  "A_便鞋_V",
                                  "A_乒乓球鞋_V",
                                  "A_凉拖_V",
                                  "A_短靴_V",
                                  "A_人字拖_V",
                                  "A_徒步鞋_V",
                                  "A_正装鞋_V",
                                  "A_硫化鞋_V",
                                  "A_秋鞋_V",
                                  "A_钉鞋_V",
                                  "A_阿甘鞋_V",
                                  "A_雨鞋_V",
                                  "A_猫爪鞋_V",
                                  "A_马丁靴_V",
                                  "A_军靴_V",
                                  "A_单靴_V",
                                  "A_切尔西靴_V",
                                  "A_溯溪鞋_V",
                                  "A_登山鞋_V",
                                  "A_舞鞋_V",
                                  "A_松糕鞋_V",
                                  "A_蛙鞋_V",
                                  "A_小黑鞋_V",
                                  "A_胶鞋_V",
                                  "A_罗马鞋_V",
                                  "A_长靴_V",
                                  "A_袜靴_V",
                                  "A_网鞋_V",
                                  "A_水靴_V",
                                  "A_健步鞋_V",
                                  "A_战靴_V",
                                  "A_滑板鞋_V",
                                  "A_冰鞋_V",
                                  "A_僧侣鞋_V",
                                  "A_冰球鞋_V",
                                  "A_军鞋_V",
                                  "A_德比鞋_V",
                                  "A_马丁鞋_V",
                                  "A_单鞋_V",
                                  "A_洛克鞋_V",
                                  "A_板鞋_V",
                                  "A_布鞋_V",
                                  "A_波鞋_V",
                                  "A_球鞋_V",
                                  "A_瓢鞋_V",
                                  "A_凉鞋_V",
                                  "A_保暖鞋_V",
                                  "A_豆豆鞋_V",
                                  "A_洞沿鞋_V",
                                  "A_棉鞋_V",
                                  "A_雪地靴_V",
                                  "A_灯鞋_V",
                                  "A_熊猫鞋_V",
                                  "A_草鞋_V",
                                  "A_气垫鞋_V",
                                  "A_靴_V",
                                  "A_跑步鞋_V",
                                  "A_勃肯鞋_V",
                                  "A_拖鞋_V",
                                  "A_足球鞋_V",
                                  "A_婚鞋_V",
                                  "A_渔夫鞋_V",
                                  "A_椰子鞋_V",
                                  "A_跳跃鞋_V",
                                  "A_排球鞋_V",
                                  "A_袜鞋_V"
                                ]
                              }
                            },
                            {
                              "match_all": {}
                            }
                          ]
                        }
                      }
                    ]
                  }
                }
              ]
            }
          },
          "functions": [
            {
              "field_value_factor": {
                "field": "static_score_9",
                "factor": 1,
                "missing": 1
              }
            }
          ],
          "score_mode": "first",
          "boost_mode": "replace"
        }
      }
    }

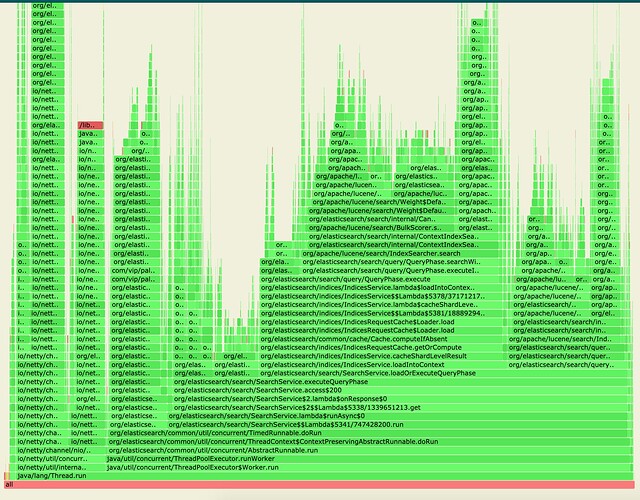

Please click the image to see the full profile output.

Profile result (the right-hand side is the healthy node).

As the profile shows, the TermInSetQuery clauses on the left (problematic) node take far more time than the same clauses on the right (healthy) node. It looks as if the problematic node has entered a bad internal state and cannot return to normal on its own.
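To quantify the gap rather than compare screenshots, one could save each profile response and total the TermInSetQuery time per node. Below is a minimal, hypothetical helper using only the Python standard library. It assumes the usual profile layout (`profile.shards[].searches[].query[]`, where each query node has `type`, `time_in_nanos` and optional `children`) and that each shard `id` has the form `[nodeId][index][shard]`. The function name and the sample numbers are made up for illustration; they are not taken from our actual profile.

```python
from collections import defaultdict


def term_in_set_time_by_node(profile_response, query_type="TermInSetQuery"):
    """Sum time_in_nanos of every `query_type` node in a profile response, per node ID.

    Hypothetical helper: assumes the profile tree layout described above.
    """
    totals = defaultdict(int)

    def walk(node, node_id):
        if node.get("type") == query_type:
            # Profile timings include child timings, so stop descending here
            # to avoid double counting a nested node of the same type.
            totals[node_id] += node.get("time_in_nanos", 0)
            return
        for child in node.get("children", []):
            walk(child, node_id)

    for shard in profile_response.get("profile", {}).get("shards", []):
        # Assumed shard id format: "[nodeId][indexName][shardNumber]".
        node_id = shard["id"].split("]")[0].lstrip("[")
        for search in shard.get("searches", []):
            for root in search.get("query", []):
                walk(root, node_id)
    return dict(totals)


# Made-up sample shaped like a profile response; the numbers are illustrative only.
SAMPLE_PROFILE = {
    "profile": {
        "shards": [
            {
                "id": "[nodeA][myindex][0]",
                "searches": [{"query": [{
                    "type": "FunctionScoreQuery",
                    "time_in_nanos": 900,
                    "children": [{
                        "type": "BooleanQuery",
                        "time_in_nanos": 800,
                        "children": [
                            {"type": "TermInSetQuery", "time_in_nanos": 500},
                            {"type": "TermInSetQuery", "time_in_nanos": 200},
                        ],
                    }],
                }]}],
            },
            {
                "id": "[nodeB][myindex][1]",
                "searches": [{"query": [{"type": "TermInSetQuery", "time_in_nanos": 30}]}],
            },
        ]
    }
}

if __name__ == "__main__":
    print(term_in_set_time_by_node(SAMPLE_PROFILE))
```

With a real response saved to a file, the same function can be applied to the result of `json.load` on that file, once per node, and the two totals compared directly.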