Hi,

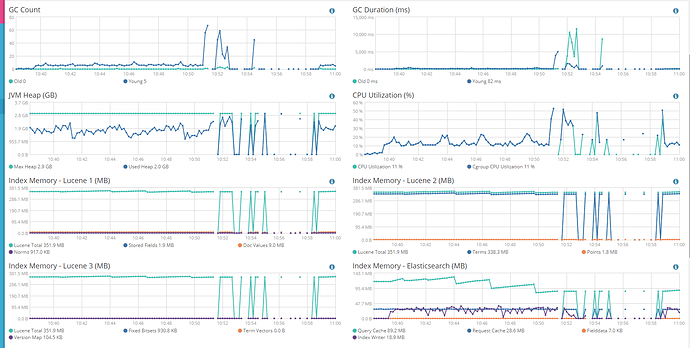

We recently deployed new search functionality, and since moving it to production our Elasticsearch (ES) server has been going down every other day. I have now been able to reproduce the problem on our QA server.

When we index 30k records while searching at the same time, the cluster status turns RED. I then have to restart the service or wait 10-12 minutes for it to recover.

I allocated 3 GB of JVM heap on our QA server, while the production server has a 20 GB heap. I'm not sure how to resolve the issue. Any help is greatly appreciated.

Information from log files:

```
[2018-08-26T11:15:17,449][INFO ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235248] overhead, spent [586ms] collecting in the last [1.4s]
[2018-08-26T11:15:18,603][WARN ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235249] overhead, spent [670ms] collecting in the last [1.1s]
[2018-08-26T11:16:01,873][WARN ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][old][1235250][136] duration [42.4s], collections [2]/[43.3s], total [42.4s]/[10.1m], memory [2.5gb]->[1.3gb]/[2.9gb], all_pools {[young] [2.9mb]->[13.9mb]/[532.5mb]}{[survivor] [65.6mb]->[0b]/[66.5mb]}{[old] [1.9gb]->[1.3gb]/[2.3gb]}
[2018-08-26T11:16:01,873][WARN ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235250] overhead, spent [42.9s] collecting in the last [43.3s]
[2018-08-26T11:16:01,907][ERROR][o.e.x.m.c.i.IndexStatsCollector] [vtamsweb2] collector [index-stats-collector] timed out when collecting data
[2018-08-26T11:16:11,198][INFO ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235259] overhead, spent [262ms] collecting in the last [1s]
[2018-08-26T11:16:14,595][INFO ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235262] overhead, spent [350ms] collecting in the last [1.2s]
[2018-08-26T11:16:16,688][INFO ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235264] overhead, spent [271ms] collecting in the last [1s]
[2018-08-26T11:16:18,825][INFO ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235266] overhead, spent [278ms] collecting in the last [1s]
[2018-08-26T11:16:23,537][WARN ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235267] overhead, spent [3.9s] collecting in the last [4.7s]
[2018-08-26T11:16:25,830][INFO ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235269] overhead, spent [308ms] collecting in the last [1.2s]
[2018-08-26T11:16:29,341][WARN ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235270] overhead, spent [3.3s] collecting in the last [3.5s]
[2018-08-26T11:16:35,378][ERROR][o.e.x.m.c.i.IndexStatsCollector] [vtamsweb2] collector [index-stats-collector] timed out when collecting data
[2018-08-26T11:16:35,800][WARN ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235273] overhead, spent [3.5s] collecting in the last [4.4s]
[2018-08-26T11:16:36,814][INFO ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235274] overhead, spent [274ms] collecting in the last [1s]
[2018-08-26T11:16:40,621][WARN ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235275] overhead, spent [3.4s] collecting in the last [3.8s]
[2018-08-26T11:16:45,254][WARN ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235276] overhead, spent [4s] collecting in the last [4.6s]
[2018-08-26T11:16:45,691][ERROR][o.e.x.m.c.c.ClusterStatsCollector] [vtamsweb2] collector [cluster-stats-collector] timed out when collecting data
[2018-08-26T11:16:49,749][WARN ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235277] overhead, spent [4s] collecting in the last [4.4s]
[2018-08-26T11:16:54,102][WARN ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235278] overhead, spent [3.9s] collecting in the last [4.3s]
[2018-08-26T11:16:58,845][WARN ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235279] overhead, spent [4.2s] collecting in the last [4.7s]
[2018-08-26T11:17:03,510][WARN ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235280] overhead, spent [4.1s] collecting in the last [4.6s]
[2018-08-26T11:17:08,638][WARN ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235282] overhead, spent [3.6s] collecting in the last [3.7s]
[2018-08-26T11:17:13,138][WARN ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235284] overhead, spent [3.4s] collecting in the last [3.5s]
[2018-08-26T11:17:18,837][WARN ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235286] overhead, spent [3.6s] collecting in the last [4.4s]
[2018-08-26T11:17:23,993][WARN ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235288] overhead, spent [3.6s] collecting in the last [4.1s]
[2018-08-26T11:17:29,117][WARN ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235289] overhead, spent [4.3s] collecting in the last [5.1s]
[2018-08-26T11:17:34,097][WARN ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235290] overhead, spent [4.1s] collecting in the last [4.9s]
[2018-08-26T11:17:39,364][WARN ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235291] overhead, spent [4.4s] collecting in the last [5.2s]
[2018-08-26T11:17:44,131][WARN ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235292] overhead, spent [4.2s] collecting in the last [4.7s]
[2018-08-26T11:17:49,878][WARN ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235294] overhead, spent [3.7s] collecting in the last [4.5s]
[2018-08-26T11:17:55,494][WARN ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235296] overhead, spent [4s] collecting in the last [4.5s]
[2018-08-26T11:18:00,503][WARN ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235298] overhead, spent [3.2s] collecting in the last [3.9s]
[2018-08-26T11:18:06,063][WARN ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235300] overhead, spent [3.8s] collecting in the last [4.4s]
[2018-08-26T11:18:07,389][INFO ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235301] overhead, spent [410ms] collecting in the last [1s]
[2018-08-26T11:18:11,483][WARN ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235302] overhead, spent [3.8s] collecting in the last [4.3s]
[2018-08-26T11:18:16,199][WARN ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235303] overhead, spent [4.1s] collecting in the last [4.7s]
[2018-08-26T11:18:20,183][WARN ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235304] overhead, spent [3.6s] collecting in the last [3.9s]
[2018-08-26T11:18:24,100][WARN ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235305] overhead, spent [3.2s] collecting in the last [3.9s]
[2018-08-26T11:18:25,146][INFO ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235306] overhead, spent [467ms] collecting in the last [1s]
[2018-08-26T11:18:28,267][WARN ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235307] overhead, spent [2.8s] collecting in the last [3.1s]
[2018-08-26T11:18:29,375][INFO ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235308] overhead, spent [434ms] collecting in the last [1.1s]
```
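To put numbers on the log above, here is a minimal Python sketch that parses the `JvmGcMonitorService` lines of the form `overhead, spent [X] collecting in the last [Y]` and reports what fraction of each window went to garbage collection. It assumes the log format is exactly as pasted here (timestamp in the first bracket, durations suffixed with `ms`, `s` or `m`); the function names `to_seconds` and `gc_overhead` are my own, not part of Elasticsearch. It is only a rough diagnostic, but a fraction that stays near 100% (for example the `[42.9s]` out of `[43.3s]` entry) suggests the node is spending almost all of its time collecting rather than serving requests.

```python
import re

# Matches overhead lines such as:
# [2018-08-26T11:15:18,603][WARN ][o.e.m.j.JvmGcMonitorService] ... overhead, spent [670ms] collecting in the last [1.1s]
# Assumption: durations use only the ms / s / m suffixes seen in this log.
OVERHEAD_RE = re.compile(
    r"^\[(?P<ts>[^\]]+)\].*overhead, spent \[(?P<spent>[\d.]+)(?P<su>ms|s|m)\] "
    r"collecting in the last \[(?P<window>[\d.]+)(?P<wu>ms|s|m)\]"
)

# Conversion factors from each duration suffix to seconds.
_UNIT_TO_SECONDS = {"ms": 0.001, "s": 1.0, "m": 60.0}


def to_seconds(value: str, unit: str) -> float:
    """Convert a numeric string plus a duration suffix into seconds."""
    return float(value) * _UNIT_TO_SECONDS[unit]


def gc_overhead(lines):
    """Yield (timestamp, fraction of the window spent in GC) for each overhead line.

    Lines that are not GC overhead reports (e.g. collector timeouts) are skipped.
    """
    for line in lines:
        m = OVERHEAD_RE.search(line)
        if m is None:
            continue
        spent = to_seconds(m["spent"], m["su"])
        window = to_seconds(m["window"], m["wu"])
        yield m["ts"], spent / window


# Two lines copied from the log above, plus one non-GC line that should be ignored.
sample = [
    "[2018-08-26T11:15:17,449][INFO ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235248] overhead, spent [586ms] collecting in the last [1.4s]",
    "[2018-08-26T11:16:01,873][WARN ][o.e.m.j.JvmGcMonitorService] [vtamsweb2] [gc][1235250] overhead, spent [42.9s] collecting in the last [43.3s]",
    "[2018-08-26T11:16:01,907][ERROR][o.e.x.m.c.i.IndexStatsCollector] [vtamsweb2] collector [index-stats-collector] timed out when collecting data",
]

for ts, frac in gc_overhead(sample):
    print(f"{ts}  {frac:.0%} of wall time in GC")
# 2018-08-26T11:15:17,449  42% of wall time in GC
# 2018-08-26T11:16:01,873  99% of wall time in GC
```

Reading the whole log file line by line (e.g. `with open(path) as f: gc_overhead(f)`) works the same way, since the function accepts any iterable of strings.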