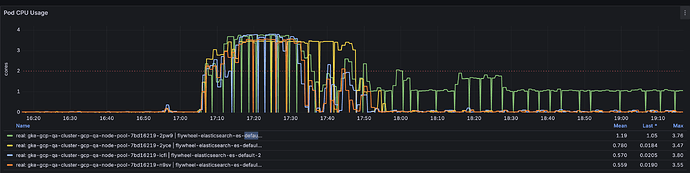

We are running an Elasticsearch 9.1.2 cluster with four nodes that is displaying some erratic behavior when under indexing load. Initially when we started indexing data, all four nodes (each a separate Kubernetes pod) were actively indexing. However, for the last hour-plus, only the master node (green line) is very active CPU-wise, while the non-master nodes are idle.

In the process that is trying to perform the indexing, I am seeing near-constant read timeout exceptions like this:

Traceback (most recent call last):

File "/venv/lib/python3.12/site-packages/urllib3/connectionpool.py", line 468, in _make_request

six.raise_from(e, None)

File "<string>", line 3, in raise_from

File "/venv/lib/python3.12/site-packages/urllib3/connectionpool.py", line 463, in _make_request

httplib_response = conn.getresponse()

^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.12/http/client.py", line 1430, in getresponse

response.begin()

File "/usr/local/lib/python3.12/http/client.py", line 331, in begin

version, status, reason = self._read_status()

^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.12/http/client.py", line 292, in _read_status

line = str(self.fp.readline(_MAXLINE + 1), "iso-8859-1")

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.12/socket.py", line 720, in readinto

return self._sock.recv_into(b)

^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.12/ssl.py", line 1251, in recv_into

return self.read(nbytes, buffer)

^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.12/ssl.py", line 1103, in read

return self._sslobj.read(len, buffer)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

TimeoutError: The read operation timed out

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/venv/lib/python3.12/site-packages/elastic_transport/_node/_http_urllib3.py", line 167, in perform_request

response = self.pool.urlopen(

^^^^^^^^^^^^^^^^^^

File "/venv/lib/python3.12/site-packages/urllib3/connectionpool.py", line 802, in urlopen

retries = retries.increment(

^^^^^^^^^^^^^^^^^^

File "/venv/lib/python3.12/site-packages/urllib3/util/retry.py", line 527, in increment

raise six.reraise(type(error), error, _stacktrace)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/venv/lib/python3.12/site-packages/urllib3/packages/six.py", line 770, in reraise

raise value

File "/venv/lib/python3.12/site-packages/urllib3/connectionpool.py", line 716, in urlopen

httplib_response = self._make_request(

^^^^^^^^^^^^^^^^^^^

File "/venv/lib/python3.12/site-packages/urllib3/connectionpool.py", line 470, in _make_request

self._raise_timeout(err=e, url=url, timeout_value=read_timeout)

File "/venv/lib/python3.12/site-packages/urllib3/connectionpool.py", line 358, in _raise_timeout

raise ReadTimeoutError(

urllib3.exceptions.ReadTimeoutError: HTTPSConnectionPool(host='flywheel-elasticsearch-es-http', port=9200): Read timed out. (read timeout=300)

We are using version 9.1.0 of the python-elasticsearch client. Our code that is causing the indexing activity is mostly calls to helpers.streaming_bulk containing up to 300 indexing actions each.

Some other info about the cluster that might be helpful:

Cat nodes output:

ip heap.percent ram.percent cpu load_1m load_5m load_15m node.role master name

10.1.4.33 32 86 12 0.21 0.51 0.81 cdfhilmrstw - flywheel-elasticsearch-es-default-1

10.1.20.71 56 90 37 1.64 1.54 1.55 cdfhilmrstw * flywheel-elasticsearch-es-default-3

10.1.21.5 42 92 11 0.22 0.31 0.59 cdfhilmrstw - flywheel-elasticsearch-es-default-0

10.1.2.109 60 97 6 0.32 0.29 0.25 cdfhilmrstw - flywheel-elasticsearch-es-default-2

Cat shards output (why is one primary shard so much larger than the rest, when they all have about the same number of documents?):

index shard prirep state docs store dataset ip node

.ds-.logs-elasticsearch.deprecation-default-2025.09.03-000001 0 r STARTED 1 10.6kb 10.6kb 10.1.2.109 flywheel-elasticsearch-es-default-2

.ds-.logs-elasticsearch.deprecation-default-2025.09.03-000001 0 p STARTED 1 10.6kb 10.6kb 10.1.4.33 flywheel-elasticsearch-es-default-1

.ds-ilm-history-7-2025.09.03-000001 0 r STARTED 3 9.8kb 9.8kb 10.1.2.109 flywheel-elasticsearch-es-default-2

.ds-ilm-history-7-2025.09.03-000001 0 p STARTED 3 9.8kb 9.8kb 10.1.21.5 flywheel-elasticsearch-es-default-0

.ds-ilm-history-7-2025.09.10-000002 0 r STARTED 0 249b 249b 10.1.20.71 flywheel-elasticsearch-es-default-3

.ds-ilm-history-7-2025.09.10-000002 0 p STARTED 0 249b 249b 10.1.21.5 flywheel-elasticsearch-es-default-0

data_explorer_fields 0 p STARTED 0 249b 249b 10.1.21.5 flywheel-elasticsearch-es-default-0

.security-7 0 p STARTED 29 52kb 52kb 10.1.20.71 flywheel-elasticsearch-es-default-3

.security-7 0 r STARTED 29 52kb 52kb 10.1.4.33 flywheel-elasticsearch-es-default-1

data_explorer_search_20250916t170522z 0 p STARTED 30394 1.8gb 1.8gb 10.1.2.109 flywheel-elasticsearch-es-default-2

data_explorer_search_20250916t170522z 1 p STARTED 30701 1.6gb 1.6gb 10.1.21.5 flywheel-elasticsearch-es-default-0

data_explorer_search_20250916t170522z 2 p STARTED 30471 1.6gb 1.6gb 10.1.20.71 flywheel-elasticsearch-es-default-3

data_explorer_search_20250916t170522z 3 p STARTED 30963 1.6gb 1.6gb 10.1.4.33 flywheel-elasticsearch-es-default-1

data_explorer_search_20250916t170522z 4 p STARTED 30639 1.6gb 1.6gb 10.1.2.109 flywheel-elasticsearch-es-default-2

data_explorer_search_20250916t170522z 5 p STARTED 30728 1.6gb 1.6gb 10.1.21.5 flywheel-elasticsearch-es-default-0

data_explorer_search_20250916t170522z 6 p STARTED 30507 11.5gb 11.5gb 10.1.20.71 flywheel-elasticsearch-es-default-3

data_explorer_search_20250916t170522z 7 p STARTED 30715 1.6gb 1.6gb 10.1.4.33 flywheel-elasticsearch-es-default-1

data_explorer_search_20250916t170522z 8 p STARTED 30558 1.6gb 1.6gb 10.1.21.5 flywheel-elasticsearch-es-default-0

data_explorer_search_20250916t170522z 9 p STARTED 30418 1.6gb 1.6gb 10.1.2.109 flywheel-elasticsearch-es-default-2

data_explorer_search_20250916t170522z 10 p STARTED 30940 1.6gb 1.6gb 10.1.20.71 flywheel-elasticsearch-es-default-3

data_explorer_search_20250916t170522z 11 p STARTED 30420 1.6gb 1.6gb 10.1.4.33 flywheel-elasticsearch-es-default-1

Hot threads info:

::: {flywheel-elasticsearch-es-default-0}{mC6Ro8vATY2ZD9yrU0siRg}{o5B-wOyFSPWOMFREtEfQwQ}{flywheel-elasticsearch-es-default-0}{10.1.21.5}{10.1.21.5:9300}{cdfhilmrstw}{9.1.2}{8000099-9033000}{ml.allocated_processors_double=4.0, ml.machine_memory=12884901888, ml.config_version=12.0.0, ml.max_jvm_size=6442450944, ml.allocated_processors=4, xpack.installed=true, transform.config_version=10.0.0, k8s_node_name=gke-gcp-qa-cluster-gcp-qa-node-pool-7bd16219-n9sv}

Hot threads at 2025-09-16T20:47:19.479Z, interval=500ms, busiestThreads=3, ignoreIdleThreads=true:

::: {flywheel-elasticsearch-es-default-1}{-HSrS5F_T_OxB7QG_OSNUA}{m1sxiKTsStu2cb9Dk2TxaQ}{flywheel-elasticsearch-es-default-1}{10.1.4.33}{10.1.4.33:9300}{cdfhilmrstw}{9.1.2}{8000099-9033000}{ml.allocated_processors=4, ml.max_jvm_size=6442450944, ml.config_version=12.0.0, ml.machine_memory=12884901888, ml.allocated_processors_double=4.0, transform.config_version=10.0.0, xpack.installed=true, k8s_node_name=gke-gcp-qa-cluster-gcp-qa-node-pool-7bd16219-2yce}

Hot threads at 2025-09-16T20:47:19.480Z, interval=500ms, busiestThreads=3, ignoreIdleThreads=true:

100.0% [cpu=0.0%, other=100.0%] (500ms out of 500ms) cpu usage by thread 'elasticsearch[readiness-service]'

10/10 snapshots sharing following 7 elements

java.base@24/sun.nio.ch.Net.accept(Native Method)

java.base@24/sun.nio.ch.ServerSocketChannelImpl.implAccept(ServerSocketChannelImpl.java:424)

java.base@24/sun.nio.ch.ServerSocketChannelImpl.accept(ServerSocketChannelImpl.java:391)

app/org.elasticsearch.server@9.1.2/org.elasticsearch.readiness.ReadinessService.lambda$startListener$0(ReadinessService.java:176)

app/org.elasticsearch.server@9.1.2/org.elasticsearch.readiness.ReadinessService$$Lambda/0x00000000a74c20c0.run(Unknown Source)

java.base@24/java.lang.Thread.runWith(Thread.java:1460)

java.base@24/java.lang.Thread.run(Thread.java:1447)

::: {flywheel-elasticsearch-es-default-2}{HQkFKLjjQESUgxT6VubUWw}{6acuA91ZRP2WHA4j5D08IA}{flywheel-elasticsearch-es-default-2}{10.1.2.109}{10.1.2.109:9300}{cdfhilmrstw}{9.1.2}{8000099-9033000}{ml.allocated_processors=4, ml.max_jvm_size=6442450944, ml.config_version=12.0.0, ml.machine_memory=12884901888, ml.allocated_processors_double=4.0, transform.config_version=10.0.0, xpack.installed=true, k8s_node_name=gke-gcp-qa-cluster-gcp-qa-node-pool-7bd16219-icfi}

Hot threads at 2025-09-16T20:47:19.481Z, interval=500ms, busiestThreads=3, ignoreIdleThreads=true:

100.0% [cpu=0.1%, other=99.9%] (500ms out of 500ms) cpu usage by thread 'elasticsearch[readiness-service]'

10/10 snapshots sharing following 7 elements

java.base@24/sun.nio.ch.Net.accept(Native Method)

java.base@24/sun.nio.ch.ServerSocketChannelImpl.implAccept(ServerSocketChannelImpl.java:424)

java.base@24/sun.nio.ch.ServerSocketChannelImpl.accept(ServerSocketChannelImpl.java:391)

app/org.elasticsearch.server@9.1.2/org.elasticsearch.readiness.ReadinessService.lambda$startListener$0(ReadinessService.java:176)

app/org.elasticsearch.server@9.1.2/org.elasticsearch.readiness.ReadinessService$$Lambda/0x000000006f4be000.run(Unknown Source)

java.base@24/java.lang.Thread.runWith(Thread.java:1460)

java.base@24/java.lang.Thread.run(Thread.java:1447)

::: {flywheel-elasticsearch-es-default-3}{DkwriEhsSHa91NYAm75lUg}{ekY7bC8uT9aHHcUtj717dA}{flywheel-elasticsearch-es-default-3}{10.1.20.71}{10.1.20.71:9300}{cdfhilmrstw}{9.1.2}{8000099-9033000}{ml.allocated_processors=4, ml.max_jvm_size=6442450944, ml.config_version=12.0.0, ml.machine_memory=12884901888, ml.allocated_processors_double=4.0, transform.config_version=10.0.0, xpack.installed=true, k8s_node_name=gke-gcp-qa-cluster-gcp-qa-node-pool-7bd16219-2pw9}

Hot threads at 2025-09-16T20:47:19.480Z, interval=500ms, busiestThreads=3, ignoreIdleThreads=true:

100.3% [cpu=100.3%, other=0.0%] (501.4ms out of 500ms) cpu usage by thread 'elasticsearch[flywheel-elasticsearch-es-default-3][flush][T#17]'

10/10 snapshots sharing following 40 elements

app/org.apache.lucene.core@10.2.2/org.apache.lucene.util.BytesRefHash$1$1.buildHistogram(BytesRefHash.java:170)

app/org.apache.lucene.core@10.2.2/org.apache.lucene.util.MSBRadixSorter.computeCommonPrefixLengthAndBuildHistogramPart2(MSBRadixSorter.java:267)

app/org.apache.lucene.core@10.2.2/org.apache.lucene.util.MSBRadixSorter.computeCommonPrefixLengthAndBuildHistogramPart1(MSBRadixSorter.java:257)

app/org.apache.lucene.core@10.2.2/org.apache.lucene.util.MSBRadixSorter.computeCommonPrefixLengthAndBuildHistogram(MSBRadixSorter.java:220)

app/org.apache.lucene.core@10.2.2/org.apache.lucene.util.MSBRadixSorter.radixSort(MSBRadixSorter.java:157)

app/org.apache.lucene.core@10.2.2/org.apache.lucene.util.MSBRadixSorter.sort(MSBRadixSorter.java:136)

app/org.apache.lucene.core@10.2.2/org.apache.lucene.util.MSBRadixSorter.sort(MSBRadixSorter.java:129)

app/org.apache.lucene.core@10.2.2/org.apache.lucene.util.StringSorter.sort(StringSorter.java:54)

app/org.apache.lucene.core@10.2.2/org.apache.lucene.util.BytesRefHash.sort(BytesRefHash.java:216)

app/org.apache.lucene.core@10.2.2/org.apache.lucene.index.TermsHashPerField.sortTerms(TermsHashPerField.java:114)

app/org.apache.lucene.core@10.2.2/org.apache.lucene.index.FreqProxTermsWriter.flush(FreqProxTermsWriter.java:97)

app/org.apache.lucene.core@10.2.2/org.apache.lucene.index.IndexingChain.flush(IndexingChain.java:342)

app/org.apache.lucene.core@10.2.2/org.apache.lucene.index.DocumentsWriterPerThread.flush(DocumentsWriterPerThread.java:456)

app/org.apache.lucene.core@10.2.2/org.apache.lucene.index.DocumentsWriter.doFlush(DocumentsWriter.java:502)

app/org.apache.lucene.core@10.2.2/org.apache.lucene.index.DocumentsWriter.maybeFlush(DocumentsWriter.java:456)

app/org.apache.lucene.core@10.2.2/org.apache.lucene.index.DocumentsWriter.flushAllThreads(DocumentsWriter.java:649)

app/org.apache.lucene.core@10.2.2/org.apache.lucene.index.IndexWriter.getReader(IndexWriter.java:579)

app/org.apache.lucene.core@10.2.2/org.apache.lucene.index.StandardDirectoryReader.doOpenFromWriter(StandardDirectoryReader.java:382)

app/org.apache.lucene.core@10.2.2/org.apache.lucene.index.StandardDirectoryReader.doOpenIfChanged(StandardDirectoryReader.java:356)

app/org.apache.lucene.core@10.2.2/org.apache.lucene.index.StandardDirectoryReader.doOpenIfChanged(StandardDirectoryReader.java:346)

app/org.apache.lucene.core@10.2.2/org.apache.lucene.index.FilterDirectoryReader.doOpenIfChanged(FilterDirectoryReader.java:112)

app/org.apache.lucene.core@10.2.2/org.apache.lucene.index.DirectoryReader.openIfChanged(DirectoryReader.java:170)

app/org.elasticsearch.server@9.1.2/org.elasticsearch.index.engine.ElasticsearchReaderManager.refreshIfNeeded(ElasticsearchReaderManager.java:49)

app/org.elasticsearch.server@9.1.2/org.elasticsearch.index.engine.ElasticsearchReaderManager.refreshIfNeeded(ElasticsearchReaderManager.java:28)

app/org.apache.lucene.core@10.2.2/org.apache.lucene.search.ReferenceManager.doMaybeRefresh(ReferenceManager.java:167)

app/org.apache.lucene.core@10.2.2/org.apache.lucene.search.ReferenceManager.maybeRefreshBlocking(ReferenceManager.java:240)

app/org.elasticsearch.server@9.1.2/org.elasticsearch.index.engine.InternalEngine.refresh(InternalEngine.java:2072)

app/org.elasticsearch.server@9.1.2/org.elasticsearch.index.engine.InternalEngine.flushHoldingLock(InternalEngine.java:2277)

app/org.elasticsearch.server@9.1.2/org.elasticsearch.index.engine.Engine.flush(Engine.java:1423)

app/org.elasticsearch.server@9.1.2/org.elasticsearch.index.shard.IndexShard.lambda$flush$12(IndexShard.java:1536)

app/org.elasticsearch.server@9.1.2/org.elasticsearch.index.shard.IndexShard$$Lambda/0x000000003d542940.accept(Unknown Source)

app/org.elasticsearch.server@9.1.2/org.elasticsearch.action.ActionListener.run(ActionListener.java:465)

app/org.elasticsearch.server@9.1.2/org.elasticsearch.index.shard.IndexShard.flush(IndexShard.java:1528)

app/org.elasticsearch.server@9.1.2/org.elasticsearch.index.shard.IndexShard.lambda$afterWriteOperation$51(IndexShard.java:4151)

app/org.elasticsearch.server@9.1.2/org.elasticsearch.index.shard.IndexShard$$Lambda/0x000000003d5819d8.run(Unknown Source)

app/org.elasticsearch.server@9.1.2/org.elasticsearch.common.util.concurrent.ThreadContext$ContextPreservingRunnable.run(ThreadContext.java:977)

java.base@24/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1095)

java.base@24/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:619)

java.base@24/java.lang.Thread.runWith(Thread.java:1460)

java.base@24/java.lang.Thread.run(Thread.java:1447)

I have been seeing this issue for a long time and had hoped that upgrading to ES 9 would help alleviate it, but this seems to not be the case. Please let me know if I should provide any other info to help diagnose this. Thanks in advance!