Hello,

We have now signed up for Elastic Cloud and have started setting up integrations for IIS, which collect access and error logs as well as IIS metrics.

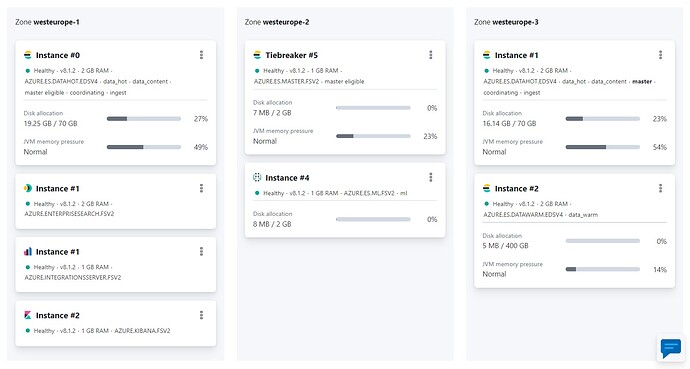

Once we had added about 5 of these integrations, Elastic started crashing: JVM memory pressure was high and the servers were constantly restarting. On the recommendation of Elastic's sales team, and given how little we use the platform, we started with the most basic servers, with 1GB of RAM. We were always told to size the deployment by the GB of storage we would use. We chose Elastic Cloud precisely so that we would not need performance specialists, since we have neither the people nor the time to learn this.

After we upgraded the machines to 2GB yesterday, Elastic support told us that we have to improve performance on the deployment as it is. Right now we have more than 400 shards and about 120 indices. The deployment has been running for only a week. We have read a little about how to fix this, but it is not clear to us what we should do.
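
To put those numbers in perspective, here is a rough sketch of the arithmetic. The counts are the approximate figures quoted above ("more than 400" is treated as a lower bound), and the function names are just for illustration.

```python
# Approximate figures from this post; not exact values read from the cluster.
SHARD_COUNT = 400   # "more than 400 shards", so this is a lower bound
INDEX_COUNT = 120   # "about 120 indices"
NODE_RAM_GB = 2     # node size after the upgrade


def shards_per_index(shards: int, indices: int) -> float:
    """Average number of shards per index."""
    return shards / indices


def shards_per_gb(shards: int, ram_gb: float) -> float:
    """Number of shards for each GB of node RAM."""
    return shards / ram_gb


if __name__ == "__main__":
    print(f"shards per index: {shards_per_index(SHARD_COUNT, INDEX_COUNT):.2f}")
    print(f"shards per GB of RAM: {shards_per_gb(SHARD_COUNT, NODE_RAM_GB):.0f}")
```

So we are at roughly 3 shards per index and at least 200 shards per GB of RAM, after only one week of data.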

One of the recommendations is to use an ILM policy. But some of the integrations have only been running for 2-3 days. What policy can we apply to something like that?

Thanks!