Environment: Windows 10 with WSL2 (Ubuntu 20.04), Docker 20.10.5, docker-compose 1.29.0, ELK 7.12.0, nginx 1.18.0

I enabled X-Pack security in the previous version of my docker-compose.yml and used ./bin/elasticsearch-setup-passwords auto to create the accounts and passwords. Logging in as elastic with its password works fine for both Elasticsearch (http://localhost:9200) and Kibana (http://localhost:5601). The values in the .env file are correct, and that docker-compose.yml file is shown below.

    version: '2.2'
    services:
      elasticsearch:
        image: elasticsearch:7.12.0
        privileged: true
        user: root
        command:
          - /bin/bash
          - -c
          - sysctl -w vm.max_map_count=262144 && su elasticsearch -c bin/elasticsearch
        container_name: elasticsearch
        environment:
          - discovery.type=single-node
          - bootstrap.memory_lock=true
          - xpack.security.enabled=true
          - "ES_JAVA_OPTS=-Xms512m -Xmx512m -Dhttp.proxyHost=172.23.176.1 -Dhttp.proxyPort=7890 -Dhttps.proxyHost=172.23.176.1 -Dhttps.proxyPort=7890"
        ulimits:
          memlock:
            soft: -1
            hard: -1
        volumes:
          - esdata1:/usr/share/elasticsearch/data
          - ./analysis-ik/IKAnalyzer.cfg.xml:/usr/share/elasticsearch/config/analysis-ik/IKAnalyzer.cfg.xml
        ports:
          - 9200:9200
        networks:
          - elastic
      kibana:
        image: kibana:7.12.0
        container_name: kibana
        depends_on:
          - elasticsearch
        environment:
          - xpack.security.enabled=true
          - ELASTICSEARCH_URL=http://elasticsearch:9200
          - ELASTICSEARCH_USERNAME=kibana_system
          - ELASTICSEARCH_PASSWORD=$KIBANA_SYSTEM_PASSWORD
        ports:
          - 5601:5601
        networks:
          - elastic
    volumes:
      esdata1:
        driver: local
    networks:
      elastic:
        driver: bridge

To secure the connection, I tried to configure SSL. I generated .crt and .key files for the CA, Elasticsearch, and Kibana with the command below (shown here for Elasticsearch).

    openssl req -newkey rsa:4096 \
      -x509 \
      -sha256 \
      -days 3650 \
      -nodes \
      -out elasticsearch.crt \
      -keyout elasticsearch.key \
      -subj "/C=CN/ST=Beijing/L=Beijing/O=XXXX/OU=XXXX"

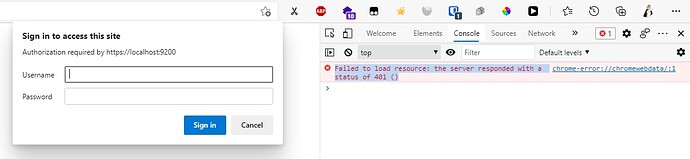
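Note that `-x509` makes `openssl req` emit a self-signed certificate, so running the command above once per name would produce three unrelated self-signed certificates rather than a CA plus certificates issued by it. For comparison, here is a minimal sketch of one way to create a CA and then sign a server certificate with it. The `CN` values, the subject alternative names, the 2048-bit key size, and the temporary working directory are assumptions chosen for illustration, not values from the setup above.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Work in a scratch directory (assumption: the real files would live under the certs volume).
workdir="$(mktemp -d)"
cd "$workdir"

# 1. Self-signed CA certificate and key.
openssl req -newkey rsa:2048 -x509 -sha256 -days 3650 -nodes \
  -out ca.crt -keyout ca.key \
  -subj "/C=CN/ST=Beijing/L=Beijing/O=XXXX/OU=XXXX/CN=Example CA"

# 2. Private key and certificate signing request for the server (no -x509 here).
openssl req -newkey rsa:2048 -sha256 -nodes \
  -out elasticsearch.csr -keyout elasticsearch.key \
  -subj "/C=CN/ST=Beijing/L=Beijing/O=XXXX/OU=XXXX/CN=elasticsearch"

# 3. Host names the certificate should be valid for (assumed names).
printf 'subjectAltName=DNS:elasticsearch,DNS:localhost,IP:127.0.0.1\n' > san.ext

# 4. Sign the request with the CA instead of self-signing it.
openssl x509 -req -in elasticsearch.csr \
  -CA ca.crt -CAkey ca.key -CAcreateserial \
  -sha256 -days 3650 -extfile san.ext \
  -out elasticsearch.crt

# 5. Check that the server certificate chains to the CA.
openssl verify -CAfile ca.crt elasticsearch.crt
```

If the final `openssl verify` step succeeds, a client that trusts `ca.crt` (for example `curl --cacert ca.crt`) should be able to validate `elasticsearch.crt`, provided it connects using one of the listed host names.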

I followed the official documentation to configure docker-compose.yml, but the new version of the file does not work. I have checked that all the .key and .crt files are placed in the correct location; I have not added these files to nginx. I can open https://localhost:9200, but after I enter the username elastic and its password, the page refreshes and stays on the same login prompt.

The browser console for https://localhost:9200 shows: Failed to load resource: the server responded with a status of 401 ()

The new docker-compose.yml is shown below.

    version: "2.2"
    services:
      elasticsearch:
        image: elasticsearch:${VERSION}
        container_name: elasticsearch
        environment:
          - discovery.type=single-node
          - bootstrap.memory_lock=true
          - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
          - xpack.license.self_generated.type=basic
          - xpack.security.enabled=true
          - xpack.security.http.ssl.enabled=true
          - xpack.security.http.ssl.key=$CERTS_DIR/elasticsearch/elasticsearch.key
          - xpack.security.http.ssl.certificate_authorities=$CERTS_DIR/ca/ca.crt
          - xpack.security.http.ssl.certificate=$CERTS_DIR/elasticsearch/elasticsearch.crt
          - xpack.security.transport.ssl.enabled=true
          - xpack.security.transport.ssl.verification_mode=certificate
          - xpack.security.transport.ssl.certificate_authorities=$CERTS_DIR/ca/ca.crt
          - xpack.security.transport.ssl.certificate=$CERTS_DIR/elasticsearch/elasticsearch.crt
          - xpack.security.transport.ssl.key=$CERTS_DIR/elasticsearch/elasticsearch.key
        ulimits:
          memlock:
            soft: -1
            hard: -1
        volumes:
          - data01:/usr/share/elasticsearch/data
          - ./analysis-ik/IKAnalyzer.cfg.xml:/usr/share/elasticsearch/config/analysis-ik/IKAnalyzer.cfg.xml
          # - ./elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml
          - certs:$CERTS_DIR
        ports:
          - 9200:9200
          - 9300:9300
        networks:
          - elastic
        mem_limit: 2g # Delete when deploy in the server
        healthcheck:
          test: curl --cacert $CERTS_DIR/ca/ca.crt -s https://localhost:9200 >/dev/null; if [[ $? == 52 ]]; then echo 0; else echo 1; fi
          interval: 30s
          timeout: 10s
          retries: 5
      kibana:
        image: kibana:${VERSION}
        container_name: kibana
        depends_on: {elasticsearch}
        depends_on: {"elasticsearch": {"condition": "service_healthy"}}
        ports:
          - 5601:5601
        environment:
          SERVERNAME: localhost
          ELASTICSEARCH_URL: https://elasticsearch:9200
          ELASTICSEARCH_HOSTS: https://elasticsearch:9200
          ELASTICSEARCH_USERNAME: kibana_system
          ELASTICSEARCH_PASSWORD: ${KIBANA_SYSTEM_PASSWORD}
          ELASTICSEARCH_SSL_CERTIFICATEAUTHORITIES: $CERTS_DIR/ca/ca.crt
          SERVER_SSL_ENABLED: "true"
          SERVER_SSL_KEY: $CERTS_DIR/kibana/kibana.key
          SERVER_SSL_CERTIFICATE: $CERTS_DIR/kibana/kibana.crt
        volumes:
          - certs:$CERTS_DIR
        networks:
          - elastic
    volumes:
      data01:
        driver: local
      certs:
        driver: local
    networks:
      elastic:
        driver: bridge

Neither of the commands below works. The first fails certificate verification, and the second (with --insecure) returns a 401.

    $ docker run --rm -v es_certs:/certs --network=es_elastic elasticsearch:7.12.0 curl --cacert /certs/ca/ca.crt -u elastic:PASSWORD https://elasticsearch:9200
      % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                     Dload  Upload   Total   Spent    Left  Speed
      0     0    0     0    0     0      0      0 --:--:-- --:--:-- --:--:--     0
    curl: (60) SSL certificate problem: self signed certificate
    More details here: https://curl.haxx.se/docs/sslcerts.html
    curl failed to verify the legitimacy of the server and therefore could not
    establish a secure connection to it. To learn more about this situation and
    how to fix it, please visit the web page mentioned above.
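The `self signed certificate` message suggests that the certificate served by Elasticsearch names itself as its issuer, so it cannot chain to the file passed with `--cacert`. One way to check this is to compare a certificate's subject and issuer hashes. The sketch below is illustrative only: `is_self_signed` is a hypothetical helper name, and the file names in the loop are assumed to be in the current directory.

```shell
#!/usr/bin/env bash

# Succeeds (exit status 0) when the certificate's issuer equals its subject,
# which is the case for a self-signed certificate.
is_self_signed() {
  local cert="$1"
  [ "$(openssl x509 -in "$cert" -noout -subject_hash)" = \
    "$(openssl x509 -in "$cert" -noout -issuer_hash)" ]
}

# Report on each certificate file that exists (assumed file names).
for cert in ca.crt elasticsearch.crt kibana.crt; do
  [ -f "$cert" ] || continue
  if is_self_signed "$cert"; then
    echo "$cert: self-signed"
  else
    echo "$cert: $(openssl x509 -in "$cert" -noout -issuer)"
  fi
done
```

A CA certificate is expected to be self-signed. If `elasticsearch.crt` and `kibana.crt` are reported as self-signed too, they were not issued by `ca.crt`.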

    $ docker run --rm -v es_certs:/certs --network=es_elastic elasticsearch:7.12.0 curl --insecure --cacert /certs/ca/ca.crt -u elastic:PASSWORD https://elasticsearch:9200
      % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                     Dload  Upload   Total   Spent    Left  Speed
    100   465  100   465    0     0   5109      0 --:--:-- --:--:-- --:--:--  5109
    {"error":{"root_cause":[{"type":"security_exception","reason":"unable to authenticate user [elastic] for REST request [/]","header":{"WWW-Authenticate":["Basic realm=\"security\" charset=\"UTF-8\"","Bearer realm=\"security\"","ApiKey"]}}],"type":"security_exception","reason":"unable to authenticate user [elastic] for REST request [/]","header":{"WWW-Authenticate":["Basic realm=\"security\" charset=\"UTF-8\"","Bearer realm=\"security\"","ApiKey"]}},"status":401}
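One possible explanation for the 401, not confirmed here: the earlier compose file stores Elasticsearch data in the `esdata1` volume, while the new one uses `data01`. Built-in user passwords are kept in the cluster's own data, so a node that starts on a fresh, empty data volume would not know the passwords generated earlier. If that is the cause, generating passwords again against the HTTPS endpoint may help. The sketch below wraps that step in a hypothetical helper, `reset_builtin_passwords`. The default container name and URL are taken from the compose file above, and it assumes the server certificate is valid for the host name in the URL.

```shell
#!/usr/bin/env bash

# Hypothetical helper: re-run elasticsearch-setup-passwords inside the container.
#   $1  container name (default: elasticsearch)
#   $2  HTTPS URL of the node as seen from inside the container
reset_builtin_passwords() {
  local container="${1:-elasticsearch}"
  local url="${2:-https://elasticsearch:9200}"
  # --batch skips the interactive confirmation; --url points the tool at the TLS endpoint.
  docker exec "$container" /bin/bash -c \
    "bin/elasticsearch-setup-passwords auto --batch --url $url"
}
```

Calling `reset_builtin_passwords` once the container is up would print newly generated passwords. The `kibana_system` value would then need to be copied into `KIBANA_SYSTEM_PASSWORD` in the `.env` file before restarting Kibana.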

How can I fix this?