Hi @leandrojmp,

I find that it fails every morning. I just found the logs below:

][r.suppressed ] [seats-elk-vm] path: /_security/_query/api_key, params: {pretty=true}

org.elasticsearch.ElasticsearchException: Security must be explicitly enabled when using a [basic] license. Enable security by setting [xpack.security.enabled] to [true] in the elasticsearch.yml file and restart the node.

0:33:43,638][INFO ][o.e.p.PluginsService ] [seats-elk-vm] no plugins loaded

[2023-02-13T10:33:43,689][INFO ][o.e.e.NodeEnvironment ] [seats-elk-vm] using [1] data paths, mounts [[/ (/dev/sda1)]], net usable_space [23.1gb], net total_space [28.8gb], types [ext4]

[2023-02-13T10:33:43,690][INFO ][o.e.e.NodeEnvironment ] [seats-elk-vm] heap size [1.9gb], compressed ordinary object pointers [true]

[2023-02-13T10:33:43,853][INFO ][o.e.n.Node ] [seats-elk-vm] node name [seats-elk-vm], node ID [PZ4AAXiVRSmSWezIBzgZmg], cluster name [elasticsearch], roles [transform, data_frozen, master, remote_cluster_client, data, ml, data_content, data_hot, data_warm, data_cold, ingest]

[2023-02-13T10:33:48,347][INFO ][o.e.x.m.p.l.CppLogMessageHandler] [seats-elk-vm] [controller/10187] [Main.cc@122] controller (64 bit): Version 7.17.9 (Build ffceceeb3d63bc) Copyright (c) 2023 Elasticsearch BV

[2023-02-13T10:33:49,447][INFO ][o.e.x.s.a.s.FileRolesStore] [seats-elk-vm] parsed [0] roles from file [/etc/elasticsearch/roles.yml]

[2023-02-13T10:33:50,077][INFO ][o.e.i.g.ConfigDatabases ] [seats-elk-vm] initialized default databases [[GeoLite2-Country.mmdb, GeoLite2-City.mmdb, GeoLite2-ASN.mmdb]], config databases [[]] and watching [/etc/elasticsearch/ingest-geoip] for changes

[2023-02-13T10:33:50,078][INFO ][o.e.i.g.DatabaseNodeService] [seats-elk-vm] initialized database registry, using geoip-databases directory [/tmp/elasticsearch-1983527832423388062/geoip-databases/PZ4AAXiVRSmSWezIBzgZmg]

[2023-02-13T10:33:50,711][INFO ][o.e.t.NettyAllocator ] [seats-elk-vm] creating NettyAllocator with the following configs: [name=elasticsearch_configured, chunk_size=1mb, suggested_max_allocation_size=1mb, factors={es.unsafe.use_netty_default_chunk_and_page_size=false, g1gc_enabled=true, g1gc_region_size=4mb}]

[2023-02-13T10:33:50,745][INFO ][o.e.i.r.RecoverySettings ] [seats-elk-vm] using rate limit [40mb] with [default=40mb, read=0b, write=0b, max=0b]

[2023-02-13T10:33:50,789][INFO ][o.e.d.DiscoveryModule ] [seats-elk-vm] using discovery type [zen] and seed hosts providers [settings]

[2023-02-13T10:33:51,229][INFO ][o.e.g.DanglingIndicesState] [seats-elk-vm] gateway.auto_import_dangling_indices is disabled, dangling indices will not be automatically detected or imported and must be managed manually

[2023-02-13T10:33:51,886][INFO ][o.e.n.Node ] [seats-elk-vm] initialized

[2023-02-13T10:33:51,887][INFO ][o.e.n.Node ] [seats-elk-vm] starting ...

[2023-02-13T10:33:51,912][INFO ][o.e.x.s.c.f.PersistentCache] [seats-elk-vm] persistent cache index loaded

[2023-02-13T10:33:51,913][INFO ][o.e.x.d.l.DeprecationIndexingComponent] [seats-elk-vm] deprecation component started

[2023-02-13T10:33:52,046][INFO ][o.e.t.TransportService ] [seats-elk-vm] publish_address {10.50.98.5:9300}, bound_addresses {[::]:9300}

[2023-02-13T10:33:52,512][INFO ][o.e.b.BootstrapChecks ] [seats-elk-vm] bound or publishing to a non-loopback address, enforcing bootstrap checks

[2023-02-13T10:33:52,538][INFO ][o.e.c.c.Coordinator ] [seats-elk-vm] cluster UUID [dwY8x9UwQ_uhhMGjSfFr8w]

[2023-02-13T10:33:52,681][INFO ][o.e.c.s.MasterService ] [seats-elk-vm] elected-as-master ([1] nodes joined)[{seats-elk-vm}{PZ4AAXiVRSmSWezIBzgZmg}{oV1QVwS-QHG-u1aokRv4og}{10.50.98.5}{10.50.98.5:9300}{cdfhilmrstw} elect leader, _BECOME_MASTER_TASK_, _FINISH_ELECTION_], term: 7, version: 154, delta: master node changed {previous [], current [{seats-elk-vm}{PZ4AAXiVRSmSWezIBzgZmg}{oV1QVwS-QHG-u1aokRv4og}{10.50.98.5}{10.50.98.5:9300}{cdfhilmrstw}]}

[2023-02-13T10:33:52,798][INFO ][o.e.c.s.ClusterApplierService] [seats-elk-vm] master node changed {previous [], current [{seats-elk-vm}{PZ4AAXiVRSmSWezIBzgZmg}{oV1QVwS-QHG-u1aokRv4og}{10.50.98.5}{10.50.98.5:9300}{cdfhilmrstw}]}, term: 7, version: 154, reason: Publication{term=7, version=154}

[2023-02-13T10:33:52,859][INFO ][o.e.h.AbstractHttpServerTransport] [seats-elk-vm] publish_address {10.50.98.5:9200}, bound_addresses {[::]:9200}

[2023-02-13T10:33:52,860][INFO ][o.e.n.Node ] [seats-elk-vm] started

[2023-02-13T10:33:53,285][INFO ][o.e.l.LicenseService ] [seats-elk-vm] license [bb80596b-6f22-45e7-a429-672184e91914] mode [basic] - valid

[2023-02-13T10:33:53,286][INFO ][o.e.x.s.s.SecurityStatusChangeListener] [seats-elk-vm] Active license is now [BASIC]; Security is disabled

[2023-02-13T10:33:53,287][WARN ][o.e.x.s.s.SecurityStatusChangeListener] [seats-elk-vm] Elasticsearch built-in security features are not enabled. Without authentication, your cluster could be accessible to anyone. See https://www.elastic.co/guide/en/elasticsearch/reference/7.17/security-minimal-setup.html to enable security.

[2023-02-13T10:33:53,294][INFO ][o.e.g.GatewayService ] [seats-elk-vm] recovered [11] indices into cluster_state

[2023-02-13T10:33:54,055][ERROR][o.e.i.g.GeoIpDownloader ] [seats-elk-vm] exception during geoip databases update

org.elasticsearch.ElasticsearchException: not all primary shards of [.geoip_databases] index are active

at org.elasticsearch.ingest.geoip.GeoIpDownloader.updateDatabases(GeoIpDownloader.java:137) ~[ingest-geoip-7.17.9.jar:7.17.9]

at org.elasticsearch.ingest.geoip.GeoIpDownloader.runDownloader(GeoIpDownloader.java:284) [ingest-geoip-7.17.9.jar:7.17.9]

at org.elasticsearch.ingest.geoip.GeoIpDownloaderTaskExecutor.nodeOperation(GeoIpDownloaderTaskExecutor.java:100) [ingest-geoip-7.17.9.jar:7.17.9]

at org.elasticsearch.ingest.geoip.GeoIpDownloaderTaskExecutor.nodeOperation(GeoIpDownloaderTaskExecutor.java:46) [ingest-geoip-7.17.9.jar:7.17.9]

at org.elasticsearch.persistent.NodePersistentTasksExecutor$1.doRun(NodePersistentTasksExecutor.java:42) [elasticsearch-7.17.9.jar:7.17.9]

at org.elasticsearch.common.util.concurrent.ThreadContext$ContextPreservingAbstractRunnable.doRun(ThreadContext.java:777) [elasticsearch-7.17.9.jar:7.17.9]

at org.elasticsearch.common.util.concurrent.AbstractRunnable.run(AbstractRunnable.java:26) [elasticsearch-7.17.9.jar:7.17.9]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1144) [?:?]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:642) [?:?]

at java.lang.Thread.run(Thread.java:1589) [?:?]

[2023-02-13T10:33:54,485][INFO ][o.e.i.g.DatabaseNodeService] [seats-elk-vm] retrieve geoip database [GeoLite2-Country.mmdb] from [.geoip_databases] to [/tmp/elasticsearch-1983527832423388062/geoip-databases/PZ4AAXiVRSmSWezIBzgZmg/GeoLite2-Country.mmdb.tmp.gz]

[2023-02-13T10:33:54,486][INFO ][o.e.i.g.DatabaseNodeService] [seats-elk-vm] retrieve geoip database [GeoLite2-City.mmdb] from [.geoip_databases] to [/tmp/elasticsearch-1983527832423388062/geoip-databases/PZ4AAXiVRSmSWezIBzgZmg/GeoLite2-City.mmdb.tmp.gz]

[2023-02-13T10:33:54,488][INFO ][o.e.i.g.DatabaseNodeService] [seats-elk-vm] retrieve geoip database [GeoLite2-ASN.mmdb] from [.geoip_databases] to [/tmp/elasticsearch-1983527832423388062/geoip-databases/PZ4AAXiVRSmSWezIBzgZmg/GeoLite2-ASN.mmdb.tmp.gz]

[2023-02-13T10:33:55,237][INFO ][o.e.c.r.a.AllocationService] [seats-elk-vm] Cluster health status changed from [RED] to [YELLOW] (reason: [shards started [[.ds-.logs-deprecation.elasticsearch-default-2023.02.08-000001][0]]]).

[2023-02-13T10:33:55,360][INFO ][o.e.i.g.DatabaseNodeService] [seats-elk-vm] successfully reloaded changed geoip database file [/tmp/elasticsearch-1983527832423388062/geoip-databases/PZ4AAXiVRSmSWezIBzgZmg/GeoLite2-Country.mmdb]

[2023-02-13T10:33:55,819][INFO ][o.e.i.g.DatabaseNodeService] [seats-elk-vm] successfully reloaded changed geoip database file [/tmp/elasticsearch-1983527832423388062/geoip-databases/PZ4AAXiVRSmSWezIBzgZmg/GeoLite2-ASN.mmdb]

[2023-02-13T10:33:56,995][INFO ][o.e.i.g.DatabaseNodeService] [seats-elk-vm] successfully reloaded changed geoip database file [/tmp/elasticsearch-1983527832423388062/geoip-databases/PZ4AAXiVRSmSWezIBzgZmg/GeoLite2-City.mmdb]

[2023-02-13T12:03:00,518][INFO ][o.e.t.LoggingTaskListener] [seats-elk-vm] 58943 finished with response BulkByScrollResponse[took=74.5ms,timed_out=false,sliceId=null,updated=17,created=0,deleted=0,batches=1,versionConflicts=0,noops=0,retries=0,throttledUntil=0s,bulk_failures=[],search_failures=[]]

[2023-02-13T12:03:00,587][INFO ][o.e.t.LoggingTaskListener] [seats-elk-vm] 58944 finished with response BulkByScrollResponse[took=300.6ms,timed_out=false,sliceId=null,updated=342,created=0,deleted=0,batches=1,versionConflicts=0,noops=0,retries=0,throttledUntil=0s,bulk_failures=[],search_failures=[]]

[2023-02-13T12:03:02,794][INFO ][o.e.x.i.a.TransportPutLifecycleAction] [seats-elk-vm] updating index lifecycle policy [.alerts-ilm-policy]

[2023-02-13T12:24:06,665][INFO ][o.e.t.LoggingTaskListener] [seats-elk-vm] 74860 finished with response BulkByScrollResponse[took=40.6ms,timed_out=false,sliceId=null,updated=17,created=0,deleted=0,batches=1,versionConflicts=0,noops=0,retries=0,throttledUntil=0s,bulk_failures=[],search_failures=[]]

[2023-02-13T12:24:06,897][INFO ][o.e.t.LoggingTaskListener] [seats-elk-vm] 74883 finished with response BulkByScrollResponse[took=171.5ms,timed_out=false,sliceId=null,updated=348,created=0,deleted=0,batches=1,versionConflicts=0,noops=0,retries=0,throttledUntil=0s,bulk_failures=[],search_failures=[]]

[2023-02-13T12:24:09,068][INFO ][o.e.x.i.a.TransportPutLifecycleAction] [seats-elk-vm] updating index lifecycle policy [.alerts-ilm-policy]

[2023-02-13T12:26:09,407][WARN ][r.suppressed ] [seats-elk-vm] path: /_security/_query/api_key, params: {pretty=true}

org.elasticsearch.ElasticsearchException: Security must be explicitly enabled when using a [basic] license. Enable security by setting [xpack.security.enabled] to [true] in the elasticsearch.yml file and restart the node.

at org.elasticsearch.xpack.security.rest.action.SecurityBaseRestHandler.checkFeatureAvailable(SecurityBaseRestHandler.java:73) ~[x-pack-security-7.17.9.jar:7.17.9]

at org.elasticsearch.xpack.security.rest.action.SecurityBaseRestHandler.prepareRequest(SecurityBaseRestHandler.java:49) [x-pack-security-7.17.9.jar:7.17.9]

at org.elasticsearch.rest.BaseRestHandler.handleRequest(BaseRestHandler.java:86) [elasticsearch-7.17.9.jar:7.17.9]

at org.elasticsearch.xpack.security.rest.SecurityRestFilter.handleRequest(SecurityRestFilter.java:105) [x-pack-security-7.17.9.jar:7.17.9]

at org.elasticsearch.rest.RestController.dispatchRequest(RestController.java:337) [elasticsearch-7.17.9.jar:7.17.9]

at org.elasticsearch.rest.RestController.tryAllHandlers(RestController.java:403) [elasticsearch-7.17.9.jar:7.17.9]

at org.elasticsearch.rest.RestController.dispatchRequest(RestController.java:255) [elasticsearch-7.17.9.jar:7.17.9]

at org.elasticsearch.http.AbstractHttpServerTransport.dispatchRequest(AbstractHttpServerTransport.java:382) [elasticsearch-7.17.9.jar:7.17.9]

at org.elasticsearch.http.AbstractHttpServerTransport.handleIncomingRequest(AbstractHttpServerTransport.java:461) [elasticsearch-7.17.9.jar:7.17.9]

at org.elasticsearch.http.AbstractHttpServerTransport.incomingRequest(AbstractHttpServerTransport.java:357) [elasticsearch-7.17.9.jar:7.17.9]

at org.elasticsearch.http.netty4.Netty4HttpRequestHandler.channelRead0(Netty4HttpRequestHandler.java:35) [transport-netty4-client-7.17.9.jar:7.17.9]

at org.elasticsearch.http.netty4.Netty4HttpRequestHandler.channelRead0(Netty4HttpRequestHandler.java:19) [transport-netty4-client-7.17.9.jar:7.17.9]

at io.netty.channel.SimpleChannelInboundHandler.channelRead(SimpleChannelInboundHandler.java:99) [netty-transport-4.1.66.Final.jar:4.1.66.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379) [netty-transport-4.1.66.Final.jar:4.1.66.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365) [netty-transport-4.1.66.Final.jar:4.1.66.Final]

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357) [netty-transport-4.1.66.Final.jar:4.1.66.Final]

at org.elasticsearch.http.netty4.Netty4HttpPipeliningHandler.channelRead(Netty4HttpPipeliningHandler.java:48) [transport-netty4-client-7.17.9.jar:7.17.9]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379) [netty-transport-4.1.66.Final.jar:4.1.66.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365) [netty-transport-4.1.66.Final.jar:4.1.66.Final]

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357) [netty-transport-4.1.66.Final.jar:4.1.66.Final]

at io.netty.handler.codec.MessageToMessageDecoder.channelRead(MessageToMessageDecoder.java:103) [netty-codec-4.1.66.Final.jar:4.1.66.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379) [netty-transport-4.1.66.Final.jar:4.1.66.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365) [netty-transport-4.1.66.Final.jar:4.1.66.Final]

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357) [netty-transport-4.1.66.Final.jar:4.1.66.Final]

at io.netty.handler.codec.MessageToMessageDecoder.channelRead(MessageToMessageDecoder.java:103) [netty-codec-4.1.66.Final.jar:4.1.66.Final]

at io.netty.handler.codec.MessageToMessageCodec.channelRead(MessageToMessageCodec.java:111) [netty-codec-4.1.66.Final.jar:4.1.66.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379) [netty-transport-4.1.66.Final.jar:4.1.66.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365) [netty-transport-4.1.66.Final.jar:4.1.66.Final]

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357) [netty-transport-4.1.66.Final.jar:4.1.66.Final]

at io.netty.handler.codec.MessageToMessageDecoder.channelRead(MessageToMessageDecoder.java:103) [netty-codec-4.1.66.Final.jar:4.1.66.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379) [netty-transport-4.1.66.Final.jar:4.1.66.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365) [netty-transport-4.1.66.Final.jar:4.1.66.Final]

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357) [netty-transport-4.1.66.Final.jar:4.1.66.Final]

at io.netty.handler.codec.MessageToMessageDecoder.channelRead(MessageToMessageDecoder.java:103) [netty-codec-4.1.66.Final.jar:4.1.66.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379) [netty-transport-4.1.66.Final.jar:4.1.66.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365) [netty-transport-4.1.66.Final.jar:4.1.66.Final]

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357) [netty-transport-4.1.66.Final.jar:4.1.66.Final]

at io.netty.handler.codec.ByteToMessageDecoder.fireChannelRead(ByteToMessageDecoder.java:324) [netty-codec-4.1.66.Final.jar:4.1.66.Final]

at io.netty.handler.codec.ByteToMessageDecoder.channelRead(ByteToMessageDecoder.java:296) [netty-codec-4.1.66.Final.jar:4.1.66.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379) [netty-transport-4.1.66.Final.jar:4.1.66.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365) [netty-transport-4.1.66.Final.jar:4.1.66.Final]

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357) [netty-transport-4.1.66.Final.jar:4.1.66.Final]

at io.netty.handler.timeout.IdleStateHandler.channelRead(IdleStateHandler.java:286) [netty-handler-4.1.66.Final.jar:4.1.66.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379) [netty-transport-4.1.66.Final.jar:4.1.66.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365) [netty-transport-4.1.66.Final.jar:4.1.66.Final]

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357) [netty-transport-4.1.66.Final.jar:4.1.66.Final]

at io.netty.handler.codec.MessageToMessageDecoder.channelRead(MessageToMessageDecoder.java:103) [netty-codec-4.1.66.Final.jar:4.1.66.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379) [netty-transport-4.1.66.Final.jar:4.1.66.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365) [netty-transport-4.1.66.Final.jar:4.1.66.Final]

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357) [netty-transport-4.1.66.Final.jar:4.1.66.Final]

at io.netty.channel.DefaultChannelPipeline$HeadContext.channelRead(DefaultChannelPipeline.java:1410) [netty-transport-4.1.66.Final.jar:4.1.66.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379) [netty-transport-4.1.66.Final.jar:4.1.66.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365) [netty-transport-4.1.66.Final.jar:4.1.66.Final]

at io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:919) [netty-transport-4.1.66.Final.jar:4.1.66.Final]

at io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:166) [netty-transport-4.1.66.Final.jar:4.1.66.Final]

at io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:719) [netty-transport-4.1.66.Final.jar:4.1.66.Final]

at io.netty.channel.nio.NioEventLoop.processSelectedKeysPlain(NioEventLoop.java:620) [netty-transport-4.1.66.Final.jar:4.1.66.Final]

at io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:583) [netty-transport-4.1.66.Final.jar:4.1.66.Final]

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:493) [netty-transport-4.1.66.Final.jar:4.1.66.Final]

at io.netty.util.concurrent.SingleThreadEventExecutor$4.run(SingleThreadEventExecutor.java:986) [netty-common-4.1.66.Final.jar:4.1.66.Final]

at io.netty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74) [netty-common-4.1.66.Final.jar:4.1.66.Final]

at java.lang.Thread.run(Thread.java:1589) [?:?]

[2023-02-13T14:40:10,742][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] execution of [ReschedulingRunnable{runnable=org.elasticsearch.watcher.ResourceWatcherService$ResourceMonitor@5d96df62, interval=5s}] took [206555ms] which is above the warn threshold of [5000ms]

[2023-02-13T14:40:10,996][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] timer thread slept for [16s/16097ms] on absolute clock which is above the warn threshold of [5000ms]

[2023-02-13T14:42:23,997][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] timer thread slept for [16s/16096630100ns] on relative clock which is above the warn threshold of [5000ms]

[2023-02-13T14:43:15,504][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] execution of [ReschedulingRunnable{runnable=org.elasticsearch.monitor.jvm.JvmGcMonitorService$1@3cedb60, interval=1s}] took [226345ms] which is above the warn threshold of [5000ms]

[2023-02-13T14:43:40,746][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] timer thread slept for [3.7m/226345ms] on absolute clock which is above the warn threshold of [5000ms]

[2023-02-13T14:43:55,957][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] timer thread slept for [3.7m/226345452400ns] on relative clock which is above the warn threshold of [5000ms]

[2023-02-13T14:44:17,554][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] timer thread slept for [1.5m/91515ms] on absolute clock which is above the warn threshold of [5000ms]

[2023-02-13T14:44:37,447][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] timer thread slept for [1.5m/91514485300ns] on relative clock which is above the warn threshold of [5000ms]

[2023-02-13T14:44:52,508][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] timer thread slept for [38.8s/38838ms] on absolute clock which is above the warn threshold of [5000ms]

[2023-02-13T14:45:12,206][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] timer thread slept for [38.8s/38838241200ns] on relative clock which is above the warn threshold of [5000ms]

[2023-02-13T14:45:42,348][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] timer thread slept for [49.9s/49952ms] on absolute clock which is above the warn threshold of [5000ms]

[2023-02-13T14:46:05,346][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] timer thread slept for [49.9s/49952197800ns] on relative clock which is above the warn threshold of [5000ms]

[2023-02-13T14:46:01,802][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] execution of [ReschedulingRunnable{runnable=org.elasticsearch.search.SearchService$Reaper@482ec1f9, interval=1m}] took [49952ms] which is above the warn threshold of [5000ms]

[2023-02-13T14:46:26,448][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] timer thread slept for [44.5s/44550ms] on absolute clock which is above the warn threshold of [5000ms]

[2023-02-13T14:46:38,501][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] timer thread slept for [44.5s/44550112600ns] on relative clock which is above the warn threshold of [5000ms]

[2023-02-13T14:47:02,046][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] timer thread slept for [35.2s/35247ms] on absolute clock which is above the warn threshold of [5000ms]

[2023-02-13T14:47:16,202][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] timer thread slept for [35.2s/35246191600ns] on relative clock which is above the warn threshold of [5000ms]

[2023-02-13T14:47:53,345][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] timer thread slept for [51.4s/51462ms] on absolute clock which is above the warn threshold of [5000ms]

[2023-02-13T14:47:34,695][WARN ][o.e.m.f.FsHealthService ] [seats-elk-vm] health check of [/var/lib/elasticsearch/nodes/0] took [35246ms] which is above the warn threshold of [5s]

[2023-02-13T14:48:40,105][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] timer thread slept for [51.4s/51462090800ns] on relative clock which is above the warn threshold of [5000ms]

[2023-02-13T14:50:10,396][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] timer thread slept for [2.2m/136650ms] on absolute clock which is above the warn threshold of [5000ms]

[2023-02-13T14:50:38,002][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] timer thread slept for [2.2m/136650183800ns] on relative clock which is above the warn threshold of [5000ms]

[2023-02-13T14:51:12,002][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] timer thread slept for [1m/61544ms] on absolute clock which is above the warn threshold of [5000ms]

[2023-02-13T14:52:11,002][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] timer thread slept for [1m/61544485400ns] on relative clock which is above the warn threshold of [5000ms]

[2023-02-13T14:52:31,305][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] timer thread slept for [1.3m/79253ms] on absolute clock which is above the warn threshold of [5000ms]

[2023-02-13T14:54:15,544][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] timer thread slept for [1.3m/79252940400ns] on relative clock which is above the warn threshold of [5000ms]

[2023-02-13T14:54:42,850][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] timer thread slept for [2.1m/131551ms] on absolute clock which is above the warn threshold of [5000ms]

[2023-02-13T14:55:32,097][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] timer thread slept for [2.1m/131550906400ns] on relative clock which is above the warn threshold of [5000ms]

[2023-02-13T14:56:27,855][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] timer thread slept for [1.7m/103703ms] on absolute clock which is above the warn threshold of [5000ms]

[2023-02-13T14:56:57,996][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] timer thread slept for [1.7m/103702725100ns] on relative clock which is above the warn threshold of [5000ms]

[2023-02-13T14:57:30,393][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] timer thread slept for [1m/63996ms] on absolute clock which is above the warn threshold of [5000ms]

[2023-02-13T14:58:27,752][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] timer thread slept for [1m/63995692200ns] on relative clock which is above the warn threshold of [5000ms]

[2023-02-13T14:59:03,446][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] timer thread slept for [1.5m/93103ms] on absolute clock which is above the warn threshold of [5000ms]

[2023-02-13T15:00:05,946][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] timer thread slept for [1.5m/93103457100ns] on relative clock which is above the warn threshold of [5000ms]

[2023-02-13T15:01:00,500][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] timer thread slept for [1.9m/116650ms] on absolute clock which is above the warn threshold of [5000ms]

[2023-02-13T15:01:43,507][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] timer thread slept for [1.9m/116650064800ns] on relative clock which is above the warn threshold of [5000ms]

[2023-02-13T15:02:18,555][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] timer thread slept for [1.2m/77236ms] on absolute clock which is above the warn threshold of [5000ms]

elasticsearch@seats-elk-vm:/var/log/elasticsearch$

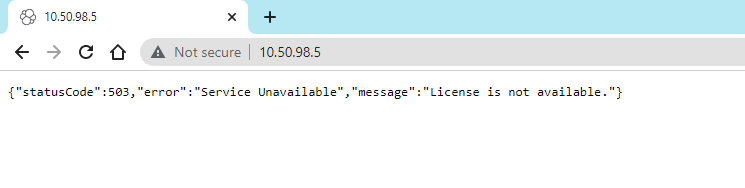

I guess it has something to do with the security level.
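In case it helps with reading the long run of `timer thread slept` warnings above, here is a rough Python sketch that pulls the timestamp and stall length out of each absolute-clock warning. The function name `stalls`, the regex, and the `sample` list are my own assumptions based only on the log format pasted here, so treat it as an illustration rather than a tested tool.

```python
import re

# Assumed pattern, derived from the WARN lines in this post, e.g.
# [2023-02-13T14:40:10,996][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] timer thread slept for [16s/16097ms] on absolute clock ...
SLEPT = re.compile(
    r"^\[(?P<ts>[^\]]+)\]\[WARN ?\]\[o\.e\.t\.ThreadPool\s*\].*"
    r"timer thread slept for \[[^/\]]+/(?P<ms>\d+)ms\] on absolute clock"
)


def stalls(lines):
    """Return (timestamp, milliseconds) for each absolute-clock stall warning."""
    found = []
    for line in lines:
        match = SLEPT.search(line)
        if match:
            found.append((match.group("ts"), int(match.group("ms"))))
    return found


# Two lines copied from the log above; the relative-clock line reports
# nanoseconds and is skipped so that each stall is counted only once.
sample = [
    "[2023-02-13T14:40:10,996][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] "
    "timer thread slept for [16s/16097ms] on absolute clock which is above "
    "the warn threshold of [5000ms]",
    "[2023-02-13T14:42:23,997][WARN ][o.e.t.ThreadPool ] [seats-elk-vm] "
    "timer thread slept for [16s/16096630100ns] on relative clock which is "
    "above the warn threshold of [5000ms]",
]

for timestamp, millis in stalls(sample):
    print(timestamp, millis, "ms")
```

To run it against the real file, the same function could be given an open file handle for the Elasticsearch log instead of `sample`.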