Hi,

My ES cluster stores logs written by Graylog. Occasionally the load on one node suddenly increases, and this slows down writes to the whole cluster. The node is not doing garbage collection, and I did not see anything obvious in the hot threads output. What could be the cause?

Elasticsearch: 6.8.1

Java: 1.8

55.3% (276.6ms out of 500ms) cpu usage by thread 'elasticsearch[10.0.2.1_hot][transport_worker][T#113]'

6/10 snapshots sharing following 34 elements

    org.elasticsearch.transport.TcpTransport.inboundMessage(TcpTransport.java:763)
    org.elasticsearch.transport.netty4.Netty4MessageChannelHandler.channelRead(Netty4MessageChannelHandler.java:53)
    io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:362)
    io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:348)
    io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:340)
    io.netty.handler.codec.ByteToMessageDecoder.fireChannelRead(ByteToMessageDecoder.java:323)
    io.netty.handler.codec.ByteToMessageDecoder.channelRead(ByteToMessageDecoder.java:297)
    io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:362)
    io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:348)
    io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:340)
    io.netty.handler.logging.LoggingHandler.channelRead(LoggingHandler.java:241)
    io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:362)
    io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:348)
    io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:340)
    io.netty.handler.ssl.SslHandler.unwrap(SslHandler.java:1436)
    io.netty.handler.ssl.SslHandler.decodeJdkCompatible(SslHandler.java:1203)
    io.netty.handler.ssl.SslHandler.decode(SslHandler.java:1247)
    io.netty.handler.codec.ByteToMessageDecoder.decodeRemovalReentryProtection(ByteToMessageDecoder.java:502)
    io.netty.handler.codec.ByteToMessageDecoder.callDecode(ByteToMessageDecoder.java:441)

--

54.9% (274.3ms out of 500ms) cpu usage by thread 'elasticsearch[10.0.2.1_hot][transport_worker][T#110]'

6/10 snapshots sharing following 26 elements

    sun.security.ssl.SSLEngineImpl.isInboundDone(SSLEngineImpl.java:636)
    sun.security.ssl.SSLEngineImpl.readRecord(SSLEngineImpl.java:551)
    sun.security.ssl.SSLEngineImpl.unwrap(SSLEngineImpl.java:398)
    sun.security.ssl.SSLEngineImpl.unwrap(SSLEngineImpl.java:377)
    javax.net.ssl.SSLEngine.unwrap(SSLEngine.java:626)
    io.netty.handler.ssl.SslHandler$SslEngineType$3.unwrap(SslHandler.java:295)
    io.netty.handler.ssl.SslHandler.unwrap(SslHandler.java:1301)
    io.netty.handler.ssl.SslHandler.decodeJdkCompatible(SslHandler.java:1203)
    io.netty.handler.ssl.SslHandler.decode(SslHandler.java:1247)
    io.netty.handler.codec.ByteToMessageDecoder.decodeRemovalReentryProtection(ByteToMessageDecoder.java:502)
    io.netty.handler.codec.ByteToMessageDecoder.callDecode(ByteToMessageDecoder.java:441)
    io.netty.handler.codec.ByteToMessageDecoder.channelRead(ByteToMessageDecoder.java:278)
    io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:362)
    io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:348)
    io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:340)
    io.netty.channel.DefaultChannelPipeline$HeadContext.channelRead(DefaultChannelPipeline.java:1434)
    io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:362)
    io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:348)
    io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:965)

--

50.3% (251.7ms out of 500ms) cpu usage by thread 'elasticsearch[10.0.2.1_hot][transport_worker][T#128]'

10/10 snapshots sharing following 20 elements

    io.netty.handler.ssl.SslHandler.wrapAndFlush(SslHandler.java:797)
    io.netty.handler.ssl.SslHandler.flush(SslHandler.java:778)
    io.netty.channel.AbstractChannelHandlerContext.invokeFlush0(AbstractChannelHandlerContext.java:776)
    io.netty.channel.AbstractChannelHandlerContext.invokeFlush(AbstractChannelHandlerContext.java:768)
    io.netty.channel.AbstractChannelHandlerContext.flush(AbstractChannelHandlerContext.java:749)
    io.netty.handler.logging.LoggingHandler.flush(LoggingHandler.java:265)
    io.netty.channel.AbstractChannelHandlerContext.invokeFlush0(AbstractChannelHandlerContext.java:776)
    io.netty.channel.AbstractChannelHandlerContext.invokeFlush(AbstractChannelHandlerContext.java:768)
    io.netty.channel.AbstractChannelHandlerContext.flush(AbstractChannelHandlerContext.java:749)
    io.netty.channel.ChannelDuplexHandler.flush(ChannelDuplexHandler.java:117)
    io.netty.channel.AbstractChannelHandlerContext.invokeFlush0(AbstractChannelHandlerContext.java:776)
    io.netty.channel.AbstractChannelHandlerContext.invokeFlush(AbstractChannelHandlerContext.java:768)
    io.netty.channel.AbstractChannelHandlerContext.access$1500(AbstractChannelHandlerContext.java:38)
    io.netty.channel.AbstractChannelHandlerContext$WriteAndFlushTask.write(AbstractChannelHandlerContext.java:1152)
    io.netty.channel.AbstractChannelHandlerContext$AbstractWriteTask.run(AbstractChannelHandlerContext.java:1075)
    io.netty.util.concurrent.AbstractEventExecutor.safeExecute(AbstractEventExecutor.java:163)
    io.netty.util.concurrent.SingleThreadEventExecutor.runAllTasks(SingleThreadEventExecutor.java:404)
    io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:474)
    io.netty.util.concurrent.SingleThreadEventExecutor$5.run(SingleThreadEventExecutor.java:909)
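The three threads above are reported over 500ms windows with 10 snapshots each, which matches the defaults of the Elasticsearch nodes hot threads API (`threads=3`, `interval=500ms`, `snapshots=10`). A minimal Python sketch for re-collecting and summarizing that output is below; the helper names are hypothetical, and it assumes the header format shown in this post. It does not contact the cluster: it only builds the request URL and parses text that has already been saved.

```python
from __future__ import annotations

import re
from urllib.parse import urlencode

# Matches hot threads header lines such as:
# 55.3% (276.6ms out of 500ms) cpu usage by thread 'elasticsearch[node][pool][T#1]'
HEADER = re.compile(
    r"(?P<pct>\d+(?:\.\d+)?)% \((?P<used>[\d.]+)ms out of (?P<window>[\d.]+)ms\) "
    r"cpu usage by thread '(?P<thread>[^']+)'"
)


def hot_threads_url(base: str, node: str = "_local", threads: int = 3,
                    interval: str = "500ms", snapshots: int = 10) -> str:
    """Build a nodes hot threads API URL; the keyword defaults mirror the API's defaults."""
    query = urlencode({"threads": threads, "interval": interval, "snapshots": snapshots})
    return f"{base.rstrip('/')}/_nodes/{node}/hot_threads?{query}"


def parse_hot_threads(text: str) -> list[tuple[float, str]]:
    """Return (cpu_percent, thread_name) pairs found in saved output, busiest first."""
    rows = [(float(m["pct"]), m["thread"]) for m in HEADER.finditer(text)]
    return sorted(rows, reverse=True)


def thread_pool(thread_name: str) -> str:
    """Return the pool part of a name like 'elasticsearch[node][transport_worker][T#113]'."""
    parts = re.findall(r"\[([^\]]+)\]", thread_name)
    return parts[1] if len(parts) > 1 else "unknown"


# Usage on the three header lines from this post (no cluster access needed).
saved = """\
55.3% (276.6ms out of 500ms) cpu usage by thread 'elasticsearch[10.0.2.1_hot][transport_worker][T#113]'
54.9% (274.3ms out of 500ms) cpu usage by thread 'elasticsearch[10.0.2.1_hot][transport_worker][T#110]'
50.3% (251.7ms out of 500ms) cpu usage by thread 'elasticsearch[10.0.2.1_hot][transport_worker][T#128]'
"""
print(hot_threads_url("http://localhost:9200"))
for pct, name in parse_hot_threads(saved):
    print(f"{pct:5.1f}%  {thread_pool(name)}  {name}")
```

In this post every hot thread belongs to the transport_worker pool, and all three stacks pass through Netty's SslHandler, i.e. TLS work on the transport layer.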

What is the specification of your hardware? What type of storage do you have? What is the full output of the cluster stats API?

Also please do not post images of text as they can be very hard to read and are not searchable.

Elasticsearch: 6.8.1

Java: 1.8.0-275

Hardware: 256 GB RAM, 40 vCores, 1.5 TB NVMe SSD (nvme0n1)

I found that system (kernel) CPU usage is high (83.7% sy in top):

    top - 09:23:31 up 66 days, 19:12, 2 users, load average: 58.18, 28.24, 16.51
    Tasks: 735 total, 6 running, 728 sleeping, 1 stopped, 0 zombie
    %Cpu(s): 12.9 us, 83.7 sy, 0.0 ni, 3.4 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st
    KiB Mem : 52781283+total, 15956684 free, 23306942+used, 27878672+buff/cache
    KiB Swap: 0 total, 0 free, 0 used. 29171699+avail Mem

      PID USER      PR  NI   VIRT   RES    SHR S  %CPU %MEM     TIME+ COMMAND
    82566 elastic+  20   0  73.7g 44.6g 126220 S  5456  8.9 630454:26 java
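The top summary can be reduced to two numbers: the share of busy CPU time spent in the kernel, and the 1-minute load per vCore. A minimal Python sketch, assuming the procps top line format shown above; the function names are hypothetical and the vCore count is taken from the hardware list in this thread.

```python
from __future__ import annotations

import re


def parse_top_cpu(line: str) -> dict[str, float]:
    """Parse top's '%Cpu(s):' summary into {'us': 12.9, 'sy': 83.7, ...}."""
    _, _, body = line.partition(":")
    return {name: float(value) for value, name in re.findall(r"([\d.]+)\s+(\w+)", body)}


def parse_load_average(line: str) -> tuple[float, float, float]:
    """Extract the 1-, 5- and 15-minute load averages from top's first line."""
    m = re.search(r"load average:\s*([\d.]+),\s*([\d.]+),\s*([\d.]+)", line)
    if m is None:
        raise ValueError("no load average in line")
    one, five, fifteen = (float(x) for x in m.groups())
    return one, five, fifteen


cpu = parse_top_cpu("%Cpu(s): 12.9 us, 83.7 sy, 0.0 ni, 3.4 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st")
busy = cpu["us"] + cpu["sy"] + cpu["ni"]
kernel_share = cpu["sy"] / busy  # fraction of busy CPU time spent in the kernel
load1, _, _ = parse_load_average(
    "top - 09:23:31 up 66 days, 19:12, 2 users, load average: 58.18, 28.24, 16.51"
)
load_per_vcore = load1 / 40  # 40 vCores, as stated in the hardware list
print(f"kernel share of busy CPU: {kernel_share:.1%}, 1-min load per vCore: {load_per_vcore:.2f}")
```

With the numbers from this post, about 86.6% of busy CPU time is kernel time, and the 1-minute load is about 1.45 per vCore.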

    Samples: 24K of event 'cycles:ppp', Event count (approx.): 16945917753

    Overhead  Shared Object   Symbol
      86.59%  [kernel]        [k] native_queued_spin_lock_slowpath
       8.26%  [kernel]        [k] cpu_idle_poll
       0.35%  [kernel]        [k] _raw_spin_unlock_irqrestore
       0.21%  [kernel]        [k] compact_checklock_irqsave.isra.24
       0.17%  [kernel]        [k] _raw_spin_lock_irqsave
       0.12%  perf-80906.map  [.] 0x00002b16ff2e2d13
       0.11%  perf-80906.map  [.] 0x00002b16ff2e2fe7
       0.11%  perf-80906.map  [.] 0x00002b16ff2e2ec0
       0.11%  [kernel]        [k] isolate_migratepages_range
       0.09%  [kernel]        [k] change_pte_range
       0.08%  perf-80906.map  [.] 0x00002b16ff2e2fe3
       0.07%  [kernel]        [k] mem_cgroup_page_lruvec
       0.07%  perf-80906.map  [.] 0x00002b16fcc02086
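Summing the Overhead column by privilege level makes the split between kernel and user space explicit. In perf output, `[k]` marks kernel samples and `[.]` marks user-space samples; the `perf-<pid>.map` rows are addresses in JIT-compiled Java code. A minimal Python sketch, assuming the row layout shown above (the regex and function name are hypothetical):

```python
from __future__ import annotations

import re

# One row of perf top / perf report output: "86.59% [kernel] [k] symbol".
ROW = re.compile(r"\s*(?P<pct>[\d.]+)%\s+(?P<dso>\S+)\s+\[(?P<mode>[k.])\]\s+(?P<sym>\S+)")


def overhead_by_mode(lines: list[str]) -> dict[str, float]:
    """Sum the Overhead column separately for kernel ([k]) and user-space ([.]) rows."""
    totals = {"kernel": 0.0, "user": 0.0}
    for line in lines:
        m = ROW.match(line)
        if m:  # header and summary lines do not match and are skipped
            totals["kernel" if m["mode"] == "k" else "user"] += float(m["pct"])
    return totals


rows = """\
Overhead Shared Object Symbol
86.59% [kernel] [k] native_queued_spin_lock_slowpath
8.26% [kernel] [k] cpu_idle_poll
0.35% [kernel] [k] _raw_spin_unlock_irqrestore
0.21% [kernel] [k] compact_checklock_irqsave.isra.24
0.17% [kernel] [k] _raw_spin_lock_irqsave
0.12% perf-80906.map [.] 0x00002b16ff2e2d13
0.11% perf-80906.map [.] 0x00002b16ff2e2fe7
0.11% perf-80906.map [.] 0x00002b16ff2e2ec0
0.11% [kernel] [k] isolate_migratepages_range
0.09% [kernel] [k] change_pte_range
0.08% perf-80906.map [.] 0x00002b16ff2e2fe3
0.07% [kernel] [k] mem_cgroup_page_lruvec
0.07% perf-80906.map [.] 0x00002b16fcc02086
"""
totals = overhead_by_mode(rows.splitlines())
print(f"kernel: {totals['kernel']:.2f}%  user: {totals['user']:.2f}%")
```

For the rows in this post that is about 95.85% kernel versus 0.49% user space. The cpu_idle_poll row (8.26%) is idle polling rather than real work, so native_queued_spin_lock_slowpath alone accounts for most of the busy time.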

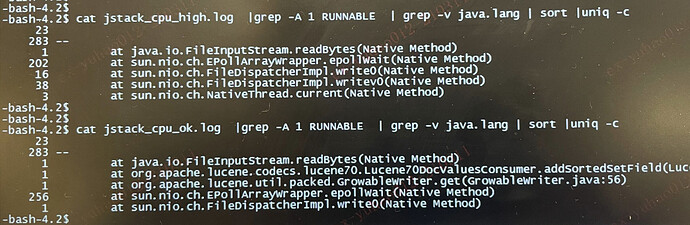

I found that the problem seems to be related to the operating system. In the following environment, when bulk-writing data, the problem appears on the node that receives the write requests:

CentOS Linux release 7.6.1810

Kernel: 3.10.0-957.el7.x86_64
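The perf symbols compact_checklock_irqsave and isolate_migratepages_range belong to the kernel's memory compaction code, and compaction under heavy spin lock contention is a commonly reported side effect of transparent huge pages (THP) on 3.10-era kernels. That is a hypothesis to check, not something this thread confirms. A minimal Python sketch, assuming the standard sysfs location /sys/kernel/mm/transparent_hugepage (the helper names are hypothetical), reads which THP modes are active:

```python
from __future__ import annotations

from pathlib import Path

# Assumed standard sysfs location of the THP settings; not verified on the affected host.
THP_DIR = Path("/sys/kernel/mm/transparent_hugepage")


def active_setting(raw: str) -> str:
    """Return the bracketed (active) choice, e.g. 'always' from '[always] madvise never'."""
    for token in raw.split():
        if token.startswith("[") and token.endswith("]"):
            return token[1:-1]
    raise ValueError(f"no active value in {raw!r}")


def read_thp_settings(base: Path = THP_DIR) -> dict[str, str]:
    """Read the active 'enabled' and 'defrag' THP modes from sysfs (Linux only)."""
    return {name: active_setting((base / name).read_text()) for name in ("enabled", "defrag")}


print(active_setting("[always] madvise never"))  # parsing only; does not touch sysfs
```

Calling read_thp_settings() on the node that receives the bulk requests shows whether THP and its defrag mode are active there; whether changing them removes the spin lock contention is something to test, not something established here.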
