Can you go through this and let me know if there are any updates?

Can you please provide the full output of the cluster stats API?
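For reference, here is a minimal Python sketch of one way to pull that output with only the standard library. It assumes a node reachable at `http://localhost:9200` with no authentication or TLS configured. The function names `cluster_stats_url` and `fetch_cluster_stats` are hypothetical helpers, not part of any Elasticsearch client. The `human` parameter adds the readable sizes (such as `"104.5gb"`) alongside the `*_in_bytes` fields.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen


def cluster_stats_url(base_url: str = "http://localhost:9200") -> str:
    """Build the _cluster/stats URL (hypothetical helper; assumes a plain HTTP endpoint)."""
    # human=true adds readable sizes next to the *_in_bytes fields; pretty=true indents the JSON.
    query = urlencode({"human": "true", "pretty": "true"})
    return f"{base_url.rstrip('/')}/_cluster/stats?{query}"


def fetch_cluster_stats(base_url: str = "http://localhost:9200", timeout: float = 10.0) -> dict:
    """GET _cluster/stats and decode the JSON body (assumes no auth is required)."""
    with urlopen(cluster_stats_url(base_url), timeout=timeout) as resp:
        return json.load(resp)
```

Calling `fetch_cluster_stats()["status"]` against a healthy cluster returns the same `"status"` value shown in the output below. The equivalent request from Kibana Console is `GET _cluster/stats?human&pretty`.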

    {
      "_nodes": {
        "total": 3,
        "successful": 3,
        "failed": 0
      },
      "cluster_name": "XXXXXXXX",
      "timestamp": 1528894949232,
      "status": "green",
      "indices": {
        "count": 26,
        "shards": {
          "total": 178,
          "primaries": 86,
          "replication": 1.069767441860465,
          "index": {
            "shards": {
              "min": 6,
              "max": 10,
              "avg": 6.846153846153846
            },
            "primaries": {
              "min": 3,
              "max": 5,
              "avg": 3.3076923076923075
            },
            "replication": {
              "min": 1,
              "max": 2,
              "avg": 1.0769230769230769
            }
          }
        },
        "docs": {
          "count": 3565594,
          "deleted": 213371
        },
        "store": {
          "size": "104.5gb",
          "size_in_bytes": 112247772532,
          "throttle_time": "0s",
          "throttle_time_in_millis": 0
        },
        "fielddata": {
          "memory_size": "1.1mb",
          "memory_size_in_bytes": 1199920,
          "evictions": 0
        },
        "query_cache": {
          "memory_size": "71.3mb",
          "memory_size_in_bytes": 74815152,
          "total_count": 280366128,
          "hit_count": 89906010,
          "miss_count": 190460118,
          "cache_size": 52293,
          "cache_count": 315282,
          "evictions": 262989
        },
        "completion": {
          "size": "0b",
          "size_in_bytes": 0
        },
        "segments": {
          "count": 1238,
          "memory": "173.6mb",
          "memory_in_bytes": 182122800,
          "terms_memory": "134.8mb",
          "terms_memory_in_bytes": 141404856,
          "stored_fields_memory": "7.7mb",
          "stored_fields_memory_in_bytes": 8122984,
          "term_vectors_memory": "466.5kb",
          "term_vectors_memory_in_bytes": 477704,
          "norms_memory": "10.3mb",
          "norms_memory_in_bytes": 10823936,
          "points_memory": "1.2mb",
          "points_memory_in_bytes": 1261336,
          "doc_values_memory": "19.1mb",
          "doc_values_memory_in_bytes": 20031984,
          "index_writer_memory": "0b",
          "index_writer_memory_in_bytes": 0,
          "version_map_memory": "0b",
          "version_map_memory_in_bytes": 0,
          "fixed_bit_set": "236.7kb",
          "fixed_bit_set_memory_in_bytes": 242480,
          "max_unsafe_auto_id_timestamp": -1,
          "file_sizes": {}
        }
      },
      "nodes": {
        "count": {
          "total": 3,
          "data": 3,
          "coordinating_only": 0,
          "master": 3,
          "ingest": 3
        },
        "versions": [
          "5.1.2"
        ],
        "os": {
          "available_processors": 48,
          "allocated_processors": 48,
          "names": [
            {
              "name": "Linux",
              "count": 3
            }
          ],
          "mem": {
            "total": "90.2gb",
            "total_in_bytes": 96943034368,
            "free": "1.4gb",
            "free_in_bytes": 1567109120,
            "used": "88.8gb",
            "used_in_bytes": 95375925248,
            "free_percent": 2,
            "used_percent": 98
          }
        },
        "process": {
          "cpu": {
            "percent": 16
          },
          "open_file_descriptors": {
            "min": 955,
            "max": 962,
            "avg": 958
          }
        },
        "jvm": {
          "max_uptime": "21.1h",
          "max_uptime_in_millis": 76133306,
          "versions": [
            {
              "version": "1.8.0_45",
              "vm_name": "Java HotSpot(TM) 64-Bit Server VM",
              "vm_version": "25.45-b02",
              "vm_vendor": "Oracle Corporation",
              "count": 3
            }
          ],
          "mem": {
            "heap_used": "18gb",
            "heap_used_in_bytes": 19396256344,
            "heap_max": "47.6gb",
            "heap_max_in_bytes": 51199475712
          },
          "threads": 561
        },
        "fs": {
          "total": "885.4gb",
          "total_in_bytes": 950796226560,
          "free": "625.9gb",
          "free_in_bytes": 672104439808,
          "available": "625.6gb",
          "available_in_bytes": 671796477952
        },
        "plugins": [
          {
            "name": "analysis-icu",
            "version": "5.1.2",
            "description": "The ICU Analysis plugin integrates Lucene ICU module into elasticsearch, adding ICU relates analysis components.",
            "classname": "org.elasticsearch.plugin.analysis.icu.AnalysisICUPlugin"
          }
        ],
        "network_types": {
          "transport_types": {
            "netty4": 3
          },
          "http_types": {
            "netty4": 3
          }
        }
      }
    }

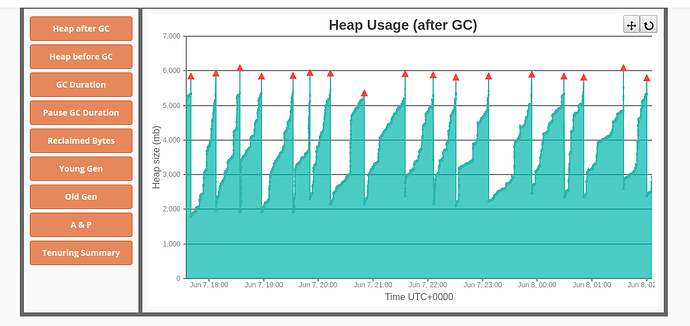

That looks fine. Can you zoom in on the GC graph you provided and post it here? It would be good to see the pattern and GC frequency over a shorter time period, e.g. an hour or two.
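A few headline ratios can be read straight off the cluster stats output above. The Python sketch below computes them from a hand-copied subset of those numbers. Note that `_cluster/stats` reports cluster-wide totals summed over all three nodes, so an aggregate heap percentage can hide one node running hotter than the others. `SAMPLE_STATS`, `pct` and `summarize` are illustrative names, not part of any Elasticsearch API.

```python
# Subset of the cluster stats output posted above (only the fields used here).
SAMPLE_STATS = {
    "indices": {
        "query_cache": {
            "total_count": 280366128,
            "hit_count": 89906010,
            "miss_count": 190460118,
        },
    },
    "nodes": {
        "jvm": {"mem": {"heap_used_in_bytes": 19396256344, "heap_max_in_bytes": 51199475712}},
        "fs": {"total_in_bytes": 950796226560, "available_in_bytes": 671796477952},
    },
}


def pct(part: float, whole: float) -> float:
    """Percentage of part in whole, returning 0.0 when whole is zero."""
    return 100.0 * part / whole if whole else 0.0


def summarize(stats: dict) -> dict:
    """Headline ratios from a _cluster/stats response (aggregated across nodes)."""
    heap = stats["nodes"]["jvm"]["mem"]
    qc = stats["indices"]["query_cache"]
    fs = stats["nodes"]["fs"]
    return {
        "heap_used_pct": pct(heap["heap_used_in_bytes"], heap["heap_max_in_bytes"]),
        "query_cache_hit_pct": pct(qc["hit_count"], qc["total_count"]),
        "disk_available_pct": pct(fs["available_in_bytes"], fs["total_in_bytes"]),
    }


if __name__ == "__main__":
    for name, value in summarize(SAMPLE_STATS).items():
        print(f"{name}: {value:.1f}%")
```

Running it prints `heap_used_pct: 37.9%`, `query_cache_hit_pct: 32.1%` and `disk_available_pct: 70.7%`. A single snapshot like this says nothing about how heap behaves between collections, which is why the GC graph over time matters.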

It doesn't look too bad, and GC isn't exceptionally frequent, but I can see the baseline is creeping upwards. Several memory leaks have been fixed since the release you are running (5.1.2), so I would recommend upgrading to version 5.6.9.
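The "baseline" in a heap-usage graph is the floor of the sawtooth: the heap level right after each collection. If that floor keeps rising, something is surviving GC and accumulating. The sketch below is a hypothetical illustration of checking for this trend. It finds the post-GC troughs in a series of heap samples and fits a least-squares slope to them. The sample values are invented for the example, and the function names are illustrative. Real input would come from GC logs or monitoring data.

```python
from __future__ import annotations

from typing import Sequence


def gc_troughs(heap_used: Sequence[float]) -> list[tuple[int, float]]:
    """(index, value) of each local minimum, i.e. the heap level just after a collection."""
    return [
        (i, heap_used[i])
        for i in range(1, len(heap_used) - 1)
        if heap_used[i] < heap_used[i - 1] and heap_used[i] <= heap_used[i + 1]
    ]


def baseline_slope(troughs: Sequence[tuple[int, float]]) -> float:
    """Ordinary least-squares slope of trough value against sample index (units per sample)."""
    if len(troughs) < 2:
        raise ValueError("need at least two post-GC troughs to fit a trend")
    n = len(troughs)
    mean_x = sum(x for x, _ in troughs) / n
    mean_y = sum(y for _, y in troughs) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in troughs)
    den = sum((x - mean_x) ** 2 for x, _ in troughs)
    return num / den


# Hypothetical heap-used samples in GB: a sawtooth whose floor rises after each GC.
SAMPLE_HEAP_GB = [4, 8, 12, 5, 9, 13, 6, 10, 14, 7, 11]

if __name__ == "__main__":
    troughs = gc_troughs(SAMPLE_HEAP_GB)
    print(troughs)                  # [(3, 5), (6, 6), (9, 7)]
    print(baseline_slope(troughs))  # 0.3333333333333333
```

A clearly positive slope over a long enough window matches the "base creeping upwards" pattern. A slope near zero means each collection returns heap to roughly the same level.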

I can see 2.2 GB of unreachable objects in one heap dump, and 2.7 GB of unreachable objects in a later one.

Sounds like you may be affected by one of the memory leaks that has since been fixed, which is why I am recommending upgrading.
