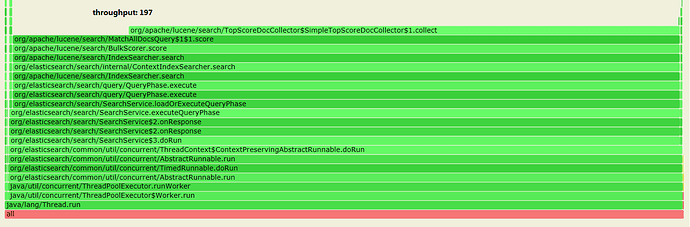

I am using Rally to benchmark Elasticsearch (ES) with the geonames track. Every time ES starts, I run several match_all tests to warm it up and get the best throughput. Even so, the throughput varies between 45 and 200 ops/s across test runs. Here is the environment configuration.
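One way to quantify the run-to-run spread is to record the median throughput Rally reports for each race and summarize those values. The sketch below is a hypothetical helper (`summarize_runs` is not part of Rally). It is seeded only with the 45 and 200 ops/s extremes mentioned above, so the per-run values from your own races would need to be appended.

```python
import statistics
from typing import Sequence


def summarize_runs(throughputs: Sequence[float]) -> dict:
    """Summarize per-race throughput values (ops/s), one value per Rally race."""
    if len(throughputs) < 2:
        raise ValueError("need at least two runs to measure spread")
    mean = statistics.mean(throughputs)
    return {
        "runs": len(throughputs),
        "min": min(throughputs),
        "max": max(throughputs),
        "median": statistics.median(throughputs),
        # How many times faster the best run was than the worst one.
        "max_min_ratio": max(throughputs) / min(throughputs),
        # Coefficient of variation: sample standard deviation relative to the mean.
        "cv": statistics.stdev(throughputs) / mean,
    }


if __name__ == "__main__":
    # Only the two extremes from the post; add the remaining per-run values here.
    print(summarize_runs([45.0, 200.0]))
```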

Rally

- version 1.0.0

operations

```json
{
  "name": "default",
  "operation-type": "search",
  "body": {
    "query": {
      "match_all": {}
    }
  }
}
```
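To check a single request outside Rally (for example, to compare Elasticsearch's own `took` time with Rally's service time), the same match_all body can be sent directly. This is a minimal sketch using only the Python standard library. It assumes ES listens on `localhost:9200` and that the geonames track's index is named `geonames`; both constants and the helper names are illustrative, not part of Rally.

```python
import json
import urllib.request

# Assumptions: local single-node cluster and the geonames track's index name.
ES_URL = "http://localhost:9200"
INDEX = "geonames"

# Same body as the "default" operation in the track excerpt.
MATCH_ALL_BODY = {"query": {"match_all": {}}}


def build_search_request(base_url: str, index: str, body: dict) -> urllib.request.Request:
    """Build (but do not send) a POST /<index>/_search request with a JSON body."""
    return urllib.request.Request(
        url=f"{base_url}/{index}/_search",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def took_ms(base_url: str = ES_URL, index: str = INDEX) -> int:
    """Send one match_all search and return the server-side "took" time in ms."""
    req = build_search_request(base_url, index, MATCH_ALL_BODY)
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)["took"]
```

Calling `took_ms()` a few times against the warmed-up node gives the server-side latency, which can then be compared with the service time Rally reports for the same operation.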

challenges

```json
"schedule": [
  {
    "operation": "default",
    "clients": 4,
    "warmup-iterations": 500,
    "iterations": 1000,
    "target-throughput": 210
  }
]
```
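Assuming the schedule is read as the Rally documentation describes it (warmup and measurement iterations counted per client, and `target-throughput` being the rate summed across all clients), the numbers above imply the following. Treat the per-client reading as an assumption to verify against the Rally 1.0.0 track reference; `Schedule` and `describe` are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class Schedule:
    clients: int
    warmup_iterations: int    # per client (assumed, per Rally's track docs)
    iterations: int           # per client (assumed)
    target_throughput: float  # ops/s summed over all clients


def describe(s: Schedule) -> dict:
    per_client_rate = s.target_throughput / s.clients  # ops/s each client aims for
    warmup_requests = s.clients * s.warmup_iterations
    measured_requests = s.clients * s.iterations
    return {
        "per_client_ops_per_s": per_client_rate,
        "per_client_interval_ms": 1000.0 / per_client_rate,
        "warmup_requests": warmup_requests,
        "measured_requests": measured_requests,
        # Lower bounds on phase duration, reached only if the target rate is met.
        "min_warmup_s": warmup_requests / s.target_throughput,
        "min_measured_s": measured_requests / s.target_throughput,
    }


if __name__ == "__main__":
    print(describe(Schedule(clients=4, warmup_iterations=500,
                            iterations=1000, target_throughput=210)))
```

Under this reading each client paces itself at 52.5 ops/s (one request roughly every 19 ms), and even a run that meets the target spends only about 9.5 s warming up and about 19 s measuring.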

Hardware & OS

- Intel(R) Xeon(R) Gold 6148 CPU @ 2.40GHz
- Memory: 100 GB per NUMA node
- SSD
- Linux kernel 3.10.0
- ES bound to 4 cores using numactl
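Since ES is pinned to 4 cores, it may be worth confirming which CPUs the ES process can actually use and what search thread pool size that implies. The sketch below assumes Linux (`os.sched_getaffinity`) and the Elasticsearch 6.x default for the `search` pool, `int((allocated_processors * 3) / 2) + 1`. It also assumes the JVM reports the affinity-restricted CPU count as its available processors, which depends on the JDK version; `allocated_processors` from `GET _nodes/os` is the authoritative value. The function names are illustrative.

```python
import os


def allowed_cpus(pid: int) -> set:
    """CPUs the given process may run on (Linux only); reflects numactl/taskset pinning."""
    return os.sched_getaffinity(pid)


def default_search_pool_size(allocated_processors: int) -> int:
    # Elasticsearch 6.x default size of the "search" thread pool:
    # int((allocated_processors * 3) / 2) + 1
    return (allocated_processors * 3) // 2 + 1
```

For example, `default_search_pool_size(len(allowed_cpus(es_pid)))`, where `es_pid` is the ES process ID, gives 7 search threads if exactly 4 CPUs are allowed.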

JVM

- -Xms8g

- -Xmx8g

- -XX:NewRatio=2

- -XX:+UseConcMarkSweepGC
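With `-Xms8g -Xmx8g` the heap is fixed at 8 GiB, and `-XX:NewRatio=2` nominally sets the old:young ratio to 2:1, so the young generation (eden plus both survivor spaces) gets about a third of the heap. A quick check of that arithmetic, assuming HotSpot honors the explicit `NewRatio` under CMS (`generation_sizes_mib` is an illustrative helper):

```python
def generation_sizes_mib(heap_mib: float, new_ratio: int) -> tuple:
    """Split a fixed-size heap according to -XX:NewRatio (old/young = new_ratio)."""
    young = heap_mib / (new_ratio + 1)
    old = heap_mib - young
    return young, old


# -Xms8g/-Xmx8g => 8 * 1024 MiB; -XX:NewRatio=2
young_mib, old_mib = generation_sizes_mib(8 * 1024, 2)
print(f"young ~ {young_mib:.0f} MiB, old ~ {old_mib:.0f} MiB")
```

That is roughly 2.7 GiB young and 5.3 GiB old; the GC log or `jstat -gccapacity` shows the sizes the JVM actually chose.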

ES

- version 6.2.3

```
$ curl -XGET 'http://localhost:9200/_cluster/stats?pretty'
{
  "_nodes" : {
    "total" : 1,
    "successful" : 1,
    "failed" : 0
  },
  "cluster_name" : "elasticsearch",
  "timestamp" : 1553929322953,
  "status" : "green",
  "indices" : {
    "count" : 1,
    "shards" : {
      "total" : 5,
      "primaries" : 5,
      "replication" : 0.0,
      "index" : {
        "shards" : {
          "min" : 5,
          "max" : 5,
          "avg" : 5.0
        },
        "primaries" : {
          "min" : 5,
          "max" : 5,
          "avg" : 5.0
        },
        "replication" : {
          "min" : 0.0,
          "max" : 0.0,
          "avg" : 0.0
        }
      }
    },
    "docs" : {
      "count" : 10320000,
      "deleted" : 0
    },
    "store" : {
      "size_in_bytes" : 2795283680
    },
...
```
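The stats describe a single index with 5 primary shards and no replicas, holding 10,320,000 docs in about 2.6 GiB. Averaged over the primaries, that is 2,064,000 docs and about 533 MiB per shard. The sketch below derives these averages from the fields shown above (copied into `SAMPLE`); it assumes docs and bytes are spread evenly across shards, which `_cluster/stats` does not guarantee.

```python
import json

# Field values copied from the _cluster/stats output, trimmed to the fields used.
SAMPLE = """{"indices": {"shards": {"primaries": 5},
                         "docs": {"count": 10320000},
                         "store": {"size_in_bytes": 2795283680}}}"""


def per_shard_summary(stats: dict) -> dict:
    """Average size and doc count per primary shard (no replicas in this cluster)."""
    idx = stats["indices"]
    primaries = idx["shards"]["primaries"]
    docs = idx["docs"]["count"]
    size = idx["store"]["size_in_bytes"]
    return {
        "store_gib": size / 2**30,
        "docs_per_primary": docs / primaries,
        "bytes_per_primary": size / primaries,
        "bytes_per_doc": size / docs,
    }


if __name__ == "__main__":
    print(per_shard_summary(json.loads(SAMPLE)))
```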