Hi there.

I'm now running an elasticsearch cluster with 5 nodes on Java 7 (jre1.7.0_04) using the new G1 garbage collector.

We use the latest version of elasticsearch, 0.19.8.

Here's an overview of our setup: http://www.screencast.com/t/wwW6Pcua

I use these Java settings:

# Java Additional Parameters
wrapper.java.additional.1=-Delasticsearch-service
wrapper.java.additional.2=-Des.path.home=%ES_HOME%
wrapper.java.additional.3=-Xss512k
wrapper.java.additional.4=-XX:+UnlockExperimentalVMOptions
wrapper.java.additional.5=-XX:+UseG1GC
wrapper.java.additional.6=-XX:MaxGCPauseMillis=50
wrapper.java.additional.7=-XX:GCPauseIntervalMillis=100
wrapper.java.additional.8=-XX:SurvivorRatio=16

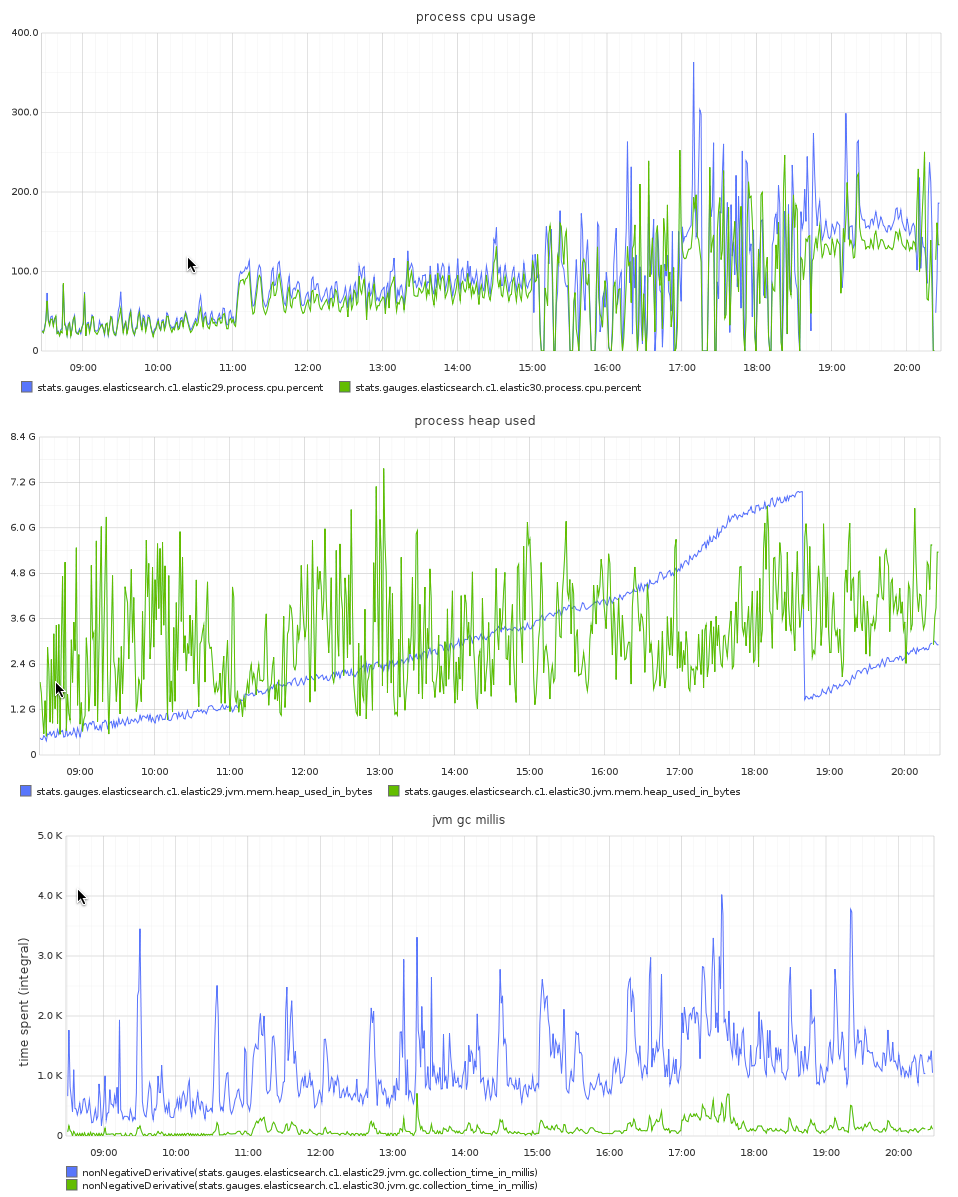

Initially the nodes run great, with an average query time of about 12 ms. The CPU load then grows slowly, at first without affecting the query time, until it eventually overwhelms the CPU. At that point the cluster becomes unstable and response times grow into the seconds. As a very ugly workaround, we simply restart the Java processes every 12 hours, in a rolling fashion across the entire cluster. After a restart, the CPU usage on that node drops very low and then starts to creep up again.

We graph everything with statsd/graphite. Here are some graphs that show the effect:

process cpu usage: http://www.screencast.com/t/dun2dpNJdPh

heap usage: http://www.screencast.com/t/7DeB2Mqp

gc count: http://www.screencast.com/t/evMK6zUPQm

gc time: http://www.screencast.com/t/9rwv9WxxBfKs

The actual workload doesn't seem to be related:

query: http://www.screencast.com/t/GgjU3h4d1

index: http://www.screencast.com/t/Ozb1d3qlx

These are EC2 m1.xlarge instances (16 GB RAM, 4 cores).

What could cause this? Maybe I'm wrong, but it doesn't look to me like garbage collection is to blame here. Compared to some values I've seen with Java 6 without G1, these GC counts are very acceptable.
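
One way to check the GC theory more directly would be to turn on GC logging through the same wrapper config and compare pause times against the CPU graph. This is only a sketch: the indexes 9 to 12 assume nothing else already uses them, and the log path under %ES_HOME% is a hypothetical location, not something from our current setup.

```properties
# Hypothetical additions for GC diagnostics (indexes and log path are assumptions)
# Print a line for every collection
wrapper.java.additional.9=-verbose:gc
# Include per-generation / per-region detail for each collection
wrapper.java.additional.10=-XX:+PrintGCDetails
# Prefix each entry with a wall-clock timestamp so it can be lined up with graphite
wrapper.java.additional.11=-XX:+PrintGCDateStamps
# Write the GC log to a file instead of stdout
wrapper.java.additional.12=-Xloggc:%ES_HOME%/logs/gc.log
```

If the logged pause times stay flat while the CPU keeps climbing, that would support the idea that something other than GC is responsible.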

Is it even recommended to run elasticsearch on Java 7? Do you use it with or without G1?

Are there any best-practice Java 7 settings someone is willing to share?

Best regards,

Toni
