Hi all,

I am using Filebeat to build a dashboard for monitoring jobs, and I see log.flags: dissect_parsing_error on the events. Can anyone please help me resolve this issue?

Hi @ssksraja, could you provide more details? I didn't understand the issue you are facing.

In the title you wrote about duplicated logs, but in the body of the topic you mentioned the "dissect_parsing_error", which will lead to events being dropped, not duplicated.

Which problem are you actually facing? Is it both at the same time?

In either case, could you provide your input configuration and the error logs you are seeing?

Hi,

Thanks for your reply. I am facing both issues:

- I need to know how to resolve the "dissect_parsing_error".

- I need to avoid duplicates in the dashboard.

Here is my input configuration file:

    - type: log
      enabled: true
      paths: ['/apps/waae/11.3/autouser.IA1/out/event_demon.IA1*']
      tags: ['autosys', 'Dev', 'XXX']
      processors:
        - add_id: ~
        - dissect:
            tokenizer: "%{sp} for %{job.name} %{job.id}.%{job.run_id}.%{job.n_try}]"
            field: "message"
            target_prefix: "event1"
            when:
              regexp:
                message: ".CAUAJM_I_10082. for [^KILLJOB].*"
        - rename:
            fields:
              - from: "event1.job.name"
                to: "event.job.name"
            ignore_missing: true
        - rename:
            fields:
              - from: "event1.job.id"
                to: "event.job.job_id"
            ignore_missing: true
        - rename:
            fields:
              - from: "event1.job.run_id"
                to: "event.job.run_id"
            ignore_missing: true
        - rename:
            fields:
              - from: "event1.job.n_try"
                to: "event.job.n_try"
            ignore_missing: true
        - drop_fields:
            fields: ["event1"]
        - dissect:
            tokenizer: "%{sp} box %{job.name} %{job.id}.%{job.run_id}.%{job.n_try}]"
            field: "message"
            target_prefix: "event1"
            when:
              regexp:
                message: ".CAUAJM_I_40245."
        - rename:
            fields:
              - from: "event1.job.name"
                to: "event.job.name"
            ignore_missing: true
        - rename:
            fields:
              - from: "event1.job.id"
                to: "event.job.box_id"
            ignore_missing: true
        - rename:
            fields:
              - from: "event1.job.run_id"
                to: "event.job.run_id"
            ignore_missing: true
        - rename:
            fields:
              - from: "event1.job.n_try"
                to: "event.job.n_try"
            ignore_missing: true
        - drop_fields:
            fields: ["event1"]
        - dissect:
            tokenizer: "%{sp}CAUAJM_I_40245%{sp}STATUS: %{job.status} %{sp}JOB: %{job.name}"
            field: "message"
            target_prefix: "event1"
            when:
              regexp:
                message: ".*CAUAJM_I_40245.STATUS."
        - dissect:
            tokenizer: "%{sp}JOB: %{job.name} %{sp}"
            field: "message"
            target_prefix: "event2"
            when:
              regexp:
                message: ".*CAUAJM_I_40245.*STATUS.MACHINE."
        - dissect:
            tokenizer: "%{sp}JOB: %{job.name} %{sp}MACHINE: %{job.machine}"
            field: "message"
            target_prefix: "event3"
            when:
              regexp:
                message: ".*CAUAJM_I_40245.*STATUS.MACHINE: ."
        - dissect:
            tokenizer: "%{sp}MACHINE: %{job.machine} %{sp}EXITCODE: %{job.exit_code}"
            field: "message"
            target_prefix: "event4"
            when:
              regexp:
                message: ".*CAUAJM_I_40245.*STATUS.EXITCODE: ."
        - rename:
            fields:
              - from: "event4.job.exit_code"
                to: "event.job.exit_code"
            ignore_missing: true
        - rename:
            fields:
              - from: "event4.job.machine"
                to: "event.job.machine"
            ignore_missing: true
        - rename:
            fields:
              - from: "event3.job.name"
                to: "event.job.name"
            ignore_missing: true
        - rename:
            fields:
              - from: "event3.job.machine"
                to: "event.job.machine"
            ignore_missing: true
            when:
              not:
                has_fields: ["event.job.machine"]
        - rename:
            fields:
              - from: "event2.job.name"
                to: "event.job.name"
            ignore_missing: true
            when:
              not:
                has_fields: ["event.job.name"]
        - rename:
            fields:
              - from: "event1.job.status"
                to: "event.job.status"
            ignore_missing: true
        - rename:
            fields:
              - from: "event1.job.name"
                to: "event.job.name"
            ignore_missing: true
            when:
              not:
                has_fields: ["event.job.name"]
        - drop_fields:
            fields: ["event1", "event2", "event3", "event4"]

@ssksraja your configuration lost most of its formatting, so it's hard to read, but I can see you have many dissect processors.

The dissect_parsing_error you're seeing means the tokenizer string you set does not match the input data (the message field on your event). You will have to check all your dissect processors to make sure they work correctly. Also check your regexps to make sure the correct dissect processor is running for each message.

If you enable debug logs for the processors logger, Filebeat will log the dissect errors at debug level.

You can add this to your Filebeat config to log the debug information from the processors:

    logging.level: debug
    logging.selectors: ["processors"]

Hi Tiago,

After adding the settings you mentioned, I got the error below, which seems to be what is causing the problem. Can you please help me debug it and find where the issue is?

Thanks in advance.

2022-08-03T18:59:05.847+0200 DEBUG [processors] processing/processors.go:128 Fail to apply processor client{add_locale=[format=offset], dissect=%{sp} for %{job.name} %{job.id}.%{job.run_id}.%{job.n_try}],field=message,target_prefix=event1, condition=regexp: map, rename=[{From:event1.job.name To:event.job.name}], rename=[{From:event1.job.id To:event.job.job_id}], rename=[{From:event1.job.run_id To:event.job.run_id}], rename=[{From:event1.job.n_try To:event.job.n_try}], drop_fields={"Fields":["event1"],"IgnoreMissing":false}, dissect=%{sp} box %{job.name} %{job.id}.%{job.run_id}.%{job.n_try}],field=message,target_prefix=event1, condition=regexp: map, rename=[{From:event1.job.name To:event.job.name}], rename=[{From:event1.job.id To:event.job.box_id}], rename=[{From:event1.job.run_id To:event.job.run_id}], rename=[{From:event1.job.n_try To:event.job.n_try}], drop_fields={"Fields":["event1"],"IgnoreMissing":false}, dissect=%{sp}CAUAJM_I_40245%{sp}STATUS: %{job.status} %{sp}JOB: %{job.name},field=message,target_prefix=event1, condition=regexp: map, dissect=%{sp}JOB: %{job.name} %{sp},field=message,target_prefix=event2, condition=regexp: map, dissect=%{sp}JOB: %{job.name} %{sp}MACHINE: %{job.machine},field=message,target_prefix=event3, condition=regexp: map, dissect=%{sp}MACHINE: %{job.machine} %{sp}EXITCODE: %{job.exit_code},field=message,target_prefix=event4, condition=regexp: map, rename=[{From:event4.job.exit_code To:event.job.exit_code}], rename=[{From:event4.job.machine To:event.job.machine}], rename=[{From:event3.job.name To:event.job.name}], rename=[{From:event3.job.machine To:event.job.machine}], condition=!has_fields: [event.job.machine], rename=[{From:event2.job.name To:event.job.name}], condition=!has_fields: [event.job.name], rename=[{From:event1.job.status To:event.job.status}], rename=[{From:event1.job.name To:event.job.name}], condition=!has_fields: [event.job.name], drop_fields={"Fields":["event1","event2","event3","event4"],"IgnoreMissing":false}}: 
failed to drop field [event1]: key not found; failed to drop field [event2]: key not found; failed to drop field [event3]: key not found; failed to drop field [event4]: key not found

Hi @ssksraja,

Whenever you post some code/logs, please use the Preformatted Text option (highlight the code block then press Ctrl + E, or the equivalent for your OS). It is a bit hard to read the whole log entry; some of its content got converted into tick-boxes.

If you read the whole line carefully, after some internal representation of the processors, you get the error:

failed to drop field [event1]: key not found; failed to drop field [event2]: key not found; failed to drop field [event3]: key not found; failed to drop field [event4]: key not found

It seems those fields do not exist in your event.

You have a huge list of processors and different conditions to run them. My suggestion is to take a few log entries as a test dataset and test processor by processor. That way you know exactly which processor is failing and in which case. After you get all processors working for one of your log formats, you can start from the beginning with another format.

It might seem like a laborious process, but it will help you to spot issues very easily and to know exactly which processor/regexp is failing.
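One way to test a single processor in isolation is a throwaway config that reads sample lines from standard input and prints the resulting events to the console. This is only a sketch: the file name `test-dissect.yml` is hypothetical, the tokenizer is copied from one of the dissect processors posted above, and it assumes the `stdin` input and `console` output are available in the installed Filebeat version.

```yaml
# test-dissect.yml (hypothetical name): exercise ONE processor at a time.
filebeat.inputs:
  - type: stdin          # read sample log lines piped into Filebeat

processors:
  # Swap in the processor under test; this tokenizer is taken from the thread.
  - dissect:
      tokenizer: "%{sp}JOB: %{job.name} %{sp}MACHINE: %{job.machine}"
      field: "message"
      target_prefix: "event3"

output.console:
  pretty: true           # print each resulting event as readable JSON

# Same debug settings suggested earlier in the thread.
logging.level: debug
logging.selectors: ["processors"]
```

Running something like `filebeat -e -c test-dissect.yml < sample.log` (where `sample.log` is a hypothetical file holding a few representative lines) should then show, for each line, either the extracted fields or the dissect error in the debug output.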

Hi, thanks for the reply. Yes, as per the requirement, not all log lines will contain these fields; only a few lines will. I have now added ignore_missing: true to drop_fields, i.e.

    - drop_fields:
        fields: ["event1", "event2", "event3", "event4"]
        ignore_missing: true

{sp} for %{job.name} %{job.id}.%{job.run_id}.%{job.n_try}],field=message,target_prefix=event1, condition=regexp: map[], rename=[{From:event1.job.name To:event.job.name}], rename=[{From:event1.job.id To:event.job.job_id}], rename=[{From:event1.job.run_id To:event.job.run_id}], rename=[{From:event1.job.n_try To:event.job.n_try}], drop_fields={"Fields":["event1"],"IgnoreMissing":true}, dissect=%{sp} box %{job.name} %{job.id}.%{job.run_id}.%{job.n_try}],field=message,target_prefix=event1, condition=regexp: map[], rename=[{From:event1.job.name To:event.job.name}], rename=[{From:event1.job.id To:event.job.box_id}], rename=[{From:event1.job.run_id To:event.job.run_id}], rename=[{From:event1.job.n_try To:event.job.n_try}], drop_fields={"Fields":["event1"],"IgnoreMissing":true}, dissect=%{sp}CAUAJM_I_40245%{sp}STATUS: %{job.status} %{sp}JOB: %{job.name},field=message,target_prefix=event1, condition=regexp: map[], dissect=%{sp}JOB: %{job.name} %{sp},field=message,target_prefix=event2, condition=regexp: map[], dissect=%{sp}JOB: %{job.name} %{sp}MACHINE: %{job.machine},field=message,target_prefix=event3, condition=regexp: map[], dissect=%{sp}MACHINE: %{job.machine} %{sp}EXITCODE: %{job.exit_code},field=message,target_prefix=event4, condition=regexp: map[], rename=[{From:event4.job.exit_code To:event.job.exit_code}], rename=[{From:event4.job.machine To:event.job.machine}], rename=[{From:event3.job.name To:event.job.name}], rename=[{From:event3.job.machine To:event.job.machine}], condition=!has_fields: [event.job.machine], rename=[{From:event2.job.name To:event.job.name}], condition=!has_fields: [event.job.name], rename=[{From:event1.job.status To:event.job.status}], rename=[{From:event1.job.name To:event.job.name}], condition=!has_fields: [event.job.name], drop_fields={"Fields":["event1","event2","event3","event4"],"IgnoreMissing":true}}: could not find delimiter: `` in remaining: `[2022/08/04 00:47:32] CAUAJM_I_40245 EVENT: CHANGE_STATUS STATUS: RUNNING JOB: 
IPALP11-Q1BOX-FND-LOAD MACHINE:`, (offset: 0)

Now I am getting the error above. Can you please help me resolve this issue?

@ssksraja you need to carefully read the log messages to understand why the processors are failing. Because you have at least 4 different dissect processors configured, you might have to iterate a few times until you get it all working.

If you look at the log message you posted, the error is at the end:

could not find delimiter: `` in remaining: `[2022/08/04 00:47:32] CAUAJM_I_40245 EVENT: CHANGE_STATUS STATUS: RUNNING JOB: IPALP11-Q1BOX-FND-LOAD MACHINE:`

So the tokenizer expression and the message do not match.
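As a hedged illustration of narrowing down such a mismatch: the line quoted in the error ends at `MACHINE:`, so a tokenizer that expects a value after `MACHINE: ` or an `EXITCODE: ` section may have nothing to match on it. The sketch below guards one of the posted dissect processors so that it only runs when both literal delimiters are present. The `contains` conditions are a substitute for the original regexp, not the poster's configuration, and `ignore_failure` is a dissect option whose availability depends on the Filebeat version, so treat both as assumptions to verify.

```yaml
processors:
  - dissect:
      when:
        and:
          # Only run when both literal delimiters appear in the line.
          - contains:
              message: "MACHINE: "
          - contains:
              message: "EXITCODE: "
      tokenizer: "%{sp}MACHINE: %{job.machine} %{sp}EXITCODE: %{job.exit_code}"
      field: "message"
      target_prefix: "event4"
      # Assumption: supported by the installed version. Lets later
      # processors run even if this tokenizer still fails to match.
      ignore_failure: true
```

The design idea is that the condition should be at least as strict as the tokenizer: if a line can pass the condition without containing every delimiter in the tokenizer, the dissect processor will run and fail on it.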

Also make sure your conditions are correctly indented (because your config lost its formatting, I can't tell whether it's correct or not).

Here is an example of setting the conditions to run a processor using regexp: Filter and enhance data with processors | Filebeat Reference [8.3] | Elastic

Here is the overall documentation on defining processors and their conditions: Define processors | Filebeat Reference [8.3] | Elastic
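For reference, a minimal sketch of the indentation a conditional processor is expected to have. The tokenizer and regexp are copied as posted in this thread (the regexp may itself have been altered by the forum formatting), so only the structure is the point here: `when` sits at the same level as the processor's own settings, under the processor name.

```yaml
processors:
  - dissect:
      # Processor settings, nested under the processor name.
      tokenizer: "%{sp} for %{job.name} %{job.id}.%{job.run_id}.%{job.n_try}]"
      field: "message"
      target_prefix: "event1"
      # Condition, at the same level as the settings above.
      when:
        regexp:
          message: ".CAUAJM_I_10082. for [^KILLJOB].*"
```

If `when` ends up one level too shallow (a sibling of `dissect` rather than nested under it), it is no longer part of that processor's configuration.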

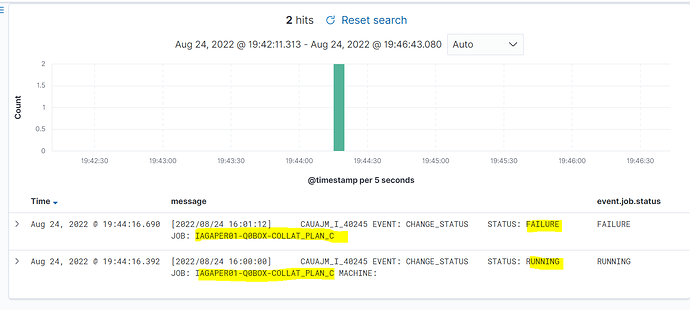

@TiagoQueiroz Thanks for your reply. Now I am getting duplicate records in the dashboard: it shows every status of each job, but I need to show only one record per job.

Can you please help me fix this? Thanks in advance.

Regards

Raja s

Filebeat will ship every log entry to Elasticsearch; that is the expected behaviour. You can try to do some filtering in Kibana to only return the latest entry.
