Hi,

Thanks for your quick response. Here are some sample events:

```json
{"message":"<189>227601: *Jul 10 13:12:52.119 UTC: %SSH-5-SSH2_SESSION: SSH2 Session request from 10.200.250.20 (tty = 0) using crypto cipher 'aes128-ctr', hmac 'hmac-sha2-256-etm@openssh.com' Succeeded","@version":"1","tags":["_jsonparsefailure","_grokparsefailure"],"host":{"ip":"10.200.226.59"},"@timestamp":"2025-07-10T13:07:18.318901969Z","event":{"original":"<189>227601: *Jul 10 13:12:52.119 UTC: %SSH-5-SSH2_SESSION: SSH2 Session request from 10.200.250.20 (tty = 0) using crypto cipher 'aes128-ctr', hmac 'hmac-sha2-256-etm@openssh.com' Succeeded"},"type":"syslog"}
{"message":"<189>227603: *Jul 10 13:12:52.167 UTC: %SSH-5-SSH2_USERAUTH: User 't_dnac' authentication for SSH2 Session from 10.200.250.20 (tty = 0) using crypto cipher 'aes128-ctr', hmac 'hmac-sha2-256-etm@openssh.com' Succeeded","@version":"1","tags":["_jsonparsefailure","_grokparsefailure"],"host":{"ip":"10.200.226.59"},"@timestamp":"2025-07-10T13:07:18.319350624Z","event":{"original":"<189>227603: *Jul 10 13:12:52.167 UTC: %SSH-5-SSH2_USERAUTH: User 't_dnac' authentication for SSH2 Session from 10.200.250.20 (tty = 0) using crypto cipher 'aes128-ctr', hmac 'hmac-sha2-256-etm@openssh.com' Succeeded"},"type":"syslog"}
{"message":"<189>227602: *Jul 10 13:12:52.167 UTC: %SEC_LOGIN-5-LOGIN_SUCCESS: Login Success [user: t_dnac] [Source: 10.200.250.20] [localport: 22] at 13:12:52 UTC Thu Jul 10 2025","@version":"1","tags":["_jsonparsefailure","_grokparsefailure"],"host":{"ip":"10.200.226.59"},"@timestamp":"2025-07-10T13:07:18.318984443Z","event":{"original":"<189>227602: *Jul 10 13:12:52.167 UTC: %SEC_LOGIN-5-LOGIN_SUCCESS: Login Success [user: t_dnac] [Source: 10.200.250.20] [localport: 22] at 13:12:52 UTC Thu Jul 10 2025"},"type":"syslog"}
{"message":"<189>227601: *Jul 10 13:12:52.119 UTC: %SSH-5-SSH2_SESSION: SSH2 Session request from 10.200.250.20 (tty = 0) using crypto cipher 'aes128-ctr', hmac 'hmac-sha2-256-etm@openssh.com' Succeeded","@version":"1","tags":["_jsonparsefailure","_grokparsefailure"],"host":{"ip":"10.200.226.59"},"@timestamp":"2025-07-10T13:07:18.318901969Z","event":{"original":"<189>227601: *Jul 10 13:12:52.119 UTC: %SSH-5-SSH2_SESSION: SSH2 Session request from 10.200.250.20 (tty = 0) using crypto cipher 'aes128-ctr', hmac 'hmac-sha2-256-etm@openssh.com' Succeeded"},"type":"syslog"}
{"message":"<189>227603: *Jul 10 13:12:52.167 UTC: %SSH-5-SSH2_USERAUTH: User 't_dnac' authentication for SSH2 Session from 10.200.250.20 (tty = 0) using crypto cipher 'aes128-ctr', hmac 'hmac-sha2-256-etm@openssh.com' Succeeded","@version":"1","tags":["_jsonparsefailure","_grokparsefailure"],"host":{"ip":"10.200.226.59"},"@timestamp":"2025-07-10T13:07:18.319350624Z","event":{"original":"<189>227603: *Jul 10 13:12:52.167 UTC: %SSH-5-SSH2_USERAUTH: User 't_dnac' authentication for SSH2 Session from 10.200.250.20 (tty = 0) using crypto cipher 'aes128-ctr', hmac 'hmac-sha2-256-etm@openssh.com' Succeeded"},"type":"syslog"}
{"message":"<189>227602: *Jul 10 13:12:52.167 UTC: %SEC_LOGIN-5-LOGIN_SUCCESS: Login Success [user: t_dnac] [Source: 10.200.250.20] [localport: 22] at 13:12:52 UTC Thu Jul 10 2025","@version":"1","tags":["_jsonparsefailure","_grokparsefailure"],"host":{"ip":"10.200.226.59"},"@timestamp":"2025-07-10T13:07:18.318984443Z","event":{"original":"<189>227602: *Jul 10 13:12:52.167 UTC: %SEC_LOGIN-5-LOGIN_SUCCESS: Login Success [user: t_dnac] [Source: 10.200.250.20] [localport: 22] at 13:12:52 UTC Thu Jul 10 2025"},"type":"syslog"}
{"message":"<189>227601: *Jul 10 13:12:52.119 UTC: %SSH-5-SSH2_SESSION: SSH2 Session request from 10.200.250.20 (tty = 0) using crypto cipher 'aes128-ctr', hmac 'hmac-sha2-256-etm@openssh.com' Succeeded","@version":"1","tags":["_jsonparsefailure","_grokparsefailure"],"host":{"ip":"10.200.226.59"},"@timestamp":"2025-07-10T13:07:18.318901969Z","event":{"original":"<189>227601: *Jul 10 13:12:52.119 UTC: %SSH-5-SSH2_SESSION: SSH2 Session request from 10.200.250.20 (tty = 0) using crypto cipher 'aes128-ctr', hmac 'hmac-sha2-256-etm@openssh.com' Succeeded"},"type":"syslog"}
{"message":"<189>227603: *Jul 10 13:12:52.167 UTC: %SSH-5-SSH2_USERAUTH: User 't_dnac' authentication for SSH2 Session from 10.200.250.20 (tty = 0) using crypto cipher 'aes128-ctr', hmac 'hmac-sha2-256-etm@openssh.com' Succeeded","@version":"1","tags":["_jsonparsefailure","_grokparsefailure"],"host":{"ip":"10.200.226.59"},"@timestamp":"2025-07-10T13:07:18.319350624Z","event":{"original":"<189>227603: *Jul 10 13:12:52.167 UTC: %SSH-5-SSH2_USERAUTH: User 't_dnac' authentication for SSH2 Session from 10.200.250.20 (tty = 0) using crypto cipher 'aes128-ctr', hmac 'hmac-sha2-256-etm@openssh.com' Succeeded"},"type":"syslog"}
```
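For reference, the `message` field in these events is a plain Cisco IOS syslog line (`<PRI>SEQ: *timestamp: %FACILITY-SEVERITY-MNEMONIC: text`), not JSON. Below is a minimal Python sketch that splits one such line into its parts. The regex and the `parse_cisco` helper are hypothetical illustrations written against the samples above, not the grok pattern used in the pipeline. The PRI decoding (PRI = facility × 8 + severity) is the standard syslog rule, so `<189>` means facility 23 (local7), severity 5 (notice), which agrees with the `-5-` in each mnemonic.

```python
import re
from typing import Optional

# Hypothetical pattern matching the sample messages, e.g.
# "<189>227602: *Jul 10 13:12:52.167 UTC: %SEC_LOGIN-5-LOGIN_SUCCESS: Login Success ..."
CISCO_SYSLOG = re.compile(
    r"^<(?P<pri>\d+)>"                         # syslog priority, e.g. <189>
    r"(?P<seq>\d+): "                          # device sequence number
    r"\*?(?P<ts>\w{3} +\d+ [\d:.]+ \w+): "     # device timestamp, optional leading '*'
    r"%(?P<facility>[A-Z0-9_]+)"               # Cisco facility, e.g. SSH, SEC_LOGIN
    r"-(?P<severity>\d)"                       # Cisco severity digit
    r"-(?P<mnemonic>[A-Z0-9_]+): "             # mnemonic, e.g. SSH2_SESSION
    r"(?P<text>.*)$"                           # free-form message text
)


def parse_cisco(message: str) -> Optional[dict]:
    """Return the named parts of a Cisco IOS syslog line, or None if it does not match."""
    m = CISCO_SYSLOG.match(message)
    if m is None:
        return None
    fields = m.groupdict()
    pri = int(fields["pri"])
    # Standard syslog PRI encoding: PRI = facility * 8 + severity
    fields["syslog_facility"] = pri // 8
    fields["syslog_severity"] = pri % 8
    return fields
```

Run against the `LOGIN_SUCCESS` sample, this yields `seq` "227602", `facility` "SEC_LOGIN", `mnemonic` "LOGIN_SUCCESS", `syslog_facility` 23 and `syslog_severity` 5; a line that does not follow this layout returns `None`.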

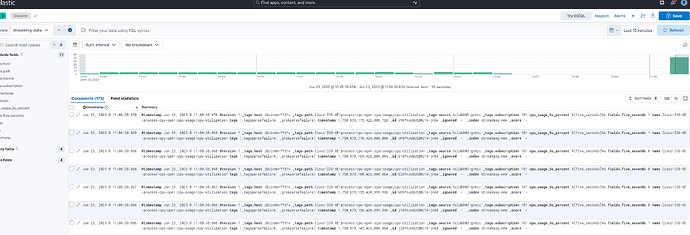

```json
{"@timestamp":"2025-07-10T13:07:39.711967069Z","tags":["_tagsparsefailure"],"_tags":[{"name":"GigabitEthernet0/0","source":"hclab043-gnmic","path":"openconfig-interfaces:interfaces/interface/state/counters","subscription":"140","host":"ibcinmnrffd1v"}],"@version":"1","timestamp":1752153191207000000,"fields":{"in_octets":2623532390},"name":"openconfig-interfaces:interfaces/interface/state/counters"}
{"@timestamp":"2025-07-10T13:08:09.713833274Z","tags":["_tagsparsefailure"],"_tags":[{"name":"GigabitEthernet0/0","source":"hclab043-gnmic","path":"openconfig-interfaces:interfaces/interface/state/counters","subscription":"140","host":"ibcinmnrffd1v"}],"@version":"1","timestamp":1752153221206000000,"fields":{"in_octets":2623594978},"name":"openconfig-interfaces:interfaces/interface/state/counters"}
{"@timestamp":"2025-07-10T13:08:39.714581855Z","tags":["_tagsparsefailure"],"_tags":[{"name":"GigabitEthernet0/0","source":"hclab043-gnmic","path":"openconfig-interfaces:interfaces/interface/state/counters","subscription":"140","host":"ibcinmnrffd1v"}],"@version":"1","timestamp":1752153251207000000,"fields":{"in_octets":2623619950},"name":"openconfig-interfaces:interfaces/interface/state/counters"}
```
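In these gNMIc events, `timestamp` is an epoch value in nanoseconds and `_tags` is a list holding a single object. The minimal Python sketch below shows one way to read both. The helpers `event_time` and `flatten_tags`, and the `tag_` prefix, are hypothetical choices for illustration; the prefix is there because `_tags[0].name` ("GigabitEthernet0/0") would otherwise overwrite the top-level `name` (the OpenConfig path).

```python
from datetime import datetime, timedelta, timezone


def event_time(ns: int) -> datetime:
    """Convert an epoch timestamp in nanoseconds to an aware UTC datetime."""
    # Split into whole seconds and leftover nanoseconds to avoid float rounding
    secs, rem_ns = divmod(ns, 1_000_000_000)
    return datetime.fromtimestamp(secs, tz=timezone.utc) + timedelta(microseconds=rem_ns // 1000)


def flatten_tags(event: dict) -> dict:
    """Copy the keys of each object in `_tags` to the top level with a 'tag_' prefix."""
    flat = {k: v for k, v in event.items() if k != "_tags"}
    for tag in event.get("_tags", []):
        for key, value in tag.items():
            # Hypothetical prefix avoids clobbering top-level keys such as "name"
            flat[f"tag_{key}"] = value
    return flat
```

For the first sample, `event_time(1752153191207000000)` gives 2025-07-10 13:13:11.207 UTC, and `flatten_tags` yields `tag_name` "GigabitEthernet0/0" alongside the unchanged top-level `name`.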

Thanks

Daley