How CPU and memory and disk usage are utilized when we keep running Logstash as a log receiver/compressor/transmitter and increase the amount of input log?

I watch/maintain a server where only one logstash runs and receiver logs from quite a few clients (sending logs with beats through logstash to the server) through the internet. Watching the server performance, I was wondering how the cpu and memory utilization changes as the number of logs and clients increases.

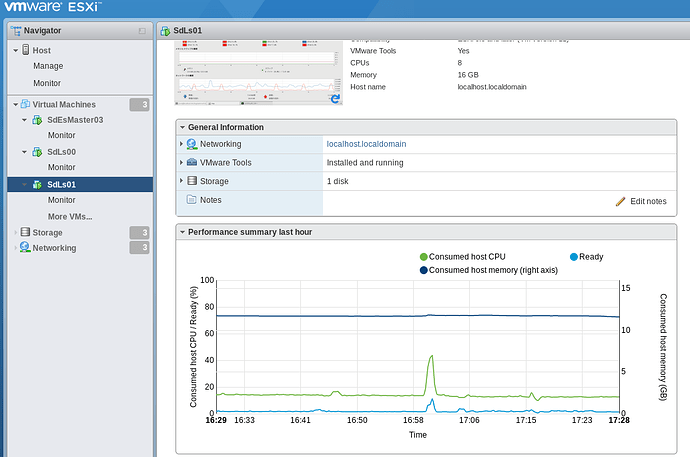

Here are the utilization pictures showing the current on-premise server's utilization.

For now, the server receives log in real time, output the log as a file every second by logstash, zip the log files and forward to the local NAS and restart logstash every hour.

Here are the utilization pictures showing the aws cloud server's utilization that I am developing.

In the pictures above, the cloud server receives log in real time, output the log as a file every 30 seconds by logstash, zip the log files and forward to the S3 every 30 seconds.

0-1 In general, how does logstash consume/utilize memory and CPU resource according to the amount of data input/output?

0-2 To begin with, how does logstash temporarily store the logs received? Does it store on computer's memory?

0-3 Does CPU usage increases as logstash receives more log, or does CPU usage depends only on how frequently output the received logs?

1 While I guess it may depend on how I configure logstash.conf especially on the frequency of forwarding logs, how (much) does the memory utilization change (increase) when the server receives much more logs than it does now or it is capable of? Does it just slow down or crash?

2 While I guess it may depend on how I configure logstash.conf especially on the frequency of forwarding logs, how (much) does the CPU utilization change (increase) when the server receives much more logs than it does now or it is capable of? Does it just slow down or crash?

3 Why does the memory utilization not change even though the amount of logs received changed by time more or less on the on-premise server? Does logstash reserve some memory when it starts (and increases the allocated memory when logstash has to tentatively store the logs more than it can with the initially reserved memory)?

I you wanna more information to answer the questions, feel free to let me know.

Thank you.