I wasn't really asking you that question; it was more of a rhetorical one, aimed as much at the other ELK experts on the forum. Apologies for the lack of clarity.

Well, I'm not saying it should, or does, or .... But it offers fairly low-level, fine-grained tools for lots of things via its APIs: disk usage, memory usage, query performance, hot threads, etc.
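
For example, here's a minimal sketch for grabbing a few of those in one go. It assumes an unsecured cluster reachable over plain HTTP at a hypothetical `http://localhost:9200` (you'd need to add auth/TLS if security is enabled). The endpoint paths are real Elasticsearch APIs; `diagnostic_urls` and `fetch` are just illustrative helpers I made up:

```python
import urllib.request

# Real Elasticsearch endpoints; the dict keys are just labels for readability.
DIAGNOSTIC_PATHS = {
    "disk_allocation": "/_cat/allocation?v",           # disk used/available per node
    "node_fs_os_jvm": "/_nodes/stats/fs,os,jvm",       # filesystem, OS and heap stats
    "index_stats": "/_stats/store,docs,merge,search",  # per-index store/docs/merge/search
    "hot_threads": "/_nodes/hot_threads",              # busiest threads, plain text
}


def diagnostic_urls(base_url):
    """Build full URLs for each diagnostic endpoint from a cluster base URL."""
    base = base_url.rstrip("/")
    return {name: base + path for name, path in DIAGNOSTIC_PATHS.items()}


def fetch(url, timeout=10):
    """GET a URL and return the response body as text (no auth, plain HTTP)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")
```

Then e.g. `print(fetch(diagnostic_urls("http://localhost:9200")["hot_threads"]))` dumps the hot threads. Note that `_nodes/hot_threads` and the `_cat` endpoint return text, while the other two return JSON.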

Yeah, absolutely, forget that. I understand that running the Docker image limits the tools available to you, so we should try to understand the issue with the tools you do have.

That's useful, another data point. I presume you meant segment merges? Are those done automatically by Elasticsearch, or are you doing some merging yourselves (e.g. via the force merge API)?
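
If you want numbers rather than impressions, the index stats API reports merge activity. Here's a rough sketch, assuming you've already fetched `GET /_stats/merge` (or `GET /<index>/_stats/merge`) as parsed JSON. `summarize_merges` is a hypothetical helper, and the example numbers are made up purely to show the input shape. The counters are cumulative, so the difference between two snapshots says more than a single reading:

```python
def summarize_merges(merges):
    """Summarize a "merges" section from the Elasticsearch index stats API.

    `merges` is e.g. response["_all"]["total"]["merges"] from GET /_stats/merge.
    """
    count = merges["total"]                    # completed merges
    total_ms = merges["total_time_in_millis"]  # time spent merging
    if count == 0:
        return {"merges": 0, "avg_size_mb": 0.0, "avg_time_s": 0.0, "throttled_pct": 0.0}
    return {
        "merges": count,
        "avg_size_mb": merges["total_size_in_bytes"] / count / 1024 ** 2,
        "avg_time_s": total_ms / count / 1000,
        # Share of merge time spent rate-limited by merge I/O throttling
        "throttled_pct": (100.0 * merges["total_throttled_time_in_millis"] / total_ms
                          if total_ms else 0.0),
    }


# Made-up example numbers, only to show the shape of the input.
example = {
    "total": 4,
    "total_time_in_millis": 8000,
    "total_size_in_bytes": 40 * 1024 ** 2,
    "total_throttled_time_in_millis": 2000,
}
print(summarize_merges(example))
# {'merges': 4, 'avg_size_mb': 10.0, 'avg_time_s': 2.0, 'throttled_pct': 25.0}
```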

... and another.

Your actual use case, summarized in a few words, is ...? I.e. what sort of data makes up the 5TB you have stored in Elasticsearch?

Are your indices, the actual indices you can see from `_cat/indices`, "the same" as before? I mean only that if, for example, you ingested say 10 million docs per day, you still ingest roughly 10 million docs per day, and the storage size per index and per document looks very similar too. Do you do a significant number of deletes?
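
To make that comparison concrete, here's a sketch that turns the output of `GET /_cat/indices?format=json&bytes=b` into bytes per document and a deleted-docs percentage per index. It assumes the standard `_cat/indices` column names (`docs.count`, `docs.deleted`, `pri.store.size`), whose values come back as strings; `index_size_report` is a hypothetical helper. Keep in mind these are Lucene-level counts (nested docs included), and `docs.deleted` also grows with updates, since an update marks the old copy of the document as deleted:

```python
def index_size_report(rows):
    """Per-index size/deletes summary from parsed _cat/indices JSON rows."""
    report = []
    for row in rows:
        # _cat values are strings; closed indices may report null counts
        docs = int(row.get("docs.count") or 0)
        deleted = int(row.get("docs.deleted") or 0)
        pri_bytes = int(row.get("pri.store.size") or 0)
        report.append({
            "index": row["index"],
            "docs": docs,
            # Primary store size (still including deleted docs not yet merged away) per live doc
            "bytes_per_doc": pri_bytes / docs if docs else None,
            # Deleted docs as a share of all docs still on disk
            "deleted_pct": 100.0 * deleted / (docs + deleted) if docs + deleted else 0.0,
        })
    return sorted(report, key=lambda r: r["index"])
```

You could feed it `json.load(urllib.request.urlopen("http://localhost:9200/_cat/indices?format=json&bytes=b"))` (hypothetical host) and compare recent indices with ones from before the problem started.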

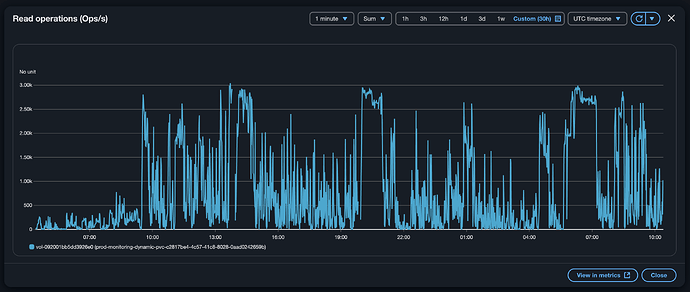

If Prometheus has any stats on IOPS per index I'd also be surprised, but maybe there is a useful API I'm just forgetting (or never knew about).
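
What I do know of is node-level (not per-index) disk I/O counters under `fs.io_stats` in the nodes stats API. They're reported on Linux only, and I'm not sure how faithfully they reflect the underlying devices from inside a Docker container. The counters are cumulative, so here's a rough sketch that turns two `GET /_nodes/stats/fs` snapshots, taken a known number of seconds apart, into average read/write IOPS per node; `node_iops` is a hypothetical helper:

```python
def node_iops(before, after, interval_s):
    """Average read/write IOPS per node between two GET /_nodes/stats/fs responses."""
    def io_total(node):
        # fs.io_stats is only present on Linux; returns None if missing
        return node.get("fs", {}).get("io_stats", {}).get("total")

    result = {}
    for node_id, node_after in after["nodes"].items():
        node_before = before["nodes"].get(node_id)
        if node_before is None:
            continue  # node joined between the two snapshots
        io_b, io_a = io_total(node_before), io_total(node_after)
        if not io_b or not io_a:
            continue  # no I/O stats reported for this node
        result[node_after.get("name", node_id)] = {
            "read_iops": (io_a["read_operations"] - io_b["read_operations"]) / interval_s,
            "write_iops": (io_a["write_operations"] - io_b["write_operations"]) / interval_s,
        }
    return result
```

If a node restarts between the two snapshots its counters reset, so the numbers for that node would be meaningless.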

A very interesting mystery!