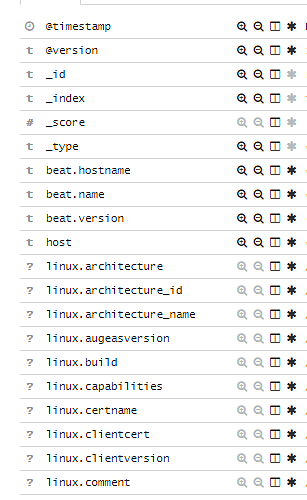

Hi, I am ingesting the following JSON data into Elasticsearch through Logstash. The document has around 100 fields, but I am only interested in sending four of them: `linux.domain`, `linux.environment_id`, `linux.hostname` and `linux.id`.

Is there a better way to keep only the four fields I am interested in, instead of using the `remove_field` option and listing all the other fields by hand in the config?
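One way to invert the logic is a `ruby` filter that holds a keep-list and discards every other key under `[linux]`. This is an untested sketch that assumes Logstash 5.x or later (for the `event.get`/`event.set` Event API) and assumes the parsed JSON sits under `[linux]`, as it does with `target => "linux"` in the config in this post. Note that inside Logstash nested fields are addressed as `[linux][domain]`; `linux.domain` is how Kibana displays them.

```
filter {
  # grok { ... } and json { ... target => "linux" } from the config in this post come first

  ruby {
    # Keep-list of sub-keys under [linux]; every other key under [linux] is dropped.
    code => '
      keep = ["domain", "environment_id", "hostname", "id"]
      linux = event.get("linux")
      if linux.is_a?(Hash)
        event.set("linux", linux.select { |key, _value| keep.include?(key) })
      end
    '
  }
}
```

Only `[linux]` is filtered here; other top-level fields such as `message` and `logged_json` are left as they are and would still need their own `remove_field` if unwanted.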

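What I want to avoid is listing every unwanted field by hand, like this (about 96 entries in total):

```
mutate {
  # must run after the json filter, otherwise [linux] does not exist yet
  remove_field => ["[linux][architecture]", "[linux][architecture_id]", "[linux][architecture_name]"]
}
```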
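A keep-list variant that needs no Ruby, sketched under stated assumptions and untested: parse the JSON into a scratch field under `[@metadata]` and rename only the four wanted keys into `[linux]`. Logstash does not send `[@metadata]` fields to outputs, so the remaining keys never reach Elasticsearch. The scratch field name `[@metadata][parsed]` is a hypothetical choice.

```
filter {
  # grok { ... } from the config in this post, unchanged

  json {
    source => "logged_json"
    # parse into a scratch field instead of [linux]
    target => "[@metadata][parsed]"
  }
  mutate {
    # move only the four wanted keys into [linux]; missing keys are skipped
    rename => {
      "[@metadata][parsed][domain]"         => "[linux][domain]"
      "[@metadata][parsed][environment_id]" => "[linux][environment_id]"
      "[@metadata][parsed][hostname]"       => "[linux][hostname]"
      "[@metadata][parsed][id]"             => "[linux][id]"
    }
  }
}
```

The list in the config now names the 4 fields to keep rather than the ~96 to drop.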

My Logstash config:

```
input {
  beats {
    port => 5044
    type => "doc"
  }
}

filter {
  grok {
    match => { "message" => "^{?\n?.*?(?<logged_json>{.*)" }
  }
  json {
    source => "logged_json"
    target => "linux"
    # remove_field => "parameter"
  }
}

output {
  if [type] == "doc" {
    elasticsearch {
      hosts => [ "10.138.7.51:9200" ]
      index => "testing-%{+YYYY-MM-dd}"
    }
  } else {
    elasticsearch {
      hosts => [ "10.138.7.51:9200" ]
      index => "tester-%{+YYYY-MM-dd}"
    }
  }
}
```
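If the `prune` filter plugin is available in your Logstash install, its `whitelist_names` option (a list of regexes) implements this kind of keep-list, but the plugin only operates on top-level fields, so it cannot reach inside `[linux]`. A sketch under that constraint, untested: drop `target` from the `json` filter so the parsed keys land at the top level, prune, then move the survivors back under `[linux]`. This assumes no JSON key collides with an existing top-level field; `type` (used by the output conditionals) and `@timestamp` (used by the index date pattern) are whitelisted so they survive.

```
filter {
  # grok { ... } from the config above, unchanged

  json {
    source => "logged_json"
    # no target: parsed keys land at the top level, where prune can see them
  }
  prune {
    # anything at the top level whose name matches none of these regexes is removed
    whitelist_names => [ "^@timestamp$", "^type$", "^domain$", "^environment_id$", "^hostname$", "^id$" ]
  }
  mutate {
    # restore the linux.* naming
    rename => {
      "domain"         => "[linux][domain]"
      "environment_id" => "[linux][environment_id]"
      "hostname"       => "[linux][hostname]"
      "id"             => "[linux][id]"
    }
  }
}
```

Unlike the sketches limited to `[linux]`, this also removes `message`, `logged_json` and the Beats metadata fields.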