Hi there

I have two problems that are probably related to each other:

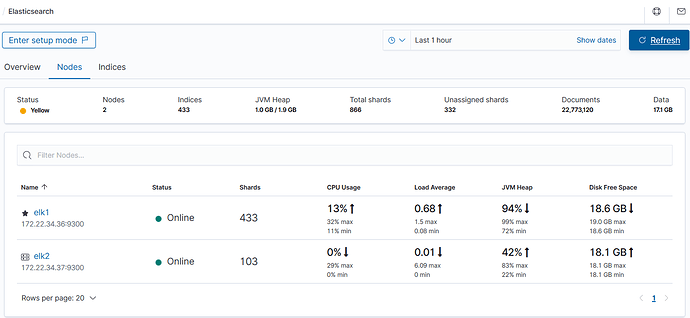

I have a basic cluster with 2 data nodes.

But the cluster status is always "Yellow", and these 2 nodes do not distribute the primary shards and replicas between them:

So I ran a reroute: curl -XPOST "localhost:9200/_cluster/reroute?retry_failed=true"

It returned some output but didn't work properly (there are still a number of unassigned shards).

This is part of the output:

{"acknowledged":true,"state":{"cluster_uuid":"nembwwEEQGCpPb0zW0C8_A","version":261913,"state_uuid":"vK1LtVbzQ8-j7UNJ7DV-6w","master_node":"veBpqUZrSIC9pX1SNrTvug","blocks":{},"nodes":{"2m_WCRVhQk6SAHo6zIG6cA":{"name":"elk2","ephemeral_id":"icEWYUHrTS2lxW7lGJ9sBw","transport_address":"172.22.34.37:9300","attributes":{"ml.machine_memory":"3973464064","ml.max_open_jobs":"20","xpack.installed":"true"}},"veBpqUZrSIC9pX1SNrTvug":{"name":"elk1","ephemeral_id":"IAcFFk3TROapKhfq2FB7tg","transport_address":"172.22.34.36:9300","attributes":{"ml.machine_memory":"3973468160","ml.max_open_jobs":"20","xpack.installed":"true"}}},"routing_table":{"indices":{"testlog-2020.07.15":{"shards":{"0":[{"state":"STARTED","primary":true,"node":"veBpqUZrSIC9pX1SNrTvug","relocating_node":null,"shard":0,"index":"testlog-2020.07.15","allocation_id":{"id":"kM1UZhkxSbe1ModVTQxkmw"}},{"state":"UNASSIGNED","primary":false,"node":null,"relocating_node":null,"shard":0,"index":"testlog-2020.07.15","recovery_source":{"type":"PEER"},"unassigned_info":{"reason":"NODE_LEFT","at":"2021-04-26T05:01:20.117Z","delayed":false,"details":"node_left [2m_WCRVhQk6SAHo6zIG6cA]","allocation_status":"no_attempt"}}]}},"testlog-2020.11.27":{"shards":{"0":[{"state":"STARTED","primary":true,"node":"veBpqUZrSIC9pX1SNrTvug","relocating_node":null,"shard":0,"index":"testlog-2020.11.27","allocation_id":{"id":"WmSFM1m2T7uzCcc66R0A_w"}},{"state":"UNASSIGNED","primary":false,"node":null,"relocating_node":null,"shard":0,"index":"testlog-2020.11.27","recovery_source":{"type":"PEER"},"unassigned_info":{"reason":"NODE_LEFT","at":"2021-04-26T05:01:20.117Z","delayed":false,"details":"node_left 
[2m_WCRVhQk6SAHo6zIG6cA]","allocation_status":"no_attempt"}}]}},"testlog-2020.09.24":{"shards":{"0":[{"state":"STARTED","primary":true,"node":"veBpqUZrSIC9pX1SNrTvug","relocating_node":null,"shard":0,"index":"testlog-2020.09.24","allocation_id":{"id":"zWPF9tY4SpeEjlCrbWqJeA"}},{"state":"UNASSIGNED","primary":false,"node":null,"relocating_node":null,"shard":0,"index":"testlog-2020.09.24","recovery_source":{"type":"PEER"},"unassigned_info":{"reason":"NODE_LEFT","at":"2021-04-24T09:47:12.975Z","delayed":false,"details":"node_left [2m_WCRVhQk6SAHo6zIG6cA]","allocation_status":"no_attempt"}}]}}...
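To see at a glance which copies are still unassigned and why, the `routing_table` part of a response like the one above can be walked with a few lines of Python. This is only a sketch: the function names `unassigned_copies` and `explain_body` are made up for illustration, and `sample` is a hand-trimmed copy of a single index from the output above. The dict built by `explain_body` has the shape accepted by the cluster allocation explain API (`/_cluster/allocation/explain`), which reports why a specific shard copy is not allocated.

```python
import json


def unassigned_copies(response):
    """Return one summary dict per UNASSIGNED shard copy in a reroute response."""
    found = []
    indices = response.get("state", {}).get("routing_table", {}).get("indices", {})
    for index_name, index_data in indices.items():
        for shard_id, copies in index_data.get("shards", {}).items():
            for copy in copies:
                if copy.get("state") != "UNASSIGNED":
                    continue
                info = copy.get("unassigned_info", {})
                found.append({
                    "index": index_name,
                    "shard": int(shard_id),
                    "primary": copy.get("primary", False),
                    "reason": info.get("reason"),
                    "allocation_status": info.get("allocation_status"),
                })
    return found


def explain_body(copy):
    """Build the JSON body for the cluster allocation explain API for one copy."""
    return {"index": copy["index"], "shard": copy["shard"], "primary": copy["primary"]}


# Hand-trimmed sample: one index from the response above, unrelated fields dropped.
sample = {
    "acknowledged": True,
    "state": {"routing_table": {"indices": {"testlog-2020.07.15": {"shards": {"0": [
        {"state": "STARTED", "primary": True, "node": "veBpqUZrSIC9pX1SNrTvug",
         "shard": 0, "index": "testlog-2020.07.15"},
        {"state": "UNASSIGNED", "primary": False, "node": None,
         "shard": 0, "index": "testlog-2020.07.15",
         "unassigned_info": {"reason": "NODE_LEFT",
                             "details": "node_left [2m_WCRVhQk6SAHo6zIG6cA]",
                             "allocation_status": "no_attempt"}},
    ]}}}}},
}

for copy in unassigned_copies(sample):
    print(copy["index"], copy["shard"], copy["reason"], copy["allocation_status"])
    print(json.dumps(explain_body(copy)))
```

The printed JSON can then be posted to `localhost:9200/_cluster/allocation/explain` with a `Content-Type: application/json` header to get the allocation decision for that replica.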

This is my Kibana monitoring:

Besides that, I have another problem: I get errors when I open Stack Monitoring:

My RAM and JVM heap look OK (each node has 4G of RAM and a 1.9G JVM heap), so I don't know where the problem is.

These are the relevant logs from Kibana:

{"type":"log","@timestamp":"2021-04-26T05:36:07Z","tags":["status","plugin:spaces@7.6.2","error"],"pid":4153,"state":"red","message":"Status changed from red to red - [parent] Data too large, data for [<http_request>] would be [989246376/943.4mb], which is larger than the limit of [986932838/941.2mb], real usage: [989246376/943.4mb], new bytes reserved: [0/0b], usages [request=0/0b, fielddata=44990/43.9kb, in_flight_requests=0/0b, accounting=60808081/57.9mb]: [circuit_breaking_exception] [parent] Data too large, data for [<http_request>] would be [989246376/943.4mb], which is larger than the limit of [986932838/941.2mb], real usage: [989246376/943.4mb], new bytes reserved: [0/0b], usages [request=0/0b, fielddata=44990/43.9kb, in_flight_requests=0/0b, accounting=60808081/57.9mb], with { bytes_wanted=989246376 & bytes_limit=986932838 & durability=\"PERMANENT\" }","prevState":"red","prevMsg":"[parent] Data too large, data for [<http_request>] would be [988629224/942.8mb], which is larger than the limit of [986932838/941.2mb], real usage: [988629224/942.8mb], new bytes reserved: [0/0b], usages [request=0/0b, fielddata=44990/43.9kb, in_flight_requests=0/0b, accounting=60808081/57.9mb]: [circuit_breaking_exception] [parent] Data too large, data for [<http_request>] would be [988629224/942.8mb], which is larger than the limit of [986932838/941.2mb], real usage: [988629224/942.8mb], new bytes reserved: [0/0b], usages [request=0/0b, fielddata=44990/43.9kb, in_flight_requests=0/0b, accounting=60808081/57.9mb], with { bytes_wanted=988629224 & bytes_limit=986932838 & durability=\"PERMANENT\" }"}
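The numbers inside this message can be used to sanity-check the heap size. The sketch below pulls the byte counts out of the text and works backwards from the limit. It rests on one assumption that is not confirmed anywhere in this post: that `indices.breaker.total.limit` is left at its 7.x default of 95% of the JVM heap (the default when real-memory accounting is on, which the "real usage" wording suggests). The function name `parse_breaker_message` is made up for illustration.

```python
import re

# Parent-breaker message copied from the Kibana log above (one occurrence only).
message = (
    "[parent] Data too large, data for [<http_request>] would be "
    "[989246376/943.4mb], which is larger than the limit of "
    "[986932838/941.2mb], real usage: [989246376/943.4mb], "
    "new bytes reserved: [0/0b], usages [request=0/0b, "
    "fielddata=44990/43.9kb, in_flight_requests=0/0b, "
    "accounting=60808081/57.9mb]"
)

# ASSUMPTION: parent breaker limit is the default 95% of the JVM heap.
ASSUMED_PARENT_LIMIT_FRACTION = 0.95
MIB = 1024 * 1024


def parse_breaker_message(text):
    """Extract the byte counts from a parent circuit breaker message."""
    return {
        "would_be": int(re.search(r"would be \[(\d+)/", text).group(1)),
        "limit": int(re.search(r"limit of \[(\d+)/", text).group(1)),
        "real_usage": int(re.search(r"real usage: \[(\d+)/", text).group(1)),
        # Child breaker estimates, e.g. {"fielddata": 44990, ...}
        "usages": {name: int(value)
                   for name, value in re.findall(r"(\w+)=(\d+)/", text)},
    }


parsed = parse_breaker_message(message)
overshoot = parsed["would_be"] - parsed["limit"]
tracked = sum(parsed["usages"].values())
implied_heap = parsed["limit"] / ASSUMED_PARENT_LIMIT_FRACTION

print(f"over the limit by:        {overshoot} bytes")
print(f"tracked by child breakers: {tracked / MIB:.1f} MiB")
print(f"implied max heap:          {implied_heap / MIB:.2f} MiB")
```

Under that assumption the implied maximum heap comes out at roughly 990.75 MiB, which is what a 1 GB heap setting typically reports, not 1.9 GB. The child breakers account for only about 58 MiB, so almost all of the "real usage" is general heap occupancy rather than one oversized request.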

And this is one of the Elasticsearch log entries from around the same time:

[2021-04-26T10:11:53,115][DEBUG][o.e.a.g.TransportGetAction] [elk2] null: failed to execute [get [.kibana][_doc][space:default]: routing [null]]

org.elasticsearch.transport.RemoteTransportException: [elk1][172.22.34.36:9300][indices:data/read/get[s]]

Caused by: org.elasticsearch.common.breaker.CircuitBreakingException: [parent] Data too large, data for [<transport_request>] would be [990432222/944.5mb], which is larger than the limit of [986932838/941.2mb], real usage: [990431960/944.5mb], new bytes reserved: [262/262b], usages [request=0/0b, fielddata=47965/46.8kb, in_flight_requests=262/262b, accounting=60837409/58mb]

at org.elasticsearch.indices.breaker.HierarchyCircuitBreakerService.checkParentLimit(HierarchyCircuitBreakerService.java:343) ~[elasticsearch-7.6.2.jar:7.6.2]

at org.elasticsearch.common.breaker.ChildMemoryCircuitBreaker.addEstimateBytesAndMaybeBreak(ChildMemoryCircuitBreaker.java:128) ~[elasticsearch-7.6.2.jar:7.6.2]

at org.elasticsearch.transport.InboundHandler.handleRequest(InboundHandler.java:171) [elasticsearch-7.6.2.jar:7.6.2]

at org.elasticsearch.transport.InboundHandler.messageReceived(InboundHandler.java:119) [elasticsearch-7.6.2.jar:7.6.2]

at org.elasticsearch.transport.InboundHandler.inboundMessage(InboundHandler.java:103) [elasticsearch-7.6.2.jar:7.6.2]

at org.elasticsearch.transport.TcpTransport.inboundMessage(TcpTransport.java:667) [elasticsearch-7.6.2.jar:7.6.2]

at org.elasticsearch.transport.netty4.Netty4MessageChannelHandler.channelRead(Netty4MessageChannelHandler.java:62) [transport-netty4-client-7.6.2.jar:7.6.2]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:374) [netty-transport-4.1.43.Final.jar:4.1.43.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:360) [netty-transport-4.1.43.Final.jar:4.1.43.Final]

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:352) [netty-transport-4.1.43.Final.jar:4.1.43.Final]

at io.netty.handler.codec.ByteToMessageDecoder.fireChannelRead(ByteToMessageDecoder.java:326) [netty-codec-4.1.43.Final.jar:4.1.43.Final]

at io.netty.handler.codec.ByteToMessageDecoder.channelRead(ByteToMessageDecoder.java:300) [netty-codec-4.1.43.Final.jar:4.1.43.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:374) [netty-transport-4.1.43.Final.jar:4.1.43.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:360) [netty-transport-4.1.43.Final.jar:4.1.43.Final]

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:352) [netty-transport-4.1.43.Final.jar:4.1.43.Final]

at io.netty.handler.logging.LoggingHandler.channelRead(LoggingHandler.java:241) [netty-handler-4.1.43.Final.jar:4.1.43.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:374) [netty-transport-4.1.43.Final.jar:4.1.43.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:360) [netty-transport-4.1.43.Final.jar:4.1.43.Final]

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:352) [netty-transport-4.1.43.Final.jar:4.1.43.Final]

at io.netty.channel.DefaultChannelPipeline$HeadContext.channelRead(DefaultChannelPipeline.java:1422) [netty-transport-4.1.43.Final.jar:4.1.43.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:374) [netty-transport-4.1.43.Final.jar:4.1.43.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:360) [netty-transport-4.1.43.Final.jar:4.1.43.Final]

at io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:931) [netty-transport-4.1.43.Final.jar:4.1.43.Final]

at io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:163) [netty-transport-4.1.43.Final.jar:4.1.43.Final]

at io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:700) [netty-transport-4.1.43.Final.jar:4.1.43.Final]

at io.netty.channel.nio.NioEventLoop.processSelectedKeysPlain(NioEventLoop.java:600) [netty-transport-4.1.43.Final.jar:4.1.43.Final]

at io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:554) [netty-transport-4.1.43.Final.jar:4.1.43.Final]

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:514) [netty-transport-4.1.43.Final.jar:4.1.43.Final]

at io.netty.util.concurrent.SingleThreadEventExecutor$6.run(SingleThreadEventExecutor.java:1050) [netty-common-4.1.43.Final.jar:4.1.43.Final]

at io.netty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74) [netty-common-4.1.43.Final.jar:4.1.43.Final]

at java.lang.Thread.run(Thread.java:830) [?:?]
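Since both traces come from the parent circuit breaker, it may be worth confirming the heap each node actually runs with instead of relying on the configured value. A minimal sketch, assuming the cluster answers on `http://localhost:9200` without authentication: it reads `heap_max_in_bytes` and `heap_used_percent` from the node stats API (`/_nodes/stats/jvm`). The helper names are made up for illustration, and the `example` dict only shows the response shape with placeholder numbers, not measurements from this cluster.

```python
import json
import urllib.request


def heap_summary(stats):
    """Map node name -> (heap_max_in_bytes, heap_used_percent)."""
    summary = {}
    for node in stats.get("nodes", {}).values():
        mem = node.get("jvm", {}).get("mem", {})
        summary[node.get("name")] = (
            mem.get("heap_max_in_bytes"),
            mem.get("heap_used_percent"),
        )
    return summary


def fetch_jvm_stats(base_url="http://localhost:9200"):
    """Fetch JVM stats for all nodes (assumes no authentication is required)."""
    with urllib.request.urlopen(base_url + "/_nodes/stats/jvm") as resp:
        return json.load(resp)


# Placeholder response showing only the fields used above; values are invented.
example = {
    "nodes": {
        "node-id-a": {"name": "elk1",
                      "jvm": {"mem": {"heap_max_in_bytes": 1024,
                                      "heap_used_percent": 50}}},
        "node-id-b": {"name": "elk2",
                      "jvm": {"mem": {"heap_max_in_bytes": 2048,
                                      "heap_used_percent": 25}}},
    }
}

for name, (heap_max, used_pct) in heap_summary(example).items():
    print(name, heap_max, used_pct)
```

Against a live cluster, `heap_summary(fetch_jvm_stats())` would give the real per-node values to compare with the breaker limit in the logs.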

Could you help me with these problems?

How can I fix this monitoring issue, and why does my status not change to "Green"?

Thanks in advance