Hello Team,

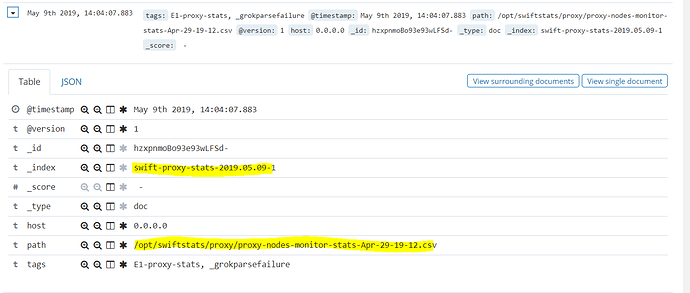

I have two conf files, shown below. The 1st conf file uses the output index alias "stats_write_swift_proxy_alias" to process the proxy-nodes-monitor-stats-*.csv and proxy-nodes-latency-stats-*.csv files. However, this alias is not receiving those files; instead it is receiving the /opt/swiftstats/aco/new/*.csv files. Am I doing anything wrong? This worked as expected in version 6.1.3, and I started seeing the issue right after upgrading to 6.7.1.

The proxy-nodes-monitor-stats-*.csv and proxy-nodes-latency-stats-*.csv files are supposed to go to the index behind the alias stats_write_swift_proxy_alias but, as you can see, it is processing the csv files from the path given in the 2nd conf file.

Here are my index and alias details:

    green open swift-proxy-stats-2019.05.09-1  qSZopH_LTziMbNXfUL5Szg 5 0  200750 0  17.5mb  17.5mb
    green open swift-aco-stats-os-2019.05.09-1 o36VEV07Rd-WdHkaor7VYg 5 0 1669229 0 197.3mb 197.3mb

    alias                         index                           filter routing.index routing.search
    stats_write_swift_proxy_alias swift-proxy-stats-2019.05.09-1  -      -             -
    stats_write_swift_aco_alias   swift-aco-stats-os-2019.05.09-1 -      -             -

1. 1st conf file:

    input {
      file {
        path => "/opt/swiftstats/proxy/proxy-nodes-monitor-stats-*.csv"
        start_position => "beginning"
        sincedb_path => "/opt/data/logstash/plugins/inputs/file/proxy-stats.log"
        tags => ["E1-proxy-stats"]
      }
      file {
        path => "/opt/swiftstats/proxy-latency/proxy-nodes-latency-stats-*.csv"
        start_position => "beginning"
        sincedb_path => "/opt/data/logstash/plugins/inputs/file/proxy-latency-stats.log"
        tags => ["E1-proxy-latency"]
      }
    }

--filters

    output {
      elasticsearch {
        hosts => [ "http://10.1.28.176:9200" ]
        index => "stats_write_swift_proxy_alias"
        template => "/opt/logstash/config/templates/swift_proxy_stats.json"
        template_name => "swift_proxy_stats_template"
        template_overwrite => true
      }
    }

Template:

[elk@elk2 572019]$ cat /opt/logstash/config/templates/swift_proxy_stats.json

    {
      "template": "swift_proxy_stats_template",
      "index_patterns": ["swift-proxy-stats-*"],
      "settings": {
        "index.refresh_interval": "5s",
        "index.codec": "best_compression",
        "number_of_shards": 5,
        "number_of_replicas": 0
      },
      "aliases": {
        "stats_write_swift_proxy_alias": {}
      }
    }

2. 2nd conf file:

    input {
      file {
        path => "/opt/swiftstats/aco/new/*.csv"
        start_position => "beginning"
        #sincedb_path => "/dev/null"
        sincedb_path => "/opt/data/logstash/plugins/inputs/file/swift_aco_os_handoff.log"
        tags => ["E1-aco"]
      }
    }

-- filters

    output {
      if "E1-aco" in [tags] {
        elasticsearch {
          hosts => [ "http://10.1.28.176:9200" ]
          index => "stats_write_swift_aco_alias"
          template => "/opt/logstash/config/templates/swift_aco_stats.json"
          template_name => "swift_aco_stats_template"
          template_overwrite => true
        }
      }
    }

2nd conf file Template:

[elk@elk2 572019]$ cat /opt/logstash/config/templates/swift_aco_stats.json

    {
      "template": "swift_aco_stats_template",
      "index_patterns": ["swift-aco-stats-os*"],
      "settings": {
        "index.refresh_interval": "5s",
        "index.codec": "best_compression",
        "number_of_shards": 5,
        "number_of_replicas": 0
      },
      "aliases": {
        "stats_write_swift_aco_alias": {}
      }
    }
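
One difference between the two files is that only the 2nd one wraps its output in a tag conditional. Below is a minimal sketch of the 1st output with the same kind of guard. It assumes both conf files are loaded into a single pipeline, where Logstash merges all conf files and every event reaches every output that is not inside a conditional. I have not confirmed that this is what is happening in my setup.

```
output {
  # Assumption: both conf files run in the same pipeline, so without this
  # guard events tagged "E1-aco" would also reach this output.
  if "E1-proxy-stats" in [tags] or "E1-proxy-latency" in [tags] {
    elasticsearch {
      hosts => [ "http://10.1.28.176:9200" ]
      index => "stats_write_swift_proxy_alias"
      template => "/opt/logstash/config/templates/swift_proxy_stats.json"
      template_name => "swift_proxy_stats_template"
      template_overwrite => true
    }
  }
}
```

The tag names are the ones set on the two file inputs of the 1st conf file; everything else is unchanged from the output shown earlier.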

Thanks

Chandra