Hi,

I am new to Elasticsearch.

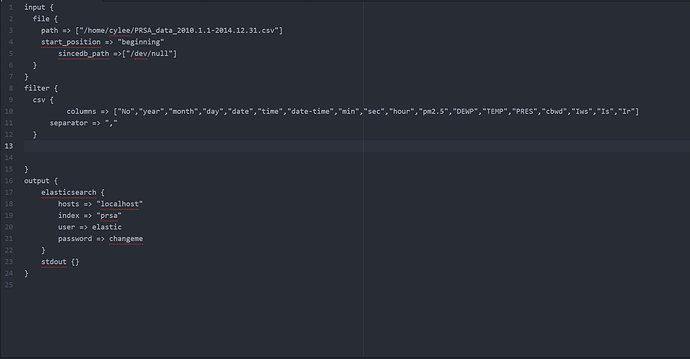

I tried to import data into Elasticsearch using my "logstash_general.conf".

However, it didn't work.

When I entered the following command,

/usr/share/logstash/bin/logstash -f /home/cylee/logstash_general.conf --path.settings=/etc/logstash

the terminal responded with this:

ERROR StatusLogger No log4j2 configuration file found. Using default configuration: logging only errors to the console. Sending Logstash's logs to /var/log/logstash which is now configured via log4j2.properties

I successfully imported the log.conf that is provided by Elasticsearch, so I think either my .csv or my .conf is the cause.

This is the log file for Logstash.

[2017-08-08T16:27:11,988][INFO ][logstash.outputs.elasticsearch] Elasticsearch pool URLs updated {:changes=>{:removed=>[], :added=>[http://elastic:xxxxxx@localhost:9200/]}}

[2017-08-08T16:27:11,993][INFO ][logstash.outputs.elasticsearch] Running health check to see if an Elasticsearch connection is working {:healthcheck_url=>http://elastic:xxxxxx@localhost:9200/, :path=>"/"}

[2017-08-08T16:27:12,127][WARN ][logstash.outputs.elasticsearch] Restored connection to ES instance {:url=>#<Java::JavaNet::URI:0x7f1af198>}

[2017-08-08T16:27:12,128][INFO ][logstash.outputs.elasticsearch] Using mapping template from {:path=>nil}

[2017-08-08T16:27:12,172][INFO ][logstash.outputs.elasticsearch] Attempting to install template {:manage_template=>{"template"=>"logstash-*", "version"=>50001, "settings"=>{"index.refresh_interval"=>"5s"}, "mappings"=>{"_default_"=>{"_all"=>{"enabled"=>true, "norms"=>false}, "dynamic_templates"=>[{"message_field"=>{"path_match"=>"message", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false}}}, {"string_fields"=>{"match"=>"*", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false, "fields"=>{"keyword"=>{"type"=>"keyword", "ignore_above"=>256}}}}}], "properties"=>{"@timestamp"=>{"type"=>"date", "include_in_all"=>false}, "@version"=>{"type"=>"keyword", "include_in_all"=>false}, "geoip"=>{"dynamic"=>true, "properties"=>{"ip"=>{"type"=>"ip"}, "location"=>{"type"=>"geo_point"}, "latitude"=>{"type"=>"half_float"}, "longitude"=>{"type"=>"half_float"}}}}}}}}

[2017-08-08T16:27:12,179][INFO ][logstash.outputs.elasticsearch] New Elasticsearch output {:class=>"LogStash::Outputs::ElasticSearch", :hosts=>[#<Java::JavaNet::URI:0x16313c2>]}

[2017-08-08T16:27:12,185][INFO ][logstash.pipeline ] Starting pipeline {"id"=>"main", "pipeline.workers"=>8, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>5, "pipeline.max_inflight"=>1000}

[2017-08-08T16:27:12,332][INFO ][logstash.pipeline ] Pipeline main started

[2017-08-08T16:27:12,374][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}

These are my "logstash_general.conf" file and "PRSA_data_2010.1.1-2014.12.31.csv".

P.S. I am trying to import the PRSA .csv so that I can use machine learning on it, but I have no idea how to create a timestamp field from the data.

It would be really great if your answer could also cover the timestamp.
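To show what I mean by a timestamp, here is a minimal Python sketch. It assumes the CSV stores the date parts in separate columns named year, month, day and hour (that is my understanding of the PRSA file, but please treat the column names as an assumption). The function names and the sample rows are made up for illustration; they are not taken from my config or from the real data.

```python
import csv
import io
from datetime import datetime


def row_timestamp(row):
    """Combine year/month/day/hour column values into one ISO 8601 string."""
    moment = datetime(int(row["year"]), int(row["month"]),
                      int(row["day"]), int(row["hour"]))
    return moment.isoformat()


def add_timestamps(csv_text):
    """Parse CSV text and return its rows, each with an extra 'timestamp' field."""
    rows = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        row["timestamp"] = row_timestamp(row)
        rows.append(row)
    return rows


# Hypothetical sample rows (assumed column names, invented values).
SAMPLE = "year,month,day,hour,value\n2010,1,1,0,10\n2010,1,1,13,20\n"

if __name__ == "__main__":
    for parsed in add_timestamps(SAMPLE):
        print(parsed["timestamp"], parsed["value"])
```

A single combined field like this is what I would like each event to carry as its time in Elasticsearch, ideally produced by Logstash itself rather than by a separate script.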

Have a nice day!