Hello team,

I am sending data to Elasticsearch. I have .log files that contain the data below, but only the title (first) row gets inserted into Elasticsearch.

Sample log:

Server Started Timestamp SeverityID EventID Severity Message

20161121T183241.000+0400 20161121T183241.000+0400 4 700 Information NUMA: QVS configured to get adapt to NUMA environment

Logstash:

    filter {
      if [type] == "facebook" {
        grok {
          match => {
            message => [
              '%{NUMBER}\s*%{NUMBER}\s*%{WORD:level}\s*%{GREEDYDATA}\s*EBIL\\%{WORD:userid}',
              '%{NUMBER}\s*%{NUMBER}\s*%{WORD:level}\s*%{GREEDYDATA:message1}',
              '%{GREEDYDATA}\s*user\\%{WORD:userid}',
              '%{GREEDYDATA:message1}'
            ]
          }
        }
        mutate {
          add_field => { "log" => "%{time} %{message}" }
        }
        date {
          match => ["time", "yyyyMMdd'T'HHmmss.SSS"]
          target => "@timestamp"
        }
        mutate {
          remove_field => ["message1","message","kafkatime"]
        }
      }
    }
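
For comparison, here is a minimal sketch of a filter that would line up with the six columns of the sample header (Server Started, Timestamp, SeverityID, EventID, Severity, Message). It is untested against the real files and makes several assumptions: the columns are separated by whitespace, the field names `server_started`, `severity_id`, `event_id`, `severity` and `log_message` are hypothetical, and the second column is the one that should become `@timestamp`. Two details of the configuration above motivate it: none of the grok patterns there captures a field named `time`, so the `date` filter and the `%{time}` reference have nothing to work with, and the date pattern has no zone token for the `+0400` suffix. This sketch may not by itself explain why only the header row is indexed.

```
filter {
  if [type] == "facebook" {
    grok {
      # Assumption: six whitespace-separated columns, as in the sample row.
      # The header row does not match (no integers in columns 3 and 4),
      # so it would be tagged _grokparsefailure.
      match => {
        "message" => "^%{NOTSPACE:server_started}\s+%{NOTSPACE:time}\s+%{INT:severity_id}\s+%{INT:event_id}\s+%{WORD:severity}\s+%{GREEDYDATA:log_message}$"
      }
    }
    date {
      # The trailing Z is meant to consume the +0400 offset in the sample timestamp.
      match => ["time", "yyyyMMdd'T'HHmmss.SSSZ"]
      target => "@timestamp"
    }
  }
}
```

With a layout like this, the sample row would be expected to yield `severity_id` 4, `event_id` 700 and `severity` Information, with the rest of the line in `log_message`.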

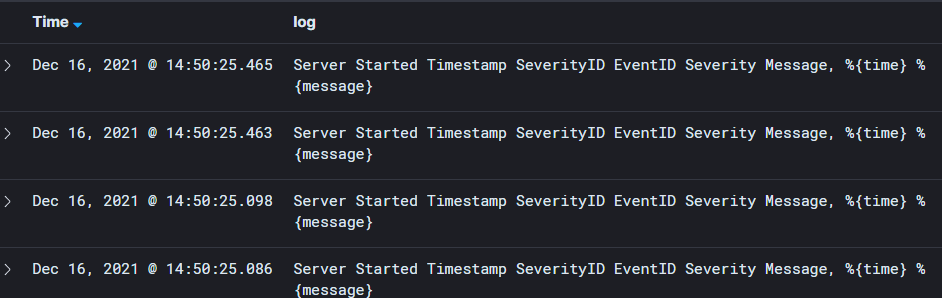

The data in Kibana looks like this: