Guys, I have been trying to deploy an ELK cluster on Azure AKS using the ELK Operator method from here.

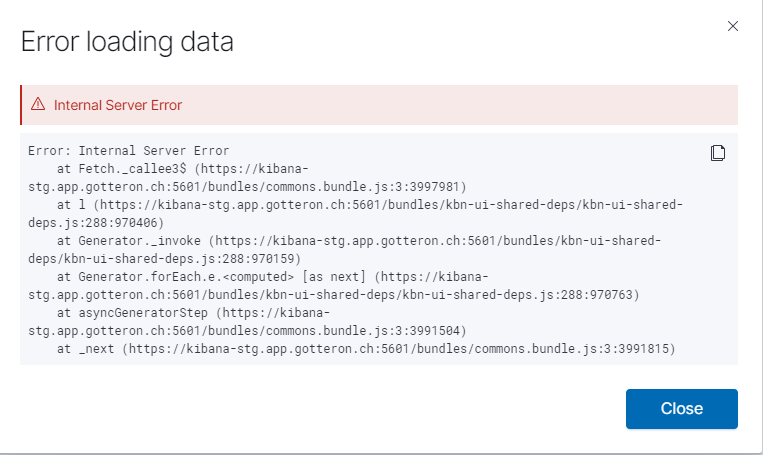

However, after I deploy ELK, access Kibana, and add the Filebeat index, I am unable to query anything in the Discover tab.

I can see that the nodes used to deploy this ELK cluster are hardly being utilized in terms of CPU and memory.

I have not made any custom changes to the deployment; the only change I made was adding our own CA certificate while deploying Kibana.

I am clueless as to where the issue lies and what is causing these timeouts.

Error in the Kibana dashboard:

Health Status "unknown" for elasticsearch pod

Error from the Elasticsearch log:

    {"type": "server", "timestamp": "2020-11-26T18:47:14,483Z", "level": "ERROR", "component": "o.e.c.a.s.ShardStateAction", "cluster.name": "quickstart", "node.name": "quickstart-es-default-0", "message": "[filebeat-7.10.0-2020.11.26-000001][0] unexpected failure while failing shard [shard id [[filebeat-7.10.0-2020.11.26-000001][0]], allocation id [dGatSxahTQ2p1vdcEpS6Xg], primary term [0], message [shard failure, reason [lucene commit failed]], failure [IOException[No space left on device]], markAsStale [true]]", "cluster.uuid": "ibUNaDnCR_-jbjxNyqCflQ", "node.id": "FFqCPSzeQU2w1X_DeHiU9w" ,
    "stacktrace": ["org.elasticsearch.cluster.coordination.FailedToCommitClusterStateException: publication failed",
    "at org.elasticsearch.cluster.coordination.Coordinator$CoordinatorPublication$4.onFailure(Coordinator.java:1431) ~[elasticsearch-7.7.0.jar:7.7.0]",
    "at org.elasticsearch.action.ActionRunnable.onFailure(ActionRunnable.java:88) ~[elasticsearch-7.7.0.jar:7.7.0]",
    "at org.elasticsearch.common.util.concurrent.AbstractRunnable.run(AbstractRunnable.java:39) ~[elasticsearch-7.7.0.jar:7.7.0]",
    "at org.elasticsearch.common.util.concurrent.EsExecutors$DirectExecutorService.execute(EsExecutors.java:225) ~[elasticsearch-7.7.0.jar:7.7.0]",
    "at org.elasticsearch.common.util.concurrent.ListenableFuture.notifyListener(ListenableFuture.java:106) ~[elasticsearch-7.7.0.jar:7.7.0]",
    "at org.elasticsearch.common.util.concurrent.ListenableFuture.addListener(ListenableFuture.java:68) ~[elasticsearch-7.7.0.jar:7.7.0]",
    "at org.elasticsearch.cluster.coordination.Coordinator$CoordinatorPublication.onCompletion(Coordinator.java:1354) ~[elasticsearch-7.7.0.jar:7.7.0]",
    "at org.elasticsearch.cluster.coordination.Publication.onPossibleCompletion(Publication.java:125) ~[elasticsearch-7.7.0.jar:7.7.0]",
    "at org.elasticsearch.cluster.coordination.Publication.onPossibleCommitFailure(Publication.java:173) ~[elasticsearch-7.7.0.jar:7.7.0]",
    "at org.elasticsearch.cluster.coordination.Publication.access$500(Publication.java:42) ~[elasticsearch-7.7.0.jar:7.7.0]",
    "at org.elasticsearch.cluster.coordination.Publication$PublicationTarget$PublishResponseHandler.onFailure(Publication.java:369) ~[elasticsearch-7.7.0.jar:7.7.0]",
    "at org.elasticsearch.cluster.coordination.Coordinator$5.onFailure(Coordinator.java:1120) ~[elasticsearch-7.7.0.jar:7.7.0]",
    "at org.elasticsearch.cluster.coordination.PublicationTransportHandler$2$1.onFailure(PublicationTransportHandler.java:206) ~[elasticsearch-7.7.0.jar:7.7.0]",
    "at org.elasticsearch.cluster.coordination.PublicationTransportHandler.lambda$sendClusterStateToNode$6(PublicationTransportHandler.java:273) ~[elasticsearch-7.7.0.jar:7.7.0]",
    {"type": "server", "timestamp": "2020-11-26T18:48:53,100Z", "level": "ERROR", "component": "o.e.t.TransportService", "cluster.name": "quickstart", "node.name": "quickstart-es-default-0", "message": "failed to handle exception for action [indices:data/read/search[phase/query]], handler [org.elasticsearch.transport.TransportService$ContextRestoreResponseHandler/org.elasticsearch.action.search.SearchTransportService$ConnectionCountingHandler@18e3b2fc/org.elasticsearch.action.search.SearchExecutionStatsCollector@7dd0f04e]", "cluster.uuid": "ibUNaDnCR_-jbjxNyqCflQ", "node.id": "FFqCPSzeQU2w1X_DeHiU9w" ,

It seems to have something to do with "o.e.t.TransportService". Could you please shed some light on this, guys?
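For anyone skimming the log, here is a minimal sketch (plain Python, standard library only) that pulls the nested failure reason out of the `message` field of the first log entry. The function name `extract_failure` and the regular expression are only an illustration and are not part of Elasticsearch or the operator; the message string is copied from the log in this post.

```python
import re

# "message" field of the first log entry in this post, copied as-is.
message = (
    "[filebeat-7.10.0-2020.11.26-000001][0] unexpected failure while failing shard "
    "[shard id [[filebeat-7.10.0-2020.11.26-000001][0]], "
    "allocation id [dGatSxahTQ2p1vdcEpS6Xg], primary term [0], "
    "message [shard failure, reason [lucene commit failed]], "
    "failure [IOException[No space left on device]], markAsStale [true]]"
)


def extract_failure(msg):
    """Return (exception_type, reason) from a 'failure [Type[reason]]' fragment.

    Hypothetical helper for illustration; returns None when no such
    fragment is present in the message.
    """
    match = re.search(r"failure \[(\w+)\[(.*?)\]\]", msg)
    if match is None:
        return None
    return match.group(1), match.group(2)


if __name__ == "__main__":
    print(extract_failure(message))
```

Running this against other entries from the same pod log might help tell whether every failure carries the same underlying reason or whether the `TransportService` error is something separate.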