2023-11-21 13:36:50 [2023-11-21T05:36:50,943][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600, :ssl_enabled=>false}

2023-11-21 13:36:52 [2023-11-21T05:36:52,189][INFO ][org.reflections.Reflections] Reflections took 252 ms to scan 1 urls, producing 132 keys and 464 values

2023-11-21 13:36:52 [2023-11-21T05:36:52,727][ERROR][logstash.outputs.elasticsearch] Unknown setting 'mapping' for elasticsearch

2023-11-21 13:36:52 [2023-11-21T05:36:52,743][ERROR][logstash.agent ] Failed to execute action {:action=>LogStash::PipelineAction::Create/pipeline_id:main, :exception=>"Java::JavaLang::IllegalStateException", :message=>"Unable to configure plugins: (ConfigurationError) Something is wrong with your configuration.", :backtrace=>["org.logstash.config.ir.CompiledPipeline.<init>(CompiledPipeline.java:120)", "org.logstash.execution.AbstractPipelineExt.initialize(AbstractPipelineExt.java:186)", "org.logstash.execution.AbstractPipelineExt$INVOKER$i$initialize.call(AbstractPipelineExt$INVOKER$i$initialize.gen)", "org.jruby.internal.runtime.methods.JavaMethod$JavaMethodN.call(JavaMethod.java:847)", "org.jruby.ir.runtime.IRRuntimeHelpers.instanceSuper(IRRuntimeHelpers.java:1318)", "org.jruby.ir.instructions.InstanceSuperInstr.interpret(InstanceSuperInstr.java:139)", "org.jruby.ir.interpreter.InterpreterEngine.processCall(InterpreterEngine.java:367)", "org.jruby.ir.interpreter.StartupInterpreterEngine.interpret(StartupInterpreterEngine.java:66)", "org.jruby.internal.runtime.methods.MixedModeIRMethod.INTERPRET_METHOD(MixedModeIRMethod.java:128)", "org.jruby.internal.runtime.methods.MixedModeIRMethod.call(MixedModeIRMethod.java:115)", "org.jruby.runtime.callsite.CachingCallSite.cacheAndCall(CachingCallSite.java:452)", "org.jruby.runtime.callsite.CachingCallSite.call(CachingCallSite.java:92)", "org.jruby.RubyClass.newInstance(RubyClass.java:931)", "org.jruby.RubyClass$INVOKER$i$newInstance.call(RubyClass$INVOKER$i$newInstance.gen)", "org.jruby.runtime.callsite.CachingCallSite.cacheAndCall(CachingCallSite.java:452)", "org.jruby.runtime.callsite.CachingCallSite.call(CachingCallSite.java:92)", "org.jruby.ir.instructions.CallBase.interpret(CallBase.java:561)", "org.jruby.ir.interpreter.InterpreterEngine.processCall(InterpreterEngine.java:367)", "org.jruby.ir.interpreter.StartupInterpreterEngine.interpret(StartupInterpreterEngine.java:66)", "org.jruby.ir.interpreter.InterpreterEngine.interpret(InterpreterEngine.java:88)", "org.jruby.internal.runtime.methods.MixedModeIRMethod.INTERPRET_METHOD(MixedModeIRMethod.java:238)", "org.jruby.internal.runtime.methods.MixedModeIRMethod.call(MixedModeIRMethod.java:225)", "org.jruby.internal.runtime.methods.DynamicMethod.call(DynamicMethod.java:228)", "org.jruby.runtime.callsite.CachingCallSite.cacheAndCall(CachingCallSite.java:516)", "org.jruby.runtime.callsite.CachingCallSite.call(CachingCallSite.java:293)", "org.jruby.ir.interpreter.InterpreterEngine.processCall(InterpreterEngine.java:328)", "org.jruby.ir.interpreter.StartupInterpreterEngine.interpret(StartupInterpreterEngine.java:66)", "org.jruby.ir.interpreter.Interpreter.INTERPRET_BLOCK(Interpreter.java:116)", "org.jruby.runtime.MixedModeIRBlockBody.commonYieldPath(MixedModeIRBlockBody.java:136)", "org.jruby.runtime.IRBlockBody.call(IRBlockBody.java:66)", "org.jruby.runtime.IRBlockBody.call(IRBlockBody.java:58)", "org.jruby.runtime.Block.call(Block.java:143)", "org.jruby.RubyProc.call(RubyProc.java:352)", "org.jruby.internal.runtime.RubyRunnable.run(RubyRunnable.java:110)", "java.base/java.lang.Thread.run(Thread.java:840)"]}

2023-11-21 13:36:56 [2023-11-21T05:36:56,136][ERROR][logstash.outputs.elasticsearch] Unknown setting 'mapping' for elasticsearch

2023-11-21 13:36:56 [2023-11-21T05:36:56,143][ERROR][logstash.agent ] Failed to execute action {:action=>LogStash::PipelineAction::Create/pipeline_id:main, :exception=>"Java::JavaLang::IllegalStateException", :message=>"Unable to configure plugins: (ConfigurationError) Something is wrong with your configuration.", :backtrace=>["org.logstash.config.ir.CompiledPipeline.<init>(CompiledPipeline.java:120)", "org.logstash.execution.AbstractPipelineExt.initialize(AbstractPipelineExt.java:186)", "org.logstash.execution.AbstractPipelineExt$INVOKER$i$initialize.call(AbstractPipelineExt$INVOKER$i$initialize.gen)", "org.jruby.internal.runtime.methods.JavaMethod$JavaMethodN.call(JavaMethod.java:847)", "org.jruby.ir.runtime.IRRuntimeHelpers.instanceSuper(IRRuntimeHelpers.java:1318)", "org.jruby.ir.instructions.InstanceSuperInstr.interpret(InstanceSuperInstr.java:139)", "org.jruby.ir.interpreter.InterpreterEngine.processCall(InterpreterEngine.java:367)", "org.jruby.ir.interpreter.StartupInterpreterEngine.interpret(StartupInterpreterEngine.java:66)", "org.jruby.internal.runtime.methods.MixedModeIRMethod.INTERPRET_METHOD(MixedModeIRMethod.java:128)", "org.jruby.internal.runtime.methods.MixedModeIRMethod.call(MixedModeIRMethod.java:115)", "org.jruby.runtime.callsite.CachingCallSite.call(CachingCallSite.java:90)", "org.jruby.RubyClass.newInstance(RubyClass.java:931)", "org.jruby.RubyClass$INVOKER$i$newInstance.call(RubyClass$INVOKER$i$newInstance.gen)", "org.jruby.runtime.callsite.CachingCallSite.call(CachingCallSite.java:90)", "org.jruby.ir.instructions.CallBase.interpret(CallBase.java:561)", "org.jruby.ir.interpreter.InterpreterEngine.processCall(InterpreterEngine.java:367)", "org.jruby.ir.interpreter.StartupInterpreterEngine.interpret(StartupInterpreterEngine.java:66)", "org.jruby.ir.interpreter.InterpreterEngine.interpret(InterpreterEngine.java:88)", "org.jruby.internal.runtime.methods.MixedModeIRMethod.INTERPRET_METHOD(MixedModeIRMethod.java:238)", "org.jruby.internal.runtime.methods.MixedModeIRMethod.call(MixedModeIRMethod.java:225)", "org.jruby.internal.runtime.methods.DynamicMethod.call(DynamicMethod.java:228)", "org.jruby.runtime.callsite.CachingCallSite.call(CachingCallSite.java:291)", "org.jruby.ir.interpreter.InterpreterEngine.processCall(InterpreterEngine.java:328)", "org.jruby.ir.interpreter.StartupInterpreterEngine.interpret(StartupInterpreterEngine.java:66)", "org.jruby.ir.interpreter.Interpreter.INTERPRET_BLOCK(Interpreter.java:116)", "org.jruby.runtime.MixedModeIRBlockBody.commonYieldPath(MixedModeIRBlockBody.java:136)", "org.jruby.runtime.IRBlockBody.call(IRBlockBody.java:66)", "org.jruby.runtime.IRBlockBody.call(IRBlockBody.java:58)", "org.jruby.runtime.Block.call(Block.java:143)", "org.jruby.RubyProc.call(RubyProc.java:352)", "org.jruby.internal.runtime.RubyRunnable.run(RubyRunnable.java:110)", "java.base/java.lang.Thread.run(Thread.java:840)"]}

2023-11-21 13:36:59 [2023-11-21T05:36:59,065][ERROR][logstash.outputs.elasticsearch] Unknown setting 'mapping' for elasticsearch

2023-11-21 13:36:59 [2023-11-21T05:36:59,068][ERROR][logstash.agent ] Failed to execute action {:action=>LogStash::PipelineAction::Create/pipeline_id:main, :exception=>"Java::JavaLang::IllegalStateException", :message=>"Unable to configure plugins: (ConfigurationError) Something is wrong with your configuration.", :backtrace=>["org.logstash.config.ir.CompiledPipeline.<init>(CompiledPipeline.java:120)", "org.logstash.execution.AbstractPipelineExt.initialize(AbstractPipelineExt.java:186)", "org.logstash.execution.AbstractPipelineExt$INVOKER$i$initialize.call(AbstractPipelineExt$INVOKER$i$initialize.gen)", "org.jruby.internal.runtime.methods.JavaMethod$JavaMethodN.call(JavaMethod.java:847)", "org.jruby.ir.runtime.IRRuntimeHelpers.instanceSuper(IRRuntimeHelpers.java:1318)", "org.jruby.ir.instructions.InstanceSuperInstr.interpret(InstanceSuperInstr.java:139)", "org.jruby.ir.interpreter.InterpreterEngine.processCall(InterpreterEngine.java:367)", "org.jruby.ir.interpreter.StartupInterpreterEngine.interpret(StartupInterpreterEngine.java:66)", "org.jruby.internal.runtime.methods.MixedModeIRMethod.INTERPRET_METHOD(MixedModeIRMethod.java:128)", "org.jruby.internal.runtime.methods.MixedModeIRMethod.call(MixedModeIRMethod.java:115)", "org.jruby.runtime.callsite.CachingCallSite.call(CachingCallSite.java:90)", "org.jruby.RubyClass.newInstance(RubyClass.java:931)", "org.jruby.RubyClass$INVOKER$i$newInstance.call(RubyClass$INVOKER$i$newInstance.gen)", "org.jruby.runtime.callsite.CachingCallSite.call(CachingCallSite.java:90)", "org.jruby.ir.instructions.CallBase.interpret(CallBase.java:561)", "org.jruby.ir.interpreter.InterpreterEngine.processCall(InterpreterEngine.java:367)", "org.jruby.ir.interpreter.StartupInterpreterEngine.interpret(StartupInterpreterEngine.java:66)", "org.jruby.ir.interpreter.InterpreterEngine.interpret(InterpreterEngine.java:88)", "org.jruby.internal.runtime.methods.MixedModeIRMethod.INTERPRET_METHOD(MixedModeIRMethod.java:238)", "org.jruby.internal.runtime.methods.MixedModeIRMethod.call(MixedModeIRMethod.java:225)", "org.jruby.internal.runtime.methods.DynamicMethod.call(DynamicMethod.java:228)", "org.jruby.runtime.callsite.CachingCallSite.call(CachingCallSite.java:291)", "org.jruby.ir.interpreter.InterpreterEngine.processCall(InterpreterEngine.java:328)", "org.jruby.ir.interpreter.StartupInterpreterEngine.interpret(StartupInterpreterEngine.java:66)", "org.jruby.ir.interpreter.Interpreter.INTERPRET_BLOCK(Interpreter.java:116)", "org.jruby.runtime.MixedModeIRBlockBody.commonYieldPath(MixedModeIRBlockBody.java:136)", "org.jruby.runtime.IRBlockBody.call(IRBlockBody.java:66)", "org.jruby.runtime.IRBlockBody.call(IRBlockBody.java:58)", "org.jruby.runtime.Block.call(Block.java:143)", "org.jruby.RubyProc.call(RubyProc.java:352)", "org.jruby.internal.runtime.RubyRunnable.run(RubyRunnable.java:110)", "java.base/java.lang.Thread.run(Thread.java:840)"]}

2023-11-21 13:37:02 [2023-11-21T05:37:02,163][ERROR][logstash.outputs.elasticsearch] Unknown setting 'mapping' for elasticsearch

2023-11-21 13:37:02 [2023-11-21T05:37:02,166][ERROR][logstash.agent ] Failed to execute action {:action=>LogStash::PipelineAction::Create/pipeline_id:main, :exception=>"Java::JavaLang::IllegalStateException", :message=>"Unable to configure plugins: (ConfigurationError) Something is wrong with your configuration.", :backtrace=>["org.logstash.config.ir.CompiledPipeline.<init>(CompiledPipeline.java:120)", "org.logstash.execution.AbstractPipelineExt.initialize(AbstractPipelineExt.java:186)", "org.logstash.execution.AbstractPipelineExt$INVOKER$i$initialize.call(AbstractPipelineExt$INVOKER$i$initialize.gen)", "org.jruby.internal.runtime.methods.JavaMethod$JavaMethodN.call(JavaMethod.java:847)", "org.jruby.ir.runtime.IRRuntimeHelpers.instanceSuper(IRRuntimeHelpers.java:1318)", "org.jruby.ir.instructions.InstanceSuperInstr.interpret(InstanceSuperInstr.java:139)", "org.jruby.ir.interpreter.InterpreterEngine.processCall(InterpreterEngine.java:367)", "org.jruby.ir.interpreter.StartupInterpreterEngine.interpret(StartupInterpreterEngine.java:66)", "org.jruby.internal.runtime.methods.MixedModeIRMethod.INTERPRET_METHOD(MixedModeIRMethod.java:128)", "org.jruby.internal.runtime.methods.MixedModeIRMethod.call(MixedModeIRMethod.java:115)", "org.jruby.runtime.callsite.CachingCallSite.call(CachingCallSite.java:90)", "org.jruby.RubyClass.newInstance(RubyClass.java:931)", "org.jruby.RubyClass$INVOKER$i$newInstance.call(RubyClass$INVOKER$i$newInstance.gen)", "org.jruby.runtime.callsite.CachingCallSite.call(CachingCallSite.java:90)", "org.jruby.ir.instructions.CallBase.interpret(CallBase.java:561)", "org.jruby.ir.interpreter.InterpreterEngine.processCall(InterpreterEngine.java:367)", "org.jruby.ir.interpreter.StartupInterpreterEngine.interpret(StartupInterpreterEngine.java:66)", "org.jruby.ir.interpreter.InterpreterEngine.interpret(InterpreterEngine.java:88)", "org.jruby.internal.runtime.methods.MixedModeIRMethod.INTERPRET_METHOD(MixedModeIRMethod.java:238)", "org.jruby.internal.runtime.methods.MixedModeIRMethod.call(MixedModeIRMethod.java:225)", "org.jruby.internal.runtime.methods.DynamicMethod.call(DynamicMethod.java:228)", "org.jruby.runtime.callsite.CachingCallSite.call(CachingCallSite.java:291)", "org.jruby.ir.interpreter.InterpreterEngine.processCall(InterpreterEngine.java:328)", "org.jruby.ir.interpreter.StartupInterpreterEngine.interpret(StartupInterpreterEngine.java:66)", "org.jruby.ir.interpreter.Interpreter.INTERPRET_BLOCK(Interpreter.java:116)", "org.jruby.runtime.MixedModeIRBlockBody.commonYieldPath(MixedModeIRBlockBody.java:136)", "org.jruby.runtime.IRBlockBody.call(IRBlockBody.java:66)", "org.jruby.runtime.IRBlockBody.call(IRBlockBody.java:58)", "org.jruby.runtime.Block.call(Block.java:143)", "org.jruby.RubyProc.call(RubyProc.java:352)", "org.jruby.internal.runtime.RubyRunnable.run(RubyRunnable.java:110)", "java.base/java.lang.Thread.run(Thread.java:840)"]}

2023-11-21 13:37:04 [2023-11-21T05:37:04,996][ERROR][logstash.outputs.elasticsearch] Unknown setting 'mapping' for elasticsearch

2023-11-21 13:37:04 [2023-11-21T05:37:04,998][ERROR][logstash.agent ] Failed to execute action {:action=>LogStash::PipelineAction::Create/pipeline_id:main, :exception=>"Java::JavaLang::IllegalStateException", :message=>"Unable to configure plugins: (ConfigurationError) Something is wrong with your configuration.", :backtrace=>["org.logstash.config.ir.CompiledPipeline.<init>(CompiledPipeline.java:120)", "org.logstash.execution.AbstractPipelineExt.initialize(AbstractPipelineExt.java:186)", "org.logstash.execution.AbstractPipelineExt$INVOKER$i$initialize.call(AbstractPipelineExt$INVOKER$i$initialize.gen)", "org.jruby.internal.runtime.methods.JavaMethod$JavaMethodN.call(JavaMethod.java:847)", "org.jruby.ir.runtime.IRRuntimeHelpers.instanceSuper(IRRuntimeHelpers.java:1318)", "org.jruby.ir.instructions.InstanceSuperInstr.interpret(InstanceSuperInstr.java:139)", "org.jruby.ir.interpreter.InterpreterEngine.processCall(InterpreterEngine.java:367)", "org.jruby.ir.interpreter.StartupInterpreterEngine.interpret(StartupInterpreterEngine.java:66)", "org.jruby.internal.runtime.methods.MixedModeIRMethod.INTERPRET_METHOD(MixedModeIRMethod.java:128)", "org.jruby.internal.runtime.methods.MixedModeIRMethod.call(MixedModeIRMethod.java:115)", "org.jruby.runtime.callsite.CachingCallSite.call(CachingCallSite.java:90)", "org.jruby.RubyClass.newInstance(RubyClass.java:931)", "org.jruby.RubyClass$INVOKER$i$newInstance.call(RubyClass$INVOKER$i$newInstance.gen)", "org.jruby.runtime.callsite.CachingCallSite.call(CachingCallSite.java:90)", "org.jruby.ir.instructions.CallBase.interpret(CallBase.java:561)", "org.jruby.ir.interpreter.InterpreterEngine.processCall(InterpreterEngine.java:367)", "org.jruby.ir.interpreter.StartupInterpreterEngine.interpret(StartupInterpreterEngine.java:66)", "org.jruby.ir.interpreter.InterpreterEngine.interpret(InterpreterEngine.java:88)", "org.jruby.internal.runtime.methods.MixedModeIRMethod.INTERPRET_METHOD(MixedModeIRMethod.java:238)", "org.jruby.internal.runtime.methods.MixedModeIRMethod.call(MixedModeIRMethod.java:225)", "org.jruby.internal.runtime.methods.DynamicMethod.call(DynamicMethod.java:228)", "org.jruby.runtime.callsite.CachingCallSite.call(CachingCallSite.java:291)", "org.jruby.ir.interpreter.InterpreterEngine.processCall(InterpreterEngine.java:328)", "org.jruby.ir.interpreter.StartupInterpreterEngine.interpret(StartupInterpreterEngine.java:66)", "org.jruby.ir.interpreter.Interpreter.INTERPRET_BLOCK(Interpreter.java:116)", "org.jruby.runtime.MixedModeIRBlockBody.commonYieldPath(MixedModeIRBlockBody.java:136)", "org.jruby.runtime.IRBlockBody.call(IRBlockBody.java:66)", "org.jruby.runtime.IRBlockBody.call(IRBlockBody.java:58)", "org.jruby.runtime.Block.call(Block.java:143)", "org.jruby.RubyProc.call(RubyProc.java:352)", "org.jruby.internal.runtime.RubyRunnable.run(RubyRunnable.java:110)", "java.base/java.lang.Thread.run(Thread.java:840)"]}

2023-11-21 13:37:07 [2023-11-21T05:37:07,967][ERROR][logstash.outputs.elasticsearch] Unknown setting 'mapping' for elasticsearch

2023-11-21 13:37:07 [2023-11-21T05:37:07,969][ERROR][logstash.agent ] Failed to execute action {:action=>LogStash::PipelineAction::Create/pipeline_id:main, :exception=>"Java::JavaLang::IllegalStateException", :message=>"Unable to configure plugins: (ConfigurationError) Something is wrong with your configuration.", :backtrace=>["org.logstash.config.ir.CompiledPipeline.<init>(CompiledPipeline.java:120)", "org.logstash.execution.AbstractPipelineExt.initialize(AbstractPipelineExt.java:186)", "org.logstash.execution.AbstractPipelineExt$INVOKER$i$initialize.call(AbstractPipelineExt$INVOKER$i$initialize.gen)", "org.jruby.internal.runtime.methods.JavaMethod$JavaMethodN.call(JavaMethod.java:847)", "org.jruby.ir.runtime.IRRuntimeHelpers.instanceSuper(IRRuntimeHelpers.java:1318)", "org.jruby.ir.instructions.InstanceSuperInstr.interpret(InstanceSuperInstr.java:139)", "org.jruby.ir.interpreter.InterpreterEngine.processCall(InterpreterEngine.java:367)", "org.jruby.ir.interpreter.StartupInterpreterEngine.interpret(StartupInterpreterEngine.java:66)", "org.jruby.internal.runtime.methods.MixedModeIRMethod.INTERPRET_METHOD(MixedModeIRMethod.java:128)", "org.jruby.internal.runtime.methods.MixedModeIRMethod.call(MixedModeIRMethod.java:115)", "org.jruby.runtime.callsite.CachingCallSite.call(CachingCallSite.java:90)", "org.jruby.RubyClass.newInstance(RubyClass.java:931)", "org.jruby.RubyClass$INVOKER$i$newInstance.call(RubyClass$INVOKER$i$newInstance.gen)", "org.jruby.runtime.callsite.CachingCallSite.call(CachingCallSite.java:90)", "org.jruby.ir.instructions.CallBase.interpret(CallBase.java:561)", "org.jruby.ir.interpreter.InterpreterEngine.processCall(InterpreterEngine.java:367)", "org.jruby.ir.interpreter.StartupInterpreterEngine.interpret(StartupInterpreterEngine.java:66)", "org.jruby.ir.interpreter.InterpreterEngine.interpret(InterpreterEngine.java:88)", "org.jruby.internal.runtime.methods.MixedModeIRMethod.INTERPRET_METHOD(MixedModeIRMethod.java:238)", "org.jruby.internal.runtime.methods.MixedModeIRMethod.call(MixedModeIRMethod.java:225)", "org.jruby.internal.runtime.methods.DynamicMethod.call(DynamicMethod.java:228)", "org.jruby.runtime.callsite.CachingCallSite.call(CachingCallSite.java:291)", "org.jruby.ir.interpreter.InterpreterEngine.processCall(InterpreterEngine.java:328)", "org.jruby.ir.interpreter.StartupInterpreterEngine.interpret(StartupInterpreterEngine.java:66)", "org.jruby.ir.interpreter.Interpreter.INTERPRET_BLOCK(Interpreter.java:116)", "org.jruby.runtime.MixedModeIRBlockBody.commonYieldPath(MixedModeIRBlockBody.java:136)", "org.jruby.runtime.IRBlockBody.call(IRBlockBody.java:66)", "org.jruby.runtime.IRBlockBody.call(IRBlockBody.java:58)", "org.jruby.runtime.Block.call(Block.java:143)", "org.jruby.RubyProc.call(RubyProc.java:352)", "org.jruby.internal.runtime.RubyRunnable.run(RubyRunnable.java:110)", "java.base/java.lang.Thread.run(Thread.java:840)"]}

2023-11-21 13:37:11 [2023-11-21T05:37:11,000][ERROR][logstash.outputs.elasticsearch] Unknown setting 'mapping' for elasticsearch

2023-11-21 13:37:11 [2023-11-21T05:37:11,004][ERROR][logstash.agent ] Failed to execute action {:action=>LogStash::PipelineAction::Create/pipeline_id:main, :exception=>"Java::JavaLang::IllegalStateException", :message=>"Unable to configure plugins: (ConfigurationError) Something is wrong with your configuration.", :backtrace=>["org.logstash.config.ir.CompiledPipeline.<init>(CompiledPipeline.java:120)", "org.logstash.execution.AbstractPipelineExt.initialize(AbstractPipelineExt.java:186)", "org.logstash.execution.AbstractPipelineExt$INVOKER$i$initialize.call(AbstractPipelineExt$INVOKER$i$initialize.gen)", "org.jruby.internal.runtime.methods.JavaMethod$JavaMethodN.call(JavaMethod.java:847)", "org.jruby.ir.runtime.IRRuntimeHelpers.instanceSuper(IRRuntimeHelpers.java:1318)", "org.jruby.ir.instructions.InstanceSuperInstr.interpret(InstanceSuperInstr.java:139)", "org.jruby.ir.interpreter.InterpreterEngine.processCall(InterpreterEngine.java:367)", "org.jruby.ir.interpreter.StartupInterpreterEngine.interpret(StartupInterpreterEngine.java:66)", "org.jruby.internal.runtime.methods.MixedModeIRMethod.INTERPRET_METHOD(MixedModeIRMethod.java:128)", "org.jruby.internal.runtime.methods.MixedModeIRMethod.call(MixedModeIRMethod.java:115)", "org.jruby.runtime.callsite.CachingCallSite.call(CachingCallSite.java:90)", "org.jruby.RubyClass.newInstance(RubyClass.java:931)", "org.jruby.RubyClass$INVOKER$i$newInstance.call(RubyClass$INVOKER$i$newInstance.gen)", "org.jruby.runtime.callsite.CachingCallSite.call(CachingCallSite.java:90)", "org.jruby.ir.instructions.CallBase.interpret(CallBase.java:561)", "org.jruby.ir.interpreter.InterpreterEngine.processCall(InterpreterEngine.java:367)", "org.jruby.ir.interpreter.StartupInterpreterEngine.interpret(StartupInterpreterEngine.java:66)", "org.jruby.ir.interpreter.InterpreterEngine.interpret(InterpreterEngine.java:88)", "org.jruby.internal.runtime.methods.MixedModeIRMethod.INTERPRET_METHOD(MixedModeIRMethod.java:238)", "org.jruby.internal.runtime.methods.MixedModeIRMethod.call(MixedModeIRMethod.java:225)", "org.jruby.internal.runtime.methods.DynamicMethod.call(DynamicMethod.java:228)", "org.jruby.runtime.callsite.CachingCallSite.call(CachingCallSite.java:291)", "org.jruby.ir.interpreter.InterpreterEngine.processCall(InterpreterEngine.java:328)", "org.jruby.ir.interpreter.StartupInterpreterEngine.interpret(StartupInterpreterEngine.java:66)", "org.jruby.ir.interpreter.Interpreter.INTERPRET_BLOCK(Interpreter.java:116)", "org.jruby.runtime.MixedModeIRBlockBody.commonYieldPath(MixedModeIRBlockBody.java:136)", "org.jruby.runtime.IRBlockBody.call(IRBlockBody.java:66)", "org.jruby.runtime.IRBlockBody.call(IRBlockBody.java:58)", "org.jruby.runtime.Block.call(Block.java:143)", "org.jruby.RubyProc.call(RubyProc.java:352)", "org.jruby.internal.runtime.RubyRunnable.run(RubyRunnable.java:110)", "java.base/java.lang.Thread.run(Thread.java:840)"]}

2023-11-21 13:37:13 [2023-11-21T05:37:13,995][ERROR][logstash.outputs.elasticsearch] Unknown setting 'mapping' for elasticsearch

2023-11-21 13:37:13 [2023-11-21T05:37:13,996][ERROR][logstash.agent ] Failed to execute action {:action=>LogStash::PipelineAction::Create/pipeline_id:main, :exception=>"Java::JavaLang::IllegalStateException", :message=>"Unable to configure plugins: (ConfigurationError) Something is wrong with your configuration.", :backtrace=>["org.logstash.config.ir.CompiledPipeline.<init>(CompiledPipeline.java:120)", "org.logstash.execution.AbstractPipelineExt.initialize(AbstractPipelineExt.java:186)", "org.logstash.execution.AbstractPipelineExt$INVOKER$i$initialize.call(AbstractPipelineExt$INVOKER$i$initialize.gen)", "org.jruby.internal.runtime.methods.JavaMethod$JavaMethodN.call(JavaMethod.java:847)", "org.jruby.ir.runtime.IRRuntimeHelpers.instanceSuper(IRRuntimeHelpers.java:1318)", "org.jruby.ir.instructions.InstanceSuperInstr.interpret(InstanceSuperInstr.java:139)", "org.jruby.ir.interpreter.InterpreterEngine.processCall(InterpreterEngine.java:367)", "org.jruby.ir.interpreter.StartupInterpreterEngine.interpret(StartupInterpreterEngine.java:66)", "org.jruby.internal.runtime.methods.MixedModeIRMethod.INTERPRET_METHOD(MixedModeIRMethod.java:128)", "org.jruby.internal.runtime.methods.MixedModeIRMethod.call(MixedModeIRMethod.java:115)", "org.jruby.runtime.callsite.CachingCallSite.call(CachingCallSite.java:90)", "org.jruby.RubyClass.newInstance(RubyClass.java:931)", "org.jruby.RubyClass$INVOKER$i$newInstance.call(RubyClass$INVOKER$i$newInstance.gen)", "org.jruby.runtime.callsite.CachingCallSite.call(CachingCallSite.java:90)", "org.jruby.ir.instructions.CallBase.interpret(CallBase.java:561)", "org.jruby.ir.interpreter.InterpreterEngine.processCall(InterpreterEngine.java:367)", "org.jruby.ir.interpreter.StartupInterpreterEngine.interpret(StartupInterpreterEngine.java:66)", "org.jruby.ir.interpreter.InterpreterEngine.interpret(InterpreterEngine.java:88)", "org.jruby.internal.runtime.methods.MixedModeIRMethod.INTERPRET_METHOD(MixedModeIRMethod.java:238)", "org.jruby.internal.runtime.methods.MixedModeIRMethod.call(MixedModeIRMethod.java:225)", "org.jruby.internal.runtime.methods.DynamicMethod.call(DynamicMethod.java:228)", "org.jruby.runtime.callsite.CachingCallSite.call(CachingCallSite.java:291)", "org.jruby.ir.interpreter.InterpreterEngine.processCall(InterpreterEngine.java:328)", "org.jruby.ir.interpreter.StartupInterpreterEngine.interpret(StartupInterpreterEngine.java:66)", "org.jruby.ir.interpreter.Interpreter.INTERPRET_BLOCK(Interpreter.java:116)", "org.jruby.runtime.MixedModeIRBlockBody.commonYieldPath(MixedModeIRBlockBody.java:136)", "org.jruby.runtime.IRBlockBody.call(IRBlockBody.java:66)", "org.jruby.runtime.IRBlockBody.call(IRBlockBody.java:58)", "org.jruby.runtime.Block.call(Block.java:143)", "org.jruby.RubyProc.call(RubyProc.java:352)", "org.jruby.internal.runtime.RubyRunnable.run(RubyRunnable.java:110)", "java.base/java.lang.Thread.run(Thread.java:840)"]}

2023-11-21 13:37:16 [2023-11-21T05:37:16,946][ERROR][logstash.outputs.elasticsearch] Unknown setting 'mapping' for elasticsearch

2023-11-21 13:37:16 [2023-11-21T05:37:16,948][ERROR][logstash.agent ] Failed to execute action {:action=>LogStash::PipelineAction::Create/pipeline_id:main, :exception=>"Java::JavaLang::IllegalStateException", :message=>"Unable to configure plugins: (ConfigurationError) Something is wrong with your configuration.", :backtrace=>["org.logstash.config.ir.CompiledPipeline.<init>(CompiledPipeline.java:120)", "org.logstash.execution.AbstractPipelineExt.initialize(AbstractPipelineExt.java:186)", "org.logstash.execution.AbstractPipelineExt$INVOKER$i$initialize.call(AbstractPipelineExt$INVOKER$i$initialize.gen)", "org.jruby.internal.runtime.methods.JavaMethod$JavaMethodN.call(JavaMethod.java:847)", "org.jruby.ir.runtime.IRRuntimeHelpers.instanceSuper(IRRuntimeHelpers.java:1318)", "org.jruby.ir.instructions.InstanceSuperInstr.interpret(InstanceSuperInstr.java:139)", "org.jruby.ir.interpreter.InterpreterEngine.processCall(InterpreterEngine.java:367)", "org.jruby.ir.interpreter.StartupInterpreterEngine.interpret(StartupInterpreterEngine.java:66)", "org.jruby.internal.runtime.methods.MixedModeIRMethod.INTERPRET_METHOD(MixedModeIRMethod.java:128)", "org.jruby.internal.runtime.methods.MixedModeIRMethod.call(MixedModeIRMethod.java:115)", "org.jruby.runtime.callsite.CachingCallSite.call(CachingCallSite.java:90)", "org.jruby.RubyClass.newInstance(RubyClass.java:931)", "org.jruby.RubyClass$INVOKER$i$newInstance.call(RubyClass$INVOKER$i$newInstance.gen)", "org.jruby.runtime.callsite.CachingCallSite.call(CachingCallSite.java:90)", "org.jruby.ir.instructions.CallBase.interpret(CallBase.java:561)", "org.jruby.ir.interpreter.InterpreterEngine.processCall(InterpreterEngine.java:367)", "org.jruby.ir.interpreter.StartupInterpreterEngine.interpret(StartupInterpreterEngine.java:66)", "org.jruby.ir.interpreter.InterpreterEngine.interpret(InterpreterEngine.java:88)", "org.jruby.internal.runtime.methods.MixedModeIRMethod.INTERPRET_METHOD(MixedModeIRMethod.java:238)", "org.jruby.internal.runtime.methods.MixedModeIRMethod.call(MixedModeIRMethod.java:225)", "org.jruby.internal.runtime.methods.DynamicMethod.call(DynamicMethod.java:228)", "org.jruby.runtime.callsite.CachingCallSite.call(CachingCallSite.java:291)", "org.jruby.ir.interpreter.InterpreterEngine.processCall(InterpreterEngine.java:328)", "org.jruby.ir.interpreter.StartupInterpreterEngine.interpret(StartupInterpreterEngine.java:66)", "org.jruby.ir.interpreter.Interpreter.INTERPRET_BLOCK(Interpreter.java:116)", "org.jruby.runtime.MixedModeIRBlockBody.commonYieldPath(MixedModeIRBlockBody.java:136)", "org.jruby.runtime.IRBlockBody.call(IRBlockBody.java:66)", "org.jruby.runtime.IRBlockBody.call(IRBlockBody.java:58)", "org.jruby.runtime.Block.call(Block.java:143)", "org.jruby.RubyProc.call(RubyProc.java:352)", "org.jruby.internal.runtime.RubyRunnable.run(RubyRunnable.java:110)", "java.base/java.lang.Thread.run(Thread.java:840)"]}

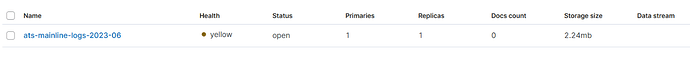

decided to add a pipeline and mapping directory to the json files I obtained from the mapping and ingest pipeline.

Not sure if this is the right format. logstash.conf

output {

elasticsearch {

index => "ats-mainline-logs-2023-06"

hosts => ["https://es01:9200"]

manage_template => false

user => "elastic"

password => "elastic123"

ssl_verification_mode=> "none"

pipeline => "./ats-logs-mainline/ats-logs-ingest pipeline.json"

mapping => "./ats-logs-mainline/ats-logs-mapping.json"

Regarding

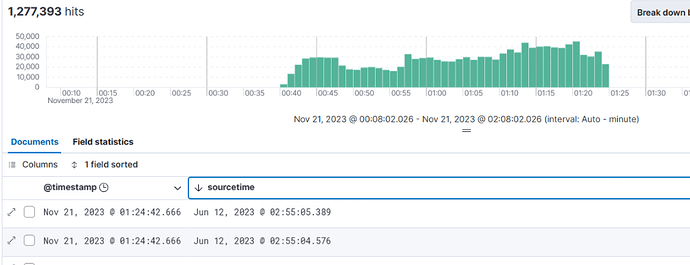

Is there a way to delete rows based on a range of timestamp? I tried to do that but the format isn't right.

format is 1/6/2023 00:00:00 - but as you can see, the display format is just different