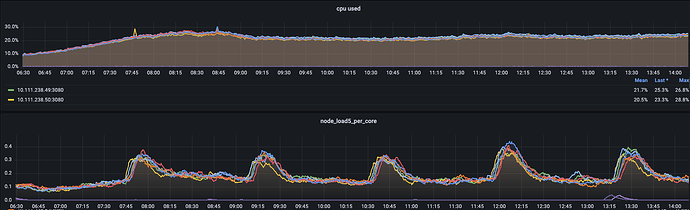

I noticed something odd in the monitoring chart of my Elasticsearch data node: cpuload rises at regular intervals, while cpuused stays stable. Moreover, when cpuload rises, the metrics that could plausibly drive it up, such as CPU user time, CPU iowait time, and context-switch count, also stay flat.

Can executing Elasticsearch queries cause this? If so, what kind of queries might be responsible?

Which version of Elasticsearch are you using?

That is very, very old. I recommend upgrading to at least Elasticsearch 7.17, which should be relatively easy.

Is this a standard Elasticsearch deployment or do you have any third party plugins installed?

Have you tried running the hot threads API during times of increased CPU load? If not, can you try doing that?
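Because the load rises at regular intervals, a single hot threads call can easily miss a spike. Below is a minimal Python sketch for capturing it repeatedly, so that captures from high-load and normal periods can be compared afterwards. It assumes the cluster is reachable over plain HTTP without authentication at a hypothetical `ES_URL`. The helper names `hot_threads_url` and `capture` are illustrative. `threads`, `interval`, `snapshots` and `ignore_idle_threads` are query parameters of the nodes hot threads API.

```python
"""Capture Elasticsearch hot threads output repeatedly (sketch).

Assumption: plain HTTP, no authentication; adjust for TLS/auth as needed.
"""
import time
import urllib.parse
import urllib.request
from datetime import datetime

ES_URL = "http://localhost:9200"  # hypothetical address, replace with yours


def hot_threads_url(base_url: str, threads: int = 5, interval: str = "500ms",
                    snapshots: int = 10) -> str:
    """Build a _nodes/hot_threads URL with the given sampling parameters."""
    query = urllib.parse.urlencode({
        "threads": threads,        # hot threads reported per node (API default is 3)
        "interval": interval,      # sampling interval per measurement
        "snapshots": snapshots,    # stack-trace samples taken per thread
        "ignore_idle_threads": "true",
    })
    return f"{base_url.rstrip('/')}/_nodes/hot_threads?{query}"


def capture(base_url: str, rounds: int, pause_s: float) -> None:
    """Write one hot threads capture per round to a timestamped text file."""
    url = hot_threads_url(base_url)
    for _ in range(rounds):
        with urllib.request.urlopen(url, timeout=60) as resp:
            text = resp.read().decode("utf-8")
        stamp = datetime.now().strftime("%Y%m%dT%H%M%S")
        with open(f"hot_threads_{stamp}.txt", "w", encoding="utf-8") as f:
            f.write(text)
        time.sleep(pause_s)
```

For example, `capture(ES_URL, rounds=60, pause_s=60)` takes one capture per minute for an hour, which should span several load cycles; the file timestamps can then be matched against the cpuload chart.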

Comparing the hot_threads output captured during high CPU load with output captured under normal conditions, I observed the following differences:

```
       app//org.apache.lucene.store.BufferedIndexInput.readBytes(BufferedIndexInput.java:116)
       app//org.apache.lucene.codecs.compressing.LZ4.decompress(LZ4.java:99)
       app//org.apache.lucene.codecs.compressing.CompressionMode$4.decompress(CompressionMode.java:138)
       app//org.apache.lucene.codecs.compressing.CompressingStoredFieldsReader$BlockState.document(CompressingStoredFieldsReader.java:555)
       app//org.apache.lucene.codecs.compressing.CompressingStoredFieldsReader.document(CompressingStoredFieldsReader.java:571)
       app//org.apache.lucene.codecs.compressing.CompressingStoredFieldsReader.visitDocument(CompressingStoredFieldsReader.java:578)
       app//org.apache.lucene.index.CodecReader.document(CodecReader.java:84)
       app//org.apache.lucene.index.FilterLeafReader.document(FilterLeafReader.java:355)
       app//org.elasticsearch.search.fetch.FetchPhase.loadStoredFields(FetchPhase.java:426)
       app//org.elasticsearch.search.fetch.FetchPhase.getSearchFields(FetchPhase.java:233)
       app//org.elasticsearch.search.fetch.FetchPhase.createSearchHit(FetchPhase.java:215)
       app//org.elasticsearch.search.fetch.FetchPhase.execute(FetchPhase.java:163)
       app//org.elasticsearch.search.SearchService.executeFetchPhase(SearchService.java:384)
       app//org.elasticsearch.search.SearchService.executeQueryPhase(SearchService.java:364)
       app//org.elasticsearch.search.SearchService.lambda$executeQueryPhase$1(SearchService.java:340)
       app//org.elasticsearch.search.SearchService$$Lambda$4592/0x00000008018ae840.apply(Unknown Source)
       app//org.elasticsearch.action.ActionListener.lambda$map$2(ActionListener.java:146)
       app//org.elasticsearch.action.ActionListener$$Lambda$3802/0x0000000801684040.accept(Unknown Source)
       app//org.elasticsearch.action.ActionListener$1.onResponse(ActionListener.java:63)
       app//org.elasticsearch.action.ActionRunnable.lambda$supply$0(ActionRunnable.java:58)
       app//org.elasticsearch.action.ActionRunnable$$Lambda$4603/0x00000008018ac440.accept(Unknown Source)
       app//org.elasticsearch.action.ActionRunnable$2.doRun(ActionRunnable.java:73)
       app//org.elasticsearch.common.util.concurrent.AbstractRunnable.run(AbstractRunnable.java:37)
       app//org.elasticsearch.common.util.concurrent.TimedRunnable.doRun(TimedRunnable.java:44)
       app//org.elasticsearch.common.util.concurrent.ThreadContext$ContextPreservingAbstractRunnable.doRun(ThreadContext.java:773)
       app//org.elasticsearch.common.util.concurrent.AbstractRunnable.run(AbstractRunnable.java:37)
       java.base@13.0.1/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1128)
       java.base@13.0.1/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:628)
       java.base@13.0.1/java.lang.Thread.run(Thread.java:830)

   13.3% (66.3ms out of 500ms) cpu usage by thread 'elasticsearch[238051][search][T#25]'
     unique snapshot
       app//org.apache.lucene.geo.Polygon2D.contains(Polygon2D.java:289)
       app//org.apache.lucene.geo.Polygon2D.contains(Polygon2D.java:293)
       app//org.apache.lucene.geo.Polygon2D.contains(Polygon2D.java:289)
       app//org.apache.lucene.geo.Polygon2D.componentContains(Polygon2D.java:80)
       app//org.apache.lucene.geo.Polygon2D.numberOfCorners(Polygon2D.java:210)
       app//org.apache.lucene.geo.Polygon2D.componentRelate(Polygon2D.java:101)
       app//org.apache.lucene.geo.EdgeTree.internalComponentRelate(EdgeTree.java:162)
       app//org.apache.lucene.geo.EdgeTree.relate(EdgeTree.java:108)
       app//org.apache.lucene.geo.GeoEncodingUtils.lambda$createPolygonPredicate$1(GeoEncodingUtils.java:186)
       app//org.apache.lucene.geo.GeoEncodingUtils$$Lambda$4999/0x00000008016af440.apply(Unknown Source)
       app//org.apache.lucene.geo.GeoEncodingUtils.createSubBoxes(GeoEncodingUtils.java:240)
       app//org.apache.lucene.geo.GeoEncodingUtils.createPolygonPredicate(GeoEncodingUtils.java:188)
       app//org.apache.lucene.document.LatLonPointInPolygonQuery.createWeight(LatLonPointInPolygonQuery.java:158)
       app//org.apache.lucene.search.IndexSearcher.createWeight(IndexSearcher.java:721)
       app//org.elasticsearch.search.internal.ContextIndexSearcher.createWeight(ContextIndexSearcher.java:130)
       app//org.apache.lucene.search.BooleanWeight.<init>(BooleanWeight.java:63)
       app//org.apache.lucene.search.BooleanQuery.createWeight(BooleanQuery.java:231)
       app//org.apache.lucene.search.IndexSearcher.createWeight(IndexSearcher.java:721)
       app//org.elasticsearch.search.internal.ContextIndexSearcher.createWeight(ContextIndexSearcher.java:130)
       app//org.apache.lucene.search.IndexSearcher.search(IndexSearcher.java:442)
       app//org.elasticsearch.search.query.QueryPhase.execute(QueryPhase.java:270)
       app//org.elasticsearch.search.query.QueryPhase.execute(QueryPhase.java:113)
       app//org.elasticsearch.search.SearchService.loadOrExecuteQueryPhase(SearchService.java:335)
       app//org.elasticsearch.search.SearchService.executeQueryPhase(SearchService.java:355)
       app//org.elasticsearch.search.SearchService.lambda$executeQueryPhase$1(SearchService.java:340)
       app//org.elasticsearch.search.SearchService$$Lambda$4592/0x00000008018ae840.apply(Unknown Source)
       app//org.elasticsearch.action.ActionListener.lambda$map$2(ActionListener.java:146)
       app//org.elasticsearch.action.ActionListener$$Lambda$3802/0x0000000801684040.accept(Unknown Source)
       app//org.elasticsearch.action.ActionListener$1.onResponse(ActionListener.java:63)
       app//org.elasticsearch.action.ActionRunnable.lambda$supply$0(ActionRunnable.java:58)
       app//org.elasticsearch.action.ActionRunnable$$Lambda$4603/0x00000008018ac440.accept(Unknown Source)
       app//org.elasticsearch.action.ActionRunnable$2.doRun(ActionRunnable.java:73)
       app//org.elasticsearch.common.util.concurrent.AbstractRunnable.run(AbstractRunnable.java:37)
       app//org.elasticsearch.common.util.concurrent.TimedRunnable.doRun(TimedRunnable.java:44)
       app//org.elasticsearch.common.util.concurrent.ThreadContext$ContextPreservingAbstractRunnable.doRun(ThreadContext.java:773)
       app//org.elasticsearch.common.util.concurrent.AbstractRunnable.run(AbstractRunnable.java:37)
       java.base@13.0.1/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1128)
       java.base@13.0.1/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:628)
       java.base@13.0.1/java.lang.Thread.run(Thread.java:830)

   14.9% (74.5ms out of 500ms) cpu usage by thread 'elasticsearch[238050][search][T#19]'
     unique snapshot
       app//org.apache.lucene.geo.GeoEncodingUtils.lambda$createDistancePredicate$0(GeoEncodingUtils.java:169)
       app//org.apache.lucene.geo.GeoEncodingUtils$$Lambda$4876/0x0000000801845440.apply(Unknown Source)
       app//org.apache.lucene.geo.GeoEncodingUtils.createSubBoxes(GeoEncodingUtils.java:240)
       app//org.apache.lucene.geo.GeoEncodingUtils.createDistancePredicate(GeoEncodingUtils.java:171)
       app//org.apache.lucene.document.LatLonPointDistanceQuery$1.<init>(LatLonPointDistanceQuery.java:118)
       app//org.apache.lucene.document.LatLonPointDistanceQuery.createWeight(LatLonPointDistanceQuery.java:116)
       app//org.apache.lucene.search.IndexOrDocValuesQuery.createWeight(IndexOrDocValuesQuery.java:121)
       app//org.apache.lucene.search.IndexSearcher.createWeight(IndexSearcher.java:721)
       app//org.elasticsearch.search.internal.ContextIndexSearcher.createWeight(ContextIndexSearcher.java:130)
       app//org.apache.lucene.search.BooleanWeight.<init>(BooleanWeight.java:63)
       app//org.apache.lucene.search.BooleanQuery.createWeight(BooleanQuery.java:231)
       app//org.apache.lucene.search.IndexSearcher.createWeight(IndexSearcher.java:721)
       app//org.elasticsearch.search.internal.ContextIndexSearcher.createWeight(ContextIndexSearcher.java:130)
       app//org.apache.lucene.search.IndexSearcher.search(IndexSearcher.java:442)
       app//org.elasticsearch.search.query.QueryPhase.execute(QueryPhase.java:270)
       app//org.elasticsearch.search.query.QueryPhase.execute(QueryPhase.java:113)
       app//org.elasticsearch.search.SearchService.loadOrExecuteQueryPhase(SearchService.java:335)
       app//org.elasticsearch.search.SearchService.executeQueryPhase(SearchService.java:355)
       app//org.elasticsearch.search.SearchService.lambda$executeQueryPhase$1(SearchService.java:340)
       app//org.elasticsearch.search.SearchService$$Lambda$4515/0x0000000801881c40.apply(Unknown Source)
       app//org.elasticsearch.action.ActionListener.lambda$map$2(ActionListener.java:146)
       app//org.elasticsearch.action.ActionListener$$Lambda$3802/0x0000000801684040.accept(Unknown Source)
       app//org.elasticsearch.action.ActionListener$1.onResponse(ActionListener.java:63)
       app//org.elasticsearch.action.ActionRunnable.lambda$supply$0(ActionRunnable.java:58)
       app//org.elasticsearch.action.ActionRunnable$$Lambda$4526/0x00000008018a2840.accept(Unknown Source)
       app//org.elasticsearch.action.ActionRunnable$2.doRun(ActionRunnable.java:73)
       app//org.elasticsearch.common.util.concurrent.AbstractRunnable.run(AbstractRunnable.java:37)
       app//org.elasticsearch.common.util.concurrent.TimedRunnable.doRun(TimedRunnable.java:44)
       app//org.elasticsearch.common.util.concurrent.ThreadContext$ContextPreservingAbstractRunnable.doRun(ThreadContext.java:773)
       app//org.elasticsearch.common.util.concurrent.AbstractRunnable.run(AbstractRunnable.java:37)
       java.base@13.0.1/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1128)
       java.base@13.0.1/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:628)
       java.base@13.0.1/java.lang.Thread.run(Thread.java:830)
```
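With many captures, it is easier to count which code paths show up than to compare stack traces by eye. Below is a rough Python sketch that parses hot_threads text into per-thread entries. It assumes the header format shown in the captures above, with CPU time reported in `ms`. `HotThread`, `parse_hot_threads` and `count_markers` are hypothetical helper names, not part of any Elasticsearch client.

```python
import re
from dataclasses import dataclass, field
from typing import Dict, Iterable, List, Optional

# Header format as seen in the captures (assumes "ms" units), e.g.
#   13.3% (66.3ms out of 500ms) cpu usage by thread 'elasticsearch[...][search][T#25]'
HEADER_RE = re.compile(
    r"^\s*(?P<pct>[\d.]+)% \((?P<used>[\d.]+)ms out of (?P<total>[\d.]+)ms\) "
    r"cpu usage by thread '(?P<thread>[^']+)'"
)
# A stack frame is one token followed by "(...)", e.g. Foo.bar(Foo.java:42)
FRAME_RE = re.compile(r"^\S+\(.*\)$")


@dataclass
class HotThread:
    pct: float      # share of the sampling interval spent on CPU
    cpu_ms: float   # CPU time used within the interval, in milliseconds
    thread: str     # thread name, e.g. elasticsearch[node][search][T#25]
    frames: List[str] = field(default_factory=list)


def parse_hot_threads(text: str) -> List[HotThread]:
    """Split hot_threads text into threads with their stack frames."""
    threads: List[HotThread] = []
    current: Optional[HotThread] = None
    for line in text.splitlines():
        header = HEADER_RE.match(line)
        if header:
            current = HotThread(float(header["pct"]), float(header["used"]),
                                header["thread"])
            threads.append(current)
        elif current is not None and FRAME_RE.match(line.strip()):
            # Skips blank lines and "unique snapshot"-style marker lines
            current.frames.append(line.strip())
    return threads


def count_markers(threads: Iterable[HotThread],
                  markers: Iterable[str]) -> Dict[str, int]:
    """Count threads having at least one frame that contains each marker."""
    marker_list = list(markers)
    counts = {m: 0 for m in marker_list}
    for t in threads:
        for m in marker_list:
            if any(m in frame for frame in t.frames):
                counts[m] += 1
    return counts
```

Running `count_markers` separately over captures from spike and non-spike periods, with markers such as `LatLonPointInPolygonQuery`, `LatLonPointDistanceQuery` and `FetchPhase`, gives a rough tally of which code paths dominate each period.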

leandrojmp

July 4, 2024, 12:13pm


To find out which query may be responsible, you can use the following request:

```
GET _tasks?actions=*search*&detailed
```

This shows all search requests running at the moment you send it. You can then analyze those queries and possibly identify which one is impacting your cluster.
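If the response is large, a short script can rank the running search tasks by duration. Below is a minimal Python sketch. It assumes the default node-grouped layout of the tasks API response (`nodes`, then each node's `tasks`, with `running_time_in_nanos`, `action` and `description` per task) and an unauthenticated HTTP endpoint. The function names are hypothetical.

```python
import json
import urllib.request
from typing import Any, Dict, List, Tuple

Row = Tuple[float, str, str, str]  # (running_ms, task_id, action, description)


def fetch_search_tasks(base_url: str) -> Dict[str, Any]:
    """Send GET _tasks?actions=*search*&detailed and decode the JSON body."""
    url = f"{base_url.rstrip('/')}/_tasks?actions=*search*&detailed"
    with urllib.request.urlopen(url, timeout=30) as resp:
        return json.load(resp)


def slowest_search_tasks(resp: Dict[str, Any], min_ms: float = 0.0,
                         top: int = 10) -> List[Row]:
    """Flatten the node-grouped response and sort tasks by running time."""
    rows: List[Row] = []
    for node in resp.get("nodes", {}).values():
        for task_id, task in node.get("tasks", {}).items():
            running_ms = task.get("running_time_in_nanos", 0) / 1e6
            if running_ms >= min_ms:
                rows.append((running_ms, task_id, task.get("action", ""),
                             task.get("description", "")))
    rows.sort(key=lambda row: row[0], reverse=True)
    return rows[:top]
```

Calling `slowest_search_tasks(fetch_search_tasks("http://localhost:9200"), min_ms=100)` a few times while the load is high would show which query bodies (in `description`) are running longest at that moment.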

wangxr1985

November 14, 2024, 1:35pm


Recently, I expanded the cluster by adding some data nodes and increasing the number of replicas for the index. I noticed that the cpuload on the new nodes follows a different periodicity from that of the original nodes. It seems that the cpuload increases are unrelated to user queries and are instead caused by the different times at which the replicas were allocated.

In addition, the index data is static and receives no writes, so no segment merges are taking place. Other metrics that commonly drive up cpuload, such as disk I/O, context-switch counts, and interrupt counts, are also all normal.
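To put a number on "different periodicity", one option is to export each node's cpuload series and estimate its repeat period with autocorrelation. Below is a minimal Python sketch. It assumes evenly spaced samples exported from whatever monitoring system produced the chart above. `autocorrelation` and `dominant_period` are hypothetical helpers. The result is in samples, so multiply it by the sampling interval to get a duration.

```python
from typing import List, Optional, Sequence


def autocorrelation(samples: Sequence[float], max_lag: int) -> List[float]:
    """Biased autocorrelation r[0..max_lag] of an evenly sampled series."""
    n = len(samples)
    mean = sum(samples) / n
    dev = [x - mean for x in samples]
    var = sum(d * d for d in dev)
    if var == 0:
        raise ValueError("series is constant; no period to estimate")
    return [sum(dev[i] * dev[i + lag] for i in range(n - lag)) / var
            for lag in range(max_lag + 1)]


def dominant_period(samples: Sequence[float],
                    max_lag: Optional[int] = None) -> Optional[int]:
    """Estimate the repeat period, in samples, of a roughly periodic series.

    Skips lags before the autocorrelation first drops below zero (the
    trivial peak around lag 0), then returns the lag with the highest
    autocorrelation. Returns None if no such dip occurs within max_lag.
    """
    if max_lag is None:
        max_lag = len(samples) // 2
    r = autocorrelation(samples, max_lag)
    first_dip = next((lag for lag in range(1, max_lag + 1) if r[lag] < 0), None)
    if first_dip is None:
        return None
    return max(range(first_dip, max_lag + 1), key=lambda lag: r[lag])
```

Comparing the estimated period, and where the peaks fall in time, between the original and new nodes would show whether each node's cycle lines up with when its replicas were allocated; it would not by itself identify which process is generating the load.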