Hi guys,

I would really appreciate your advice on this one.

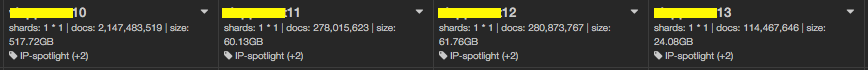

There is one Elasticsearch node with four indices, as kopf shows in the screenshot above.

After 22 hours, the __10 index stops indexing documents, while the other indices operate as expected.

The data is indexed using bulk requests over HTTP, and this is the log from when it is "not working".

The response is 200:

2016-05-24 12:26:36.910 : DEBUG : IPacc-datastore : bin_packing_cache : > process_id "31922768" [IPacc:cache] found data "IPacc:cache:__10:pmacct" and calculated "2" bins of size "150000" plus "41974" messages

2016-05-24 12:27:05.100 : DEBUG : IPacc-datastore : bin_packing_cache : > {'req': <Response [200]>, 'pid': 31922768, 'rc': True}

2016-05-24 12:27:05.631 : DEBUG : IPacc-datastore : bin_packing_cache : > {'req': <Response [200]>, 'pid': 31922768, 'rc': True}

2016-05-24 12:27:05.886 : DEBUG : IPacc-datastore : bin_packing_cache : > {'req': <Response [200]>, 'pid': 31922768, 'rc': True}
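
Since the log above only records the HTTP status, a per-item check of the bulk response body may be worth adding. As far as I understand the bulk API, a 200 only means the request as a whole was processed; individual items can still be rejected, which is flagged by `errors` in the JSON body, with a `status` on each item. A minimal sketch, assuming the parsed JSON body of the `_bulk` response is passed in (the helper name `failed_bulk_items` is hypothetical and not part of the datastore code):

```python
def failed_bulk_items(body):
    """Return (action, status, error) for every failed item in a _bulk response body."""
    # The top-level "errors" flag is true when at least one item failed.
    if not body.get("errors"):
        return []
    failures = []
    for item in body.get("items", []):
        # Each item is a one-key dict such as {"index": {...}} or {"create": {...}}.
        for action, result in item.items():
            status = result.get("status", 0)
            if status >= 300:
                failures.append((action, status, result.get("error")))
    return failures
```

With the `requests` response seen in the log, this could be called as `failed_bulk_items(response.json())` and the result logged next to the status code.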

Could you please help me find out what is going wrong?

Nikos

Indeed, I read the guideline. But time-based indices do not fit well in my data pipeline because of the bulk insertion. According to the documentation, _ttl will be replaced with another mechanism (a retention policy).
