So I have the rr index, with the mapping:

{

"mappings": {

"properties": {

"amount EUR": {

"type": "float"

},

"amount USD": {

"type": "float"

},

"date": {

"type": "date",

"format": "epoch_millis"

},

"email": {

"type": "keyword"

}

}

}

}

You have 38k records with various dates, "grouped" in months. One record look like:

{

"_index": "rr",

"_id": "xxxID",

"_version": 3,

"_score": 1,

"_source": {

"date": "1658721600000",

"email": "john.doe@example.com",

"amount USD": "200",

"amount EUR": "170.1722"

},

"fields": {

"date": [

"2022-07-25T04:00:00.000Z"

],

"amount EUR": [

170.1722

],

"amount USD": [

200

],

"email": [

"john.doe@example.com"

]

}

}

To give you a simple example, you can have multiple records with the same email during the same month (multiple products) and the number of active customers is simple to compute (unique_count('email')). Monthly recurring revenue is also simple to compute (sum('amount USD')).

I can also compute how many new customers are added/removed each month with the help of differences.

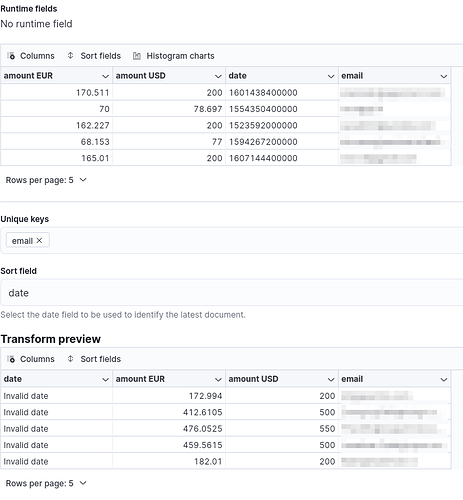

But to get the churn, I needed to create the transform you helped me with: I created a new index with the same mapping than rr (called lastrr) containing ONLY last records of my user from the original index.

This works perfectly, and I can compute how many customers I'm losing every month, and even the amount.

The last part is computing the churn, which is "lost customers" (from lastrr index) divided by unique_count('email') from rr index. Since lastrr doesn't have all emails records from rr.

I hope it's more clear, sorry if I am not, the fact I'm not an ES expert might made me using bad vocabulary to describe what I'm trying to achieve.

Thank you very much for your assistance!