I have an Elastic Agent running in a Docker container with the Custom Logs integration. The agent emits this warning when ingesting logs:

2024-09-11 11:14:59 {"log.level":"warn","@timestamp":"2024-09-11T15:14:59.598Z","message":"Cannot index event (status=400): dropping event! Look at the event log to view the event and cause.","component":{"binary":"filebeat","dataset":"elastic_agent.filebeat","id":"log-default","type":"log"},"log":{"source":"log-default"},"log.logger":"elasticsearch","log.origin":{"file.line":488,"file.name":"elasticsearch/client.go","function":"github.com/elastic/beats/v7/libbeat/outputs/elasticsearch.(*Client).applyItemStatus"},"service.name":"filebeat","ecs.version":"1.6.0","ecs.version":"1.6.0"}

I found that the agent is logging locally to: /usr/share/elastic-agent/state/data/logs/

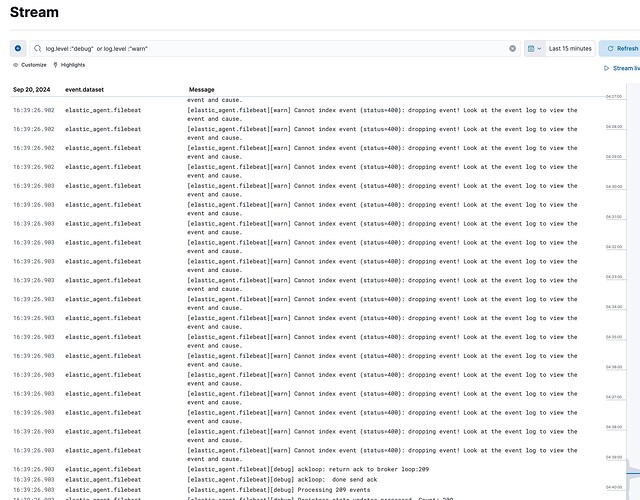

I also found the stream it is logging to.

The warning above appears in both locations. However, neither location contains further details or the event log that the warning mentions. I raised the Elastic Agent log level to debug and still found no useful information.
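For anyone repeating the search, here is a minimal Python sketch of how the log files could be scanned for the dropped-event warning. It assumes the agent writes one JSON object per line into `*.ndjson` files under the log directory (the extension is an assumption, adjust the glob if yours differs) and that Docker may prepend a timestamp before the JSON. The function names are hypothetical helpers, not part of any Elastic tooling.

```python
import json
from pathlib import Path
from typing import Iterable, Iterator, Optional

# Text taken from the warning quoted above.
DROP_MARKER = "Cannot index event"


def parse_agent_line(line: str) -> Optional[dict]:
    """Parse one log line, skipping any non-JSON prefix such as a Docker timestamp."""
    start = line.find("{")
    if start == -1:
        return None
    try:
        return json.loads(line[start:])
    except json.JSONDecodeError:
        return None


def find_dropped_event_warnings(lines: Iterable[str]) -> Iterator[dict]:
    """Yield parsed records whose message contains the dropped-event warning."""
    for line in lines:
        record = parse_agent_line(line)
        if record and DROP_MARKER in record.get("message", ""):
            yield record


def scan_log_dir(log_dir: str) -> list:
    """Collect dropped-event warnings from every *.ndjson file below log_dir (assumed layout)."""
    hits = []
    for path in sorted(Path(log_dir).rglob("*.ndjson")):
        with path.open(encoding="utf-8", errors="replace") as handle:
            hits.extend(find_dropped_event_warnings(handle))
    return hits
```

Calling `scan_log_dir("/usr/share/elastic-agent/state/data/logs/")` inside the container would return the matching records, which at least confirms how often events are being dropped even though it does not reveal the cause.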

From other discussions I learned that status=400 can indicate a mapping error. When I sent a document through the pipeline from the console, I got this error:

{
  "error": {
    "root_cause": [
      {
        "type": "document_parsing_exception",
        "reason": "[1:258] object mapping for [host] tried to parse field [host] as object, but found a concrete value"
      }
    ],
    "type": "document_parsing_exception",
    "reason": "[1:258] object mapping for [host] tried to parse field [host] as object, but found a concrete value"
  },
  "status": 400
}
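To illustrate what this error means, here is a small Python sketch that flags top-level fields the mapping treats as objects but the document sets to a plain value. The documents, the hostname `web-01`, and the function names are hypothetical; the only detail taken from the error is that `host` is mapped as an object. It is a rough pre-check under those assumptions, not a reimplementation of Elasticsearch's parsing.

```python
from typing import Any


def is_object_like(value: Any) -> bool:
    """True for values an object mapping can accept: objects, nulls, or arrays of those."""
    if value is None or isinstance(value, dict):
        return True
    if isinstance(value, list):
        return all(is_object_like(item) for item in value)
    return False


def find_object_conflicts(document: dict, object_fields: set) -> list:
    """Return fields mapped as objects that the document sets to a concrete value."""
    return [
        field
        for field in sorted(object_fields)
        if field in document and not is_object_like(document[field])
    ]


# Hypothetical documents: the first mirrors the failing case, the second the accepted shape.
bad_doc = {"message": "hello", "host": "web-01"}
good_doc = {"message": "hello", "host": {"name": "web-01"}}

print(find_object_conflicts(bad_doc, {"host"}))   # ['host']
print(find_object_conflicts(good_doc, {"host"}))  # []
```

In other words, the document that failed presumably carried `host` as a string, while the index mapping expects `host` to be an object with subfields.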

My ingest pipeline has failure processors, but they are not logging anything. That makes sense, since this looks like a mapping error rather than an ingest pipeline error.

I infer that the Elastic Agent Custom Logs integration is hitting these same errors, but I can find no evidence of them in any of the agent's log files or streams.

Where would I find these error details so I can diagnose future errors?

Additionally, what should I be monitoring to ensure that logs are being fully ingested?