Ok thanks, can you also share the contents of the nginx.yml file you customized?

Sure.

```yaml
# Module: nginx
# Docs: https://www.elastic.co/guide/en/beats/filebeat/main/filebeat-module-nginx.html

- module: nginx
  # Access logs
  access:
    enabled: true

    # Set custom paths for the log files. If left empty,
    # Filebeat will choose the paths depending on your OS.
    var.paths: ["C:/Program Files/nginx-1.25.3/logs"]

  # Error logs
  error:
    enabled: true

    # Set custom paths for the log files. If left empty,
    # Filebeat will choose the paths depending on your OS.
    var.paths: ["C:/Program Files/nginx-1.25.3/logs"]

  # Ingress-nginx controller logs. This is disabled by default. It could be used in Kubernetes environments to parse ingress-nginx logs
  ingress_controller:
    enabled: false

    # Set custom paths for the log files. If left empty,
    # Filebeat will choose the paths depending on your OS.
    #var.paths:
```

These settings need to point to files, either directly or via wildcards; you currently have them pointing at directories.

```yaml
- module: nginx
  access:
    enabled: true
    var.paths: ["/path/to/log/nginx/access.log*"]
  error:
    enabled: true
    var.paths: ["/path/to/log/nginx/error.log*"]
```
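As a rough illustration of why a bare directory gives Filebeat nothing to harvest while a wildcard does, here is a Python sketch using the standard `glob` module on a temporary directory. It is only an analogy: Filebeat is written in Go and does its own pattern expansion, and the file names below are hypothetical stand-ins for the nginx logs folder.

```python
import glob
import os
import tempfile


def matching_files(pattern):
    """Return the base names of the regular files a path pattern expands to."""
    # glob returns existing paths that match the pattern; directories are
    # dropped because only files can be read line by line.
    return sorted(os.path.basename(p) for p in glob.glob(pattern) if os.path.isfile(p))


with tempfile.TemporaryDirectory() as logs_dir:
    # Hypothetical stand-ins for the files in the nginx logs directory.
    for name in ("access.log", "access.log.1", "error.log"):
        open(os.path.join(logs_dir, name), "w").close()

    # A directory path expands only to the directory itself, not to any file.
    dir_only = matching_files(logs_dir)
    # Wildcard patterns expand to the log files, including rotated copies.
    access = matching_files(os.path.join(logs_dir, "access.log*"))
    error = matching_files(os.path.join(logs_dir, "error.log*"))

print(dir_only)  # []
print(access)    # ['access.log', 'access.log.1']
print(error)     # ['error.log']
```

The trailing `*` is what lets rotated files such as `access.log.1` be picked up alongside the live file.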

I made the changes to my nginx.yml file. Now it's "C:/Program Files/nginx-1.25.3/logs/error.log*", but unfortunately the output remains the same.

Now that we've fixed the paths, can you run filebeat -e -d "*" and share the output again?

Sure. Here are the changes in the message fields from the last run.

```
message : Start next scan
message : Check file for harvesting: C:\\Program Files\\nginx-1.25.3\\logs\\error.log
message : Check file for harvesting: C:\\Program Files\\nginx-1.25.3\\logs\\access.log
message : Update existing file for harvesting: C:\\Program Files\\nginx-1.25.3\\logs\\access.log offset: 802
message : Update existing file for harvesting: C:\\Program Files\\nginx-1.25.3\\logs\\error.log offset: 772
message : File didn't change: C:\\Program Files\\nginx-1.25.3\\logs\\access.log
message : File didn't change: C:\\Program Files\\nginx-1.25.3\\logs\\error.log
message : input states cleaned up. Before: 1 After: 1 Pending: 0
message : input states cleaned up. Before: 1 After: 1 Pending: 0
message : Registry file updated. 2 active states.
message : Start next scan
message : File scan complete
message : Run input
```

It looks like Filebeat believes it has read the files and forwarded the logs. Did you check the index in Elasticsearch to see whether the logs are present?

Using the console in DevTools, I queried the filebeat-* index and all the logs are present. Here's a sample of the output when I ran this query:

```
GET filebeat-*/_search
{
  "query": {
    "match_all": {}
  }
}
```

Output:

```
"event": {
  "ingested": "2024-02-16T14:18:59.560536200Z",
  "original": "127.0.0.1 - - [12/Feb/2024:10:48:12 +0530] \"GET / HTTP/1.1\" 200 615 \"-\" \"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/121.0.0.0 Safari/537.36\"",
  "timezone": "+05:30",
  "created": "2024-02-16T14:18:46.548Z",
  "kind": "event",
  "module": "nginx",
  "category": [
    "web"
  ],
  "type": [
    "access"
  ],
  "dataset": "nginx.access",
  "outcome": "success"
}
```

So it looks like Filebeat has loaded the logs into Elasticsearch, but Kibana cannot display them: I'm still getting the "400 Bad Request" in the Kibana console window.

So I did some digging around in my Elasticsearch and I found the following things:

- When I ran `GET _cat/indices` in the console, I could see the Filebeat data stream as `.ds-filebeat-8.12.0-2024.02.06-000001`. However, I cannot delete the data stream, and when I run `GET /_data_stream/.ds-filebeat-8.12.0-2024.02.06-000001`, the output is empty: `{ "data_streams": [] }`.
- When I go to Search -> Content -> Indices, I can find the Filebeat data stream under the hidden indices, and it contains all the nginx logs/documents.

I'm not sure if this helps make progress with the issue at hand, but I guess this confirms that Filebeat is sending the log files to Elasticsearch, and Elasticsearch is storing them. It's just Kibana's dashboards that cannot display them.

Hi @NotTheRealV, progress, but I am a little confused...

When you say console, do you mean Kibana -> Dev Tools?

That is not a proper command; I'm not sure what you expect to get out of it.

I think you are trying to run this, which shows the backing indices:

GET /_data_stream/filebeat-8.12.0
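The `_data_stream` API expects the data stream name, not the name of one of its backing indices, which is why querying the `.ds-...` name returns an empty list. Elasticsearch names backing indices `.ds-<data-stream>-<yyyy.MM.dd>-<generation>`, so the stream name can be read back out of the index name. A minimal Python sketch, assuming that naming convention; the helper `data_stream_name` is hypothetical:

```python
import re

# Backing index names follow .ds-<data-stream>-<yyyy.MM.dd>-<generation>,
# where generation is a six-digit, zero-padded counter.
BACKING_INDEX = re.compile(
    r"^\.ds-(?P<stream>.+)-(?P<date>\d{4}\.\d{2}\.\d{2})-(?P<generation>\d{6})$"
)


def data_stream_name(backing_index):
    """Return the data stream a backing index belongs to, or None if the name doesn't match."""
    match = BACKING_INDEX.match(backing_index)
    return match.group("stream") if match else None


name = data_stream_name(".ds-filebeat-8.12.0-2024.02.06-000001")
print(name)                         # filebeat-8.12.0
print(f"GET /_data_stream/{name}")  # GET /_data_stream/filebeat-8.12.0
```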

When you go to Discover and use the filebeat-* data view, do you see your data?

When you open a document does it look fully parsed?

Also can you run

GET filebeat-*/_search

and show a full document of an access log, not just a snippet?

And you definitely ran setup, right? Before you started sending the logs?

Exactly which dashboards? Can you show them? What do they look like? Do you have the correct time range?

It is possible that you have non-standard nginx logs, and that is why they are not displaying...

You could share a couple of the raw log lines and we might be able to check.

Yes, I meant Kibana -> Dev Tools -> Console Tab

Well, I ran GET /_data_stream/.ds-filebeat-8.12.0-2024.02.06-000001 because I was getting errors the first time I tried GET filebeat-*/_search, so I tried viewing all the indices. I saw that .ds-filebeat-8.12.0-2024.02.06-000001 was a data stream, so I searched the Elasticsearch documentation and found this command: GET /_data_stream.

I ran that to get this result:

```
{
  "data_streams": []
}
```

I assumed the result meant that the index was empty. However, now when I run GET filebeat-*/_search it works fine and all the documents are visible. The errors I saw were probably due to mistakes I made when I was running Filebeat the other day.

When you go to Discover and use the filebeat-* data view, do you see your data?

When you open a document does it look fully parsed?

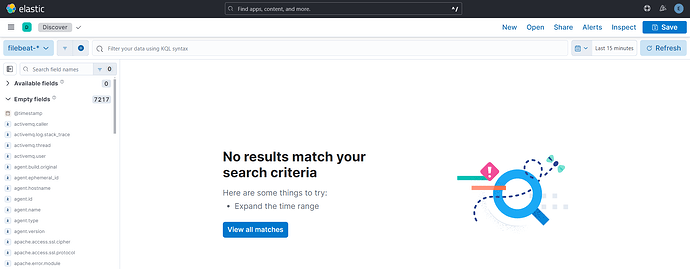

As I mentioned before, on my Discover page, the filebeat-* data view just shows empty fields as seen in this image:

Exactly which dashboards? Can you show them? What do they look like? Do you have the correct time range?

I was viewing the Dashboards -> Editing [Filebeat Nginx] Access and error logs ECS.

I've refreshed the time range multiple times and the Discover/Dashboard view does not change.

Also can you run GET filebeat-*/_search and show a full document of an access log, not just a snippet?

You could share a couple of the raw log lines and we might be able to check.

Sure, here are some of the raw log lines from the nginx/access.log and a full document of an access log in filebeat-*:

Log Lines from nginx/access.log:

```
127.0.0.1 - - [12/Feb/2024:10:48:12 +0530] "GET / HTTP/1.1" 200 615 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/121.0.0.0 Safari/537.36"
127.0.0.1 - - [12/Feb/2024:10:48:12 +0530] "GET /favicon.ico HTTP/1.1" 404 555 "http://localhost/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/121.0.0.0 Safari/537.36"
```
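One quick way to check whether lines like these are "standard" is to test them against nginx's default `combined` log format, which is what the Filebeat nginx module is built to parse out of the box. The Python regex below is an approximation written for illustration only; the module itself parses lines with grok patterns in an ingest pipeline, not with this code, and `parse_combined` is a hypothetical helper.

```python
import re

# Approximation of nginx's default "combined" log_format:
# $remote_addr - $remote_user [$time_local] "$request" $status $body_bytes_sent "$http_referer" "$http_user_agent"
COMBINED = re.compile(
    r'^(?P<remote_addr>\S+) - (?P<remote_user>\S+) \[(?P<time_local>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) HTTP/(?P<http_version>[\d.]+)" '
    r'(?P<status>\d{3}) (?P<bytes>\d+) "(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"$'
)


def parse_combined(line):
    """Return a dict of fields for a combined-format line, or None if it doesn't match."""
    match = COMBINED.match(line)
    if match is None:
        return None
    fields = match.groupdict()
    fields["status"] = int(fields["status"])
    fields["bytes"] = int(fields["bytes"])
    return fields


lines = [
    '127.0.0.1 - - [12/Feb/2024:10:48:12 +0530] "GET / HTTP/1.1" 200 615 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/121.0.0.0 Safari/537.36"',
    '127.0.0.1 - - [12/Feb/2024:10:48:12 +0530] "GET /favicon.ico HTTP/1.1" 404 555 "http://localhost/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/121.0.0.0 Safari/537.36"',
]
for line in lines:
    parsed = parse_combined(line)
    print(parsed["method"], parsed["path"], parsed["status"], parsed["bytes"])
# GET / 200 615
# GET /favicon.ico 404 555
```

Both lines match, and the extracted values line up with `http.request.method`, `url.original`, `http.response.status_code` and `http.response.body.bytes` in the parsed document, which suggests the log format itself is not the problem.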

Full document of access log from filebeat-*:

```
{
  "_index": ".ds-filebeat-8.12.0-2024.02.06-000001",
  "_id": "0MJIso0BmY7W8rCq6hbx",
  "_score": 1,
  "_source": {
    "agent": {
      "name": "$LAPTOP NAME",
      "id": "$ID",
      "ephemeral_id": "$EPHEMERAL_ID",
      "type": "filebeat",
      "version": "8.12.0"
    },
    "nginx": {
      "access": {
        "remote_ip_list": [
          "127.0.0.1"
        ]
      }
    },
    "log": {
      "file": {
        "path": """C:\Program Files\nginx-1.25.3\logs\access.log"""
      },
      "offset": 0
    },
    "source": {
      "address": "127.0.0.1",
      "ip": "127.0.0.1"
    },
    "fileset": {
      "name": "access"
    },
    "url": {
      "path": "/",
      "original": "/"
    },
    "input": {
      "type": "log"
    },
    "@timestamp": "2024-02-12T05:18:12.000Z",
    "ecs": {
      "version": "1.12.0"
    },
    "_tmp": {},
    "related": {
      "ip": [
        "127.0.0.1"
      ]
    },
    "service": {
      "type": "nginx"
    },
    "host": {
      "hostname": "$LAPTOP_NAME",
      "os": {
        $OS_DETAILS
      },
      "ip": [
        $IP_DETAILS
      ],
      "name": "$LAPTOP_NAME",
      "id": "$ID",
      "mac": [
        $MAC_ADDR_DETAILS
      ],
      "architecture": "x86_64"
    },
    "http": {
      "request": {
        "method": "GET"
      },
      "response": {
        "status_code": 200,
        "body": {
          "bytes": 615
        }
      },
      "version": "1.1"
    },
    "event": {
      "ingested": "2024-02-16T14:18:59.560536200Z",
      "original": "127.0.0.1 - - [12/Feb/2024:10:48:12 +0530] \"GET / HTTP/1.1\" 200 615 \"-\" \"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/121.0.0.0 Safari/537.36\"",
      "timezone": "+05:30",
      "created": "2024-02-16T14:18:46.548Z",
      "kind": "event",
      "module": "nginx",
      "category": [
        "web"
      ],
      "type": [
        "access"
      ],
      "dataset": "nginx.access",
      "outcome": "success"
    },
    "user_agent": {
      "original": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/121.0.0.0 Safari/537.36",
      "os": {
        "name": "Windows",
        "version": "10",
        "full": "Windows 10"
      },
      "name": "Chrome",
      "device": {
        "name": "Other"
      },
      "version": "121.0.0.0"
    }
  }
}
```

"@timestamp": "2024-02-12T05:18:12.000Z"

Perhaps in Discover, set the time range to Last 90 days. Your data is several weeks old, so you have to set the time range to cover the period where your data is.

If that works, try the same on the dashboards.
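The reason a short time range shows nothing is that Discover and the dashboards filter on `@timestamp`, which the nginx module takes from the time inside the log line (converted to UTC), not from when Filebeat shipped the event (`event.created` / `event.ingested`). A small Python sketch using the values from the document shows the gap. Treating the ingest time as "now" is an assumption for illustration, and the 15-minute window stands in for Kibana's default "Last 15 minutes" range; `in_window` is a hypothetical helper.

```python
from datetime import datetime, timedelta, timezone

# The time in the raw nginx line uses nginx's $time_local format.
local_time = datetime.strptime("12/Feb/2024:10:48:12 +0530", "%d/%b/%Y:%H:%M:%S %z")
# @timestamp is stored in UTC.
event_timestamp = local_time.astimezone(timezone.utc)
print(event_timestamp.isoformat())  # 2024-02-12T05:18:12+00:00

# When the document was ingested (event.ingested, truncated to whole seconds).
ingested = datetime(2024, 2, 16, 14, 18, 59, tzinfo=timezone.utc)


def in_window(ts, now, window):
    """True if ts falls inside a relative time range such as 'Last <window>'."""
    return now - window <= ts <= now


# Looking at the data right after ingest: a 15-minute range misses the event,
# while a 90-day range includes it.
print(in_window(event_timestamp, ingested, timedelta(minutes=15)))  # False
print(in_window(event_timestamp, ingested, timedelta(days=90)))     # True
```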

Yes, that worked. All the documents are present. I tried it with the RabbitMQ module as well, and changing the time range worked. Before, when I changed the time range, I didn't understand that documents are placed on the timeline by their own timestamp, not by when the Filebeat index became active. Sorry for the confusion, and thank you very much for your help!
