I configured Elasticsearch, Logstash and Kibana after lots of errors. I also configured the syslogd settings on my Fortinet firewall to send logs to the ELK server, but I am not getting any data from the firewall. Elasticsearch, Kibana and Logstash are all installed on one Ubuntu server. I need to collect all types of logs: threat logs, event logs, network logs, Wi-Fi logs, etc. Kindly help me.

There are a few things that I'd suggest you take a look at:

- Use something like `tcpdump` to listen on the interface/port that should be receiving the syslog messages and see if packets are actually being received from the Fortinet firewall.

- Make sure your protocols match: if your firewall is sending via `udp`, make sure you're listening on `udp`.

- Make sure you're actually listening on the correct port and that it is properly exposed.

- Depending on if it's possible, Elastic offers a Fortinet Module which I've found to be decent. You could use this instead of Logstash, and it may help streamline your log collection.
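If `tcpdump` is not at hand, a throwaway UDP listener can answer the first question in the list above (are datagrams arriving at all?). This is only a minimal Python sketch, assuming nothing else (Filebeat, Logstash) is currently bound to the port; the function names `open_udp_listener` and `receive_one_datagram` are made up for illustration and are not part of any Elastic tooling. On Linux, binding to ports below 1024 such as 514 normally requires root.

```python
import socket


def open_udp_listener(host="0.0.0.0", port=514):
    """Bind a UDP socket on host:port and return it."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind((host, port))
    return sock


def receive_one_datagram(sock, timeout=10.0, bufsize=65535):
    """Wait up to `timeout` seconds for a single datagram.

    Returns (payload_bytes, (sender_ip, sender_port)), or None on timeout.
    """
    sock.settimeout(timeout)
    try:
        return sock.recvfrom(bufsize)
    except socket.timeout:
        return None


# Example use (run as root for port 514):
#   listener = open_udp_listener()
#   print(receive_one_datagram(listener, timeout=30.0))
```

If this prints `None` while the firewall is sending, the packets are not reaching the process, and the next things to look at are the port, the protocol, and any host firewall in between.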

I have installed Filebeat and Packetbeat. The file /etc/filebeat/module.d/fortinet.yml is available. Should I make the changes in this file according to the documentation?

Yes, you should make any changes that fit your use case, then validate that Filebeat has properly loaded the module by looking at the Filebeat logs. Once you have that complete, if you're still having issues, I'd recommend following steps 1, 2, and 3 from my earlier post for troubleshooting:

- Use something like `tcpdump` to listen on the interface/port that should be receiving the syslog messages and see if packets are actually being received from the Fortinet firewall.

- Make sure your protocols match: if your firewall is sending via `udp`, make sure you're listening on `udp`.

- Make sure you're actually listening on the correct port and that it is properly exposed.

I'm not sure where to run tcpdump. I'm using the UDP protocol for the syslog server. Below is my fortinet module configuration.

# Module: fortinet
# Docs: https://www.elastic.co/guide/en/beats/filebeat/master/filebeat-module-fortinet.html

- module: fortinet
  firewall:
    enabled: true

    # Set which input to use between tcp, udp (default) or file.
    var.input: udp

    # The interface to listen to syslog traffic. Defaults to
    # localhost. Set to 0.0.0.0 to bind to all available interfaces.
    var.syslog_host: "firewall ISP IP"

    # The port to listen for syslog traffic. Defaults to 9004.
    var.syslog_port: 514

    # Set internal interfaces. used to override parsed network.direction
    # based on a tagged interface. Both internal and external interfaces must be
    # set to leverage this functionality.
    var.internal_interfaces: [ "LAN" ]

    # Set external interfaces. used to override parsed network.direction
    # based on a tagged interface. Both internal and external interfaces must be
    # set to leverage this functionality.
    var.external_interfaces: [ "WAN" ]

  clientendpoint:
    enabled: true

    # Set which input to use between udp (default), tcp or file.
    var.input: udp
    var.syslog_host: "firewall ISP IP"
    var.syslog_port: 514

    # Set paths for the log files when file input is used.
    var.paths: /var/log/filebeat/clientendpoint.log

    # Toggle output of non-ECS fields (default true).
    # var.rsa_fields: true

    # Set custom timezone offset.
    # "local" (default) for system timezone.
    "+5:30" for GMT+5:30
    var.tz_offset: GMT+5:30

  fortimail:
    enabled: true

    # Set which input to use between udp (default), tcp or file.
    var.input: udp
    var.syslog_host: "firewall ISP IP"
    var.syslog_port: 514

    # Set paths for the log files when file input is used.
    var.paths: /var/log/filebeat/fortimail.log

    # Toggle output of non-ECS fields (default true).
    # var.rsa_fields: true

    # Set custom timezone offset.
    # "local" (default) for system timezone.
    "+5:30" for GMT+5:30
    var.tz_offset: GMT+5:30

  fortimanager:
    enabled: true

    # Set which input to use between udp (default), tcp or file.
    var.input: udp
    var.syslog_host: "firewall ISP IP"
    var.syslog_port: 514

    # Set paths for the log files when file input is used.
    var.paths: /var/log/filebeat/fortimanager.log

    # Toggle output of non-ECS fields (default true).
    # var.rsa_fields: true

    # Set custom timezone offset.
    # "local" (default) for system timezone.
    "+5:30" for GMT+5:30
    var.tz_offset: GMT+5:30

I think your issue is:

var.syslog_host: "firewall ISP IP"

Instead of "firewall ISP IP", you should use the IP of the host that is running Filebeat or, as mentioned in the comment, use 0.0.0.0 to listen on all available interfaces.
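The reasoning behind that suggestion is that `var.syslog_host` is the local address the listener binds to, not the address of the sender, and a process can only bind to an address that is assigned to the machine it runs on. A small Python sketch of that rule (the helper name `can_bind_udp` is hypothetical, and `192.0.2.1` is just a documentation-range address assumed not to be configured on the host):

```python
import socket


def can_bind_udp(host, port=0):
    """Return True if a UDP socket can be bound to host:port on this machine.

    port=0 lets the OS pick any free port, so only the address is tested.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        sock.bind((host, port))
        return True
    except OSError:
        return False
    finally:
        sock.close()


# Example use:
#   can_bind_udp("0.0.0.0")     # wildcard address, all local interfaces
#   can_bind_udp("192.0.2.1")   # an address this host does not own
```

Passing the actual port (for example 514) instead of 0 also reveals whether the port is already taken or needs elevated privileges.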

Hi Ben, thanks for your reply. I tried all the options that you mentioned, but nothing works. I tried with 0.0.0.0 as the IP address and with the ISP IP address. I did packet sniffing on the firewall to check that syslog sends data to the ELK server, and the firewall does send the data. I'm not sure how to proceed from here. Kindly help me with this.

Hi @randyvinoth,

So, I think you need to be a bit clearer on how things are set up and which troubleshooting steps you have taken.

Some follow-up questions.

1. Where is Filebeat installed? Is it installed on your ELK server?

2. If Filebeat is installed on your ELK server, you say that you did packet sniffing to validate that the firewall was sending logs to the ELK server.

2.1 Did you validate that it was sending to the correct port (514) and protocol (udp)?

2.2 Did you validate that the ELK server was actually receiving the packets with something like tcpdump? Example command: `tcpdump -i any udp and port 514`

3. If you validate that both 2.1 and 2.2 are working as intended, I'd then say configure Filebeat to listen on `0.0.0.0`, just to keep things simple. If both 2.1 and 2.2 are true, and Filebeat is listening on `0.0.0.0`:

3.1 What do the Filebeat logs show when you start it up?

3.1.1 Are there any `errors` or `warnings`?

3.1.2 Can you possibly provide the startup logs from Filebeat?

3.2 Maybe also enable debug logging to get even more information: Configure logging | Filebeat Reference [8.0] | Elastic
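One way to exercise the listener independently of the firewall is to send a hand-made syslog datagram to it and watch the Filebeat debug log for a reaction. The following Python sketch does that under a few assumptions: the function names, the hostname `test-fw`, and the priority value 134 (facility local0, severity info) are illustrative choices, and the message is only RFC 3164-shaped, not a real FortiGate log, so the module's parsing may reject or mis-parse it.

```python
import socket
from datetime import datetime


def build_syslog_line(message, hostname="test-fw", priority=134):
    """Build an RFC 3164-style line: <PRI>Mmm dd hh:mm:ss host message."""
    now = datetime.now()
    # RFC 3164 pads single-digit days with a space, not a zero.
    timestamp = f"{now:%b} {now.day:2d} {now:%H:%M:%S}"
    return f"<{priority}>{timestamp} {hostname} {message}"


def send_udp_syslog(host, port, message):
    """Send one syslog-style datagram; returns the number of bytes sent."""
    payload = build_syslog_line(message).encode("utf-8")
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        return sock.sendto(payload, (host, port))


# Example use, run on the ELK server itself:
#   send_udp_syslog("127.0.0.1", 514, "filebeat listener test")
```

Because UDP is connectionless, a successful send says nothing about delivery; the evidence has to come from the receiving side (tcpdump output or the Filebeat logs).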

The above questions should lead you down the correct path for troubleshooting this issue.

- Where is Filebeat installed? Is it installed on your ELK server?

Filebeat Installation Location in ubuntu: /usr/bin/filebeat /etc/filebeat /usr/share/filebeat

It is installed on the same server where ELK was installed.

2. If Filebeat is installed on your ELK server, you say that you did packet sniffing to validate that the Firewall was sending logs to the ELK server.

Yes, I did the packet sniffing on my firewall to check that syslog sends data to the ELK server.

2.1 Did you validate that it was sending to the correct port (514) and protocol (udp)?

Yes.

2.2 Did you validate that the ELK server was actually receiving the packets with something like tcpdump?

Yes. I did the packet sniffing on the ELK server using Wireshark with the filter udp.port == 514, and I could see the data being received on the UDP port.

3. If validate that both 2.1 and 2.2 are working as intended, I'd then say configure Filebeat to listen on 0.0.0.0, just to keep things simple. If both 2.1 and 2.2 are true, and Filebeat is listening on 0.0.0.0:

Yes, I configured the fortinet module in Filebeat, and I configured output.elasticsearch in the filebeat.yml file.

3.1 What do the Filebeat logs show when you start it up?

Below are the contents of the Filebeat log file from /var/log/filebeat:

{"log.level":"info","@timestamp":"2022-02-21T15:37:54.508+0530","log.origin":{"file.name":"instance/beat.go","file.line":679},"message":"Home path: [/usr/share/filebeat] Config path: [/etc/filebeat] Data path: [/var/lib/filebeat] Logs path: [/var/log/filebeat]","service.name":"filebeat","ecs.version":"1.6.0"}

{"log.level":"info","@timestamp":"2022-02-21T15:37:54.509+0530","log.origin":{"file.name":"instance/beat.go","file.line":687},"message":"Beat ID: e7ebe7ac-88da-4bdb-9fe7-d02e1ada5f7f","service.name":"filebeat","ecs.version":"1.6.0"}

{"log.level":"warn","@timestamp":"2022-02-21T15:37:57.511+0530","log.logger":"add_cloud_metadata","log.origin":{"file.name":"add_cloud_metadata/provider_aws_ec2.go","file.line":80},"message":"read token request for getting IMDSv2 token returns empty: Put \"http://169.254.169.254/latest/api/token\": context deadline exceeded (Client.Timeout exceeded while awaiting headers). No token in the metadata request will be used.","service.name":"filebeat","ecs.version":"1.6.0"}

3.1.1 Are there any errors or warnings ?

How do I check for errors and warnings?

3.2 Maybe also enable debug logging to get even more information:

Below is the content of my filebeat.yml file. I configured the logging section in it.

###################### Filebeat Configuration Example #########################

# This file is an example configuration file highlighting only the most common
# options. The filebeat.reference.yml file from the same directory contains all the
# supported options with more comments. You can use it as a reference.
#
# You can find the full configuration reference here:
# https://www.elastic.co/guide/en/beats/filebeat/index.html

# For more available modules and options, please see the filebeat.reference.yml sample
# configuration file.

# ============================== Filebeat inputs ===============================

filebeat.inputs:

# Each - is an input. Most options can be set at the input level, so
# you can use different inputs for various configurations.
# Below are the input specific configurations.

# filestream is an input for collecting log messages from files.
- type: filestream

  # Change to true to enable this input configuration.
  enabled: false

  # Paths that should be crawled and fetched. Glob based paths.
  paths:
    - /var/log/*.log
    #- c:\programdata\elasticsearch\logs\*

  # Exclude lines. A list of regular expressions to match. It drops the lines that are
  # matching any regular expression from the list.
  #exclude_lines: ['^DBG']

  # Include lines. A list of regular expressions to match. It exports the lines that are
  # matching any regular expression from the list.
  #include_lines: ['^ERR', '^WARN']

  # Exclude files. A list of regular expressions to match. Filebeat drops the files that
  # are matching any regular expression from the list. By default, no files are dropped.
  #prospector.scanner.exclude_files: ['.gz$']

  # Optional additional fields. These fields can be freely picked
  # to add additional information to the crawled log files for filtering
  #fields:
  #  level: debug
  #  review: 1

# ============================== Filebeat modules ==============================

filebeat.config.modules:
  # Glob pattern for configuration loading
  path: ${path.config}/modules.d/*.yml

  # Set to true to enable config reloading
  reload.enabled: false

  # Period on which files under path should be checked for changes
  #reload.period: 10s

# ======================= Elasticsearch template setting =======================

setup.template.settings:
  index.number_of_shards: 1
  #index.codec: best_compression
  #_source.enabled: false

# ================================== General ===================================

# The name of the shipper that publishes the network data. It can be used to group
# all the transactions sent by a single shipper in the web interface.
#name:

# The tags of the shipper are included in their own field with each
# transaction published.
#tags: ["service-X", "web-tier"]

# Optional fields that you can specify to add additional information to the
# output.
#fields:
#  env: staging

# ================================= Dashboards =================================
# These settings control loading the sample dashboards to the Kibana index. Loading
# the dashboards is disabled by default and can be enabled either by setting the
# options here or by using the `setup` command.
#setup.dashboards.enabled: false

# The URL from where to download the dashboards archive. By default this URL
# has a value which is computed based on the Beat name and version. For released
# versions, this URL points to the dashboard archive on the artifacts.elastic.co
# website.
#setup.dashboards.url:

# =================================== Kibana ===================================

# Starting with Beats version 6.0.0, the dashboards are loaded via the Kibana API.
# This requires a Kibana endpoint configuration.
setup.kibana:

  # Kibana Host
  # Scheme and port can be left out and will be set to the default (http and 5601)
  # In case you specify and additional path, the scheme is required: http://localhost:5601/path
  # IPv6 addresses should always be defined as: https://[2001:db8::1]:5601
  host: "http://KIBANA IP ADDRESS:5601"

  # Kibana Space ID
  # ID of the Kibana Space into which the dashboards should be loaded. By default,
  # the Default Space will be used.
  #space.id:

# =============================== Elastic Cloud ================================

# These settings simplify using Filebeat with the Elastic Cloud (https://cloud.elastic.co/).

# The cloud.id setting overwrites the `output.elasticsearch.hosts` and
# `setup.kibana.host` options.
# You can find the `cloud.id` in the Elastic Cloud web UI.
#cloud.id:

# The cloud.auth setting overwrites the `output.elasticsearch.username` and
# `output.elasticsearch.password` settings. The format is `<user>:<pass>`.
#cloud.auth:

# ================================== Outputs ===================================

# Configure what output to use when sending the data collected by the beat.

# ---------------------------- Elasticsearch Output ----------------------------
output.elasticsearch:
  # Array of hosts to connect to.
  hosts: ["ELASTICSEARCH IP ADDRESS:9200"]

  # Protocol - either `http` (default) or `https`.
  #protocol: "https"

  # Authentication credentials - either API key or username/password.
  #api_key: "id:api_key"
  username: "elastic"
  password: "ELASTIC LOGIN PASSWORD"

# ------------------------------ Logstash Output -------------------------------
#output.logstash:
  # The Logstash hosts
  # hosts: ["LOGSTASH IP ADDRESS:5044"]

  # Optional SSL. By default is off.
  # List of root certificates for HTTPS server verifications
  #ssl.certificate_authorities: ["/etc/pki/root/ca.pem"]

  # Certificate for SSL client authentication
  #ssl.certificate: "/etc/pki/client/cert.pem"

  # Client Certificate Key
  #ssl.key: "/etc/pki/client/cert.key"

# ================================= Processors =================================
processors:
  - add_host_metadata:
      when.not.contains.tags: forwarded
  - add_cloud_metadata: ~
  - add_docker_metadata: ~
  - add_kubernetes_metadata: ~

# ================================== Logging ===================================

# Sets log level. The default log level is info.
# Available log levels are: error, warning, info, debug
#logging.level: debug

# At debug level, you can selectively enable logging only for some components.
# To enable all selectors use ["*"]. Examples of other selectors are "beat",
# "publisher", "service".
#logging.selectors: ["*"]

logging.level: debug
logging.to_syslog: true
logging.to_files: true
logging.files:
  path: /var/log/filebeat
  name: filebeat
  keepfiles: 1024
  permissions: 0644

# ============================= X-Pack Monitoring ==============================
# Filebeat can export internal metrics to a central Elasticsearch monitoring
# cluster. This requires xpack monitoring to be enabled in Elasticsearch. The
# reporting is disabled by default.

# Set to true to enable the monitoring reporter.
#monitoring.enabled: false

# Sets the UUID of the Elasticsearch cluster under which monitoring data for this
# Filebeat instance will appear in the Stack Monitoring UI. If output.elasticsearch
# is enabled, the UUID is derived from the Elasticsearch cluster referenced by output.elasticsearch.
#monitoring.cluster_uuid:

# Uncomment to send the metrics to Elasticsearch. Most settings from the
# Elasticsearch output are accepted here as well.
# Note that the settings should point to your Elasticsearch *monitoring* cluster.
# Any setting that is not set is automatically inherited from the Elasticsearch
# output configuration, so if you have the Elasticsearch output configured such
# that it is pointing to your Elasticsearch monitoring cluster, you can simply
# uncomment the following line.
#monitoring.elasticsearch:

# ============================== Instrumentation ===============================

# Instrumentation support for the filebeat.
#instrumentation:
    # Set to true to enable instrumentation of filebeat.
    #enabled: false

    # Environment in which filebeat is running on (eg: staging, production, etc.)
    #environment: ""

    # APM Server hosts to report instrumentation results to.
    #hosts:
    #  - http://localhost:8200

    # API Key for the APM Server(s).
    # If api_key is set then secret_token will be ignored.
    #api_key:

    # Secret token for the APM Server(s).
    #secret_token:

# ================================= Migration ==================================

# This allows to enable 6.7 migration aliases
#migration.6_to_7.enabled: true

As I couldn't add all the files to the reply, kindly refer to the link below for the answers to your questions.

3.1.1 Are there any errors or warnings?

How to check the error and warnings

Warnings and errors can be found in the Filebeat logs. You can use something like grep or Ctrl+F to find the words warn or error.
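Since the Filebeat log lines shown in this thread are JSON objects with a `log.level` field, the same search can also be done structurally rather than by keyword. A minimal Python sketch, assuming one JSON object per line as in the excerpts above (the function name `find_problem_entries` is made up for illustration, and the log file name is left to the reader because it varies by version):

```python
import json


def find_problem_entries(lines, levels=("warn", "error")):
    """Yield (level, timestamp, message) for JSON log lines at the given levels."""
    for line in lines:
        line = line.strip()
        if not line:
            continue
        try:
            entry = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip anything that is not a complete JSON object
        if not isinstance(entry, dict):
            continue
        level = entry.get("log.level")
        if level in levels:
            yield level, entry.get("@timestamp"), entry.get("message")


# Example use (replace the path with the actual log file in /var/log/filebeat):
#   with open("/var/log/filebeat/<log file>") as handle:
#       for level, timestamp, message in find_problem_entries(handle):
#           print(level, timestamp, message)
```

Filtering on the field avoids false hits on info-level lines that merely contain the word "error" somewhere in the message.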

Two more clarifying questions,

- Is the ELK server a Windows server or a Linux server?

- Have you validated that the path for:

filebeat.config.modules:

path: ${path.config}/modules.d/*.yml

Properly goes to the path where you store the fortinet module file?

You should be able to tell, as there should be an entry in the Filebeat logs saying that the fortinet module was loaded.
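The glob in `filebeat.config.modules` only matches files whose names end in `.yml`, and Filebeat ships modules that are not enabled with a `.yml.disabled` suffix, so listing the directory already tells a lot. A small Python sketch of that check (the function name `list_module_files` is hypothetical; the directory to pass in is an assumption, typically `modules.d` under the config path reported in the startup log):

```python
from pathlib import Path


def list_module_files(modules_dir):
    """Split module config files into enabled (*.yml) and disabled (*.yml.disabled)."""
    directory = Path(modules_dir)
    enabled = sorted(p.name for p in directory.glob("*.yml"))
    disabled = sorted(p.name for p in directory.glob("*.yml.disabled"))
    return enabled, disabled


# Example use:
#   enabled, disabled = list_module_files("/etc/filebeat/modules.d")
#   print("picked up by the glob:", enabled)
```

The `filebeat modules list` command reports the same enabled/disabled split from Filebeat's own point of view.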

- Is the ELK server a Windows server or a Linux server?

It is hosted on an Ubuntu Desktop machine.

- Have you validated that the path for:

The path is /etc/filebeat/module.d/fortinet.yml

Not sure how to do the following.

Properly goes to the path where you store the fortinet module file?

You should be able to tell, as there should be an entry in the Filebeat logs saying that the fortinet module was loaded.

The Filebeat logs should contain this information. Can you restart the Filebeat service, wait a few minutes, and then copy and paste the contents of the Filebeat logs into something like Pastebin or a GitHub Gist so that the entire startup log can be viewed?

Hi Ben,

I attached the Git link for the Filebeat logs.

I also found a 401 error when Logstash connects to Elasticsearch.

Looking at your logs, the last line:

{"log.level":"error","@timestamp":"2022-02-22T12:32:15.205+0530","log.origin":{"file.name":"instance/beat.go","file.line":1025},"message":"Exiting: Failed to start crawler: creating module reloader failed: could not create module registry for filesets: module system is configured but has no enabled filesets","service.name":"filebeat","ecs.version":"1.6.0"}

module system is configured but has no enabled filesets

This seems to point to you having no enabled modules under the modules.d folder.

Can you provide the actual file name that your Fortigate module file is called, and the full path for it?

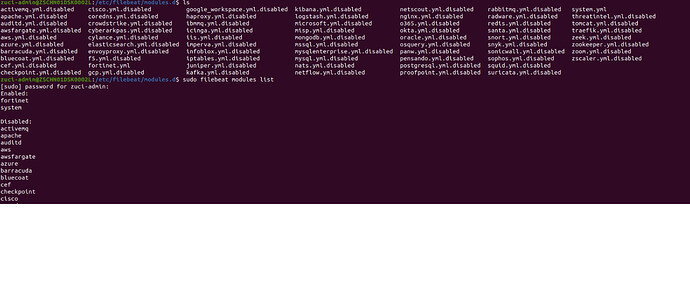

I've already enabled the fortinet module in Filebeat. The paths of the fortinet module and Filebeat can be seen in the attached terminal screenshot.

So, looking at the error line a bit further:

module system is configured but has no enabled filesets

Did you by chance edit the system.yml file, and remove all of the filesets? The issue might not be directly related to the fortinet module, but instead looks like the system module is causing the underlying issue.

But I've done that previously. It's not working.