Hi Warkolm,

I read the documents you provided, thank you.

So, I installed the logstash-input-exec plugin and created a bash script that produces the following output when it is executed:

    user=sysm02 limit=0 used=127.49
    user=sysm01 limit=0 used=1315.69
    user=sysm03 limit=0 used=17.42
    user=sysm04 limit=0 used=20.53
    user=sysm05 limit=0 used=16.50
    user=sp1 limit=307200.00 used=151069.87
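Each line of that output is a set of whitespace-separated key=value pairs. As a minimal sketch of how one line breaks down into fields, here is a small Python function; the float conversion of `limit` and `used` is my assumption about how the values should be typed, and in Logstash itself this splitting would be the job of a filter such as `kv`, not this code. The name `parse_quota_line` is hypothetical.

```python
# Minimal sketch: split lines of the icheckquota output into fields.
# Assumption: pairs are separated by whitespace and each pair is key=value,
# as in the sample output; limit and used are treated as floats.


def parse_quota_line(line: str) -> dict:
    """Turn 'user=sp1 limit=307200.00 used=151069.87' into a dict."""
    record = {}
    for pair in line.split():
        key, _, value = pair.partition("=")
        record[key] = value
    # Convert the numeric fields; 'user' stays a string.
    for key in ("limit", "used"):
        if key in record:
            record[key] = float(record[key])
    return record


sample = """user=sysm02 limit=0 used=127.49
user=sysm01 limit=0 used=1315.69
user=sysm03 limit=0 used=17.42
user=sysm04 limit=0 used=20.53
user=sysm05 limit=0 used=16.50
user=sp1 limit=307200.00 used=151069.87"""

# Skip blank lines, in case the script prints empty lines between records.
records = [parse_quota_line(line) for line in sample.splitlines() if line.strip()]
for record in records:
    print(record)
```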

Then I added an "exec" input to my existing Logstash config file (please take a look at the config below):

    input {
      rabbitmq {
        host => "localhost"
        queue => "audit_messages"
      }
      exec {
        command => "ssh irs02 icheckquota"
        interval => 30
      }
    }

    filter {
      if "_jsonparsefailure" in [tags] {
        mutate {
          gsub => [ "message", "[\\]","" ]
          gsub => [ "message", ".*__BEGIN_JSON__", ""]
          gsub => [ "message", "__END_JSON__", ""]
        }
        mutate { remove_tag => [ "tags", "_jsonparsefailure" ] }
        json { source => "message" }
      }

      # Parse the JSON message
      json {
        source => "message"
        remove_field => ["message"]
      }

      # Replace @timestamp with the timestamp stored in time_stamp
      date {
        match => [ "time_stamp", "UNIX_MS" ]
      }

      # Convert select fields to integer
      mutate {
        convert => { "int" => "integer" }
        convert => { "int__2" => "integer" }
        convert => { "int__3" => "integer" }
        convert => { "file_size" => "integer" }
      }
    }

    output {
      # Write the output to elastic search under the irods_audit index.
      elasticsearch {
        hosts => ["localhost:9200"]
        index => "irods_audit"
      }
    }

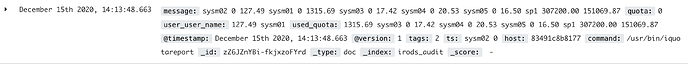

I restarted Logstash, but it stopped working for both inputs and logged this warning:

    [2020-12-15T10:19:31,706][WARN ][logstash.filters.json ] Parsed JSON object/hash requires a target configuration option {:source=>"message", :raw=>""}
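As far as I understand the warning, a json filter without a `target` can only merge the parsed value into the event when that value is a JSON object, and in my config the json filter runs on events from both inputs, since there is no conditional around it. The Python sketch below is a simplified model of that rule, not Logstash's actual code. The function name `apply_json_filter` and the sample audit message are hypothetical, and treating an empty message as "parses to nothing" is my assumption based on the `:raw=>""` in the log line.

```python
import json
from typing import Optional

# Simplified model of the json filter's behaviour without a `target`
# (an illustration only, NOT Logstash's actual code):
#  - text that is not valid JSON -> event tagged "_jsonparsefailure"
#  - a valid JSON object         -> its keys are merged into the event
#  - anything else (empty input, arrays, numbers) -> cannot be merged,
#    so the warning is returned instead

WARNING = "Parsed JSON object/hash requires a target configuration option"


def apply_json_filter(event: dict, source: str = "message") -> Optional[str]:
    """Mutate `event` in place; return the warning text if one would be logged."""
    raw = event.get(source, "")
    if raw.strip() == "":
        parsed = None  # assumption: empty input parses to "nothing", not an object
    else:
        try:
            parsed = json.loads(raw)
        except json.JSONDecodeError:
            event.setdefault("tags", []).append("_jsonparsefailure")
            return None
    if isinstance(parsed, dict):
        event.update(parsed)
        return None
    return WARNING


audit = {"message": '{"file_size": "42"}'}  # hypothetical audit message
quota = {"message": "user=sp1 limit=307200.00 used=151069.87"}
empty = {"message": ""}

print(apply_json_filter(audit), audit.get("file_size"))
print(apply_json_filter(quota), quota.get("tags"))
print(apply_json_filter(empty))
```

In this model, the key=value output from the exec input is tagged `_jsonparsefailure` rather than merged, while an empty message produces the warning.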

Could you please help me fix this error?

Thank you in advance,

Mauro