I am using ELK and the Twitter API to collect data from Twitter. It had been running for more than a week and then suddenly stopped working. I am not sure whether this is due to the "limits of total fields in index has been exceeded" error or to something else. That error message had shown up earlier in the week, but the process kept going until now.

I don't care why it stopped. I just want to restart it and keep putting the data into the existing index, instead of starting a new index.

Is there a way to do that?

FYI we’ve renamed ELK to the Elastic Stack, otherwise Beats and APM feel left out!

What OS are you on? How did you install things?

Ok, I will use Elastic Stack from now on.

Thanks for getting back to me on a holiday break.

I am using a MacPro laptop. I installed everything from the terminal, with Java 1.8.0, on the local computer, and I am running it as a single node on localhost. I am using the Twitter API to acquire data from Twitter. It had been working for more than 10 days, and this morning, all of a sudden, no new hits are coming in to Elasticsearch.

What do the logs show then?

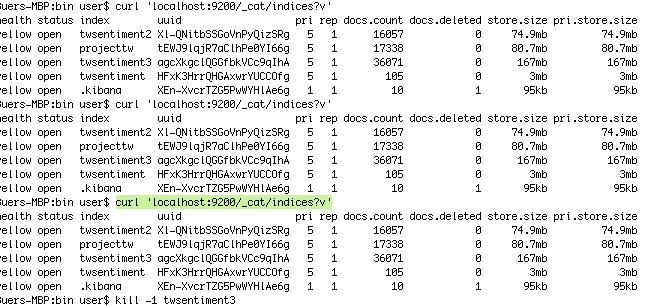

It shows nothing. It's just that when I run the command "curl 'localhost:9200/_cat/indices?v'", the index does not show any increase in the number of hits.

Here is a screenshot.
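One way to confirm that the count has really stopped growing is to pull out just the `docs.count` column for the index and compare two readings taken a minute or so apart. The following is a minimal sketch, assuming the `_cat/indices?v` output has its usual header row; the function name `docs_count` and the index name `twitter` are illustrative, not taken from this thread.

```shell
# Print the docs.count value for one index, reading `_cat/indices?v` output on stdin.
# The columns are located via the header row, so their order does not matter.
# Usage: curl -s 'localhost:9200/_cat/indices?v' | docs_count INDEX_NAME
docs_count() {
  awk -v idx="$1" '
    NR == 1 {
      # Remember which columns hold the index name and the document count.
      for (i = 1; i <= NF; i++) {
        if ($i == "index") ic = i
        if ($i == "docs.count") dc = i
      }
      next
    }
    $ic == idx { print $dc }
  '
}
```

For example, `curl -s 'localhost:9200/_cat/indices?v' | docs_count twitter` run twice, a minute apart, should print two different numbers while data is still flowing.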

Please don't post pictures of text. They are difficult to read, and some people may not even be able to see them.

What version are you on?

Sorry, I didn't know that pictures of text are not a good way to present things.

I am on Elastic Stack 6.0. All components are the most up-to-date versions.

You will likely need to enable debug logs to see what is happening.

However, I have noticed this same problem with the Twitter input; it's usually due to a network interruption.

I think you are right. I am doing this from home, and I know that my connection is interrupted once in a while. I am not dealing with critical log monitoring or anything like that, but I do want to accumulate enough Twitter hits in one index for my final analysis. So I am wondering: is there a way to either restart the instance under the same index name, or combine the separate indices into one single index for the analysis?
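For the debug logs, Logstash accepts a `--log.level` option on the command line. Here is a minimal sketch of starting it that way, assuming Logstash is unpacked in its own directory with the usual `bin/logstash` launcher; the function name `run_logstash_debug` is only illustrative.

```shell
# Start Logstash in the foreground with debug-level logging.
# Usage: run_logstash_debug LOGSTASH_HOME CONFIG_FILE
run_logstash_debug() {
  "$1/bin/logstash" -f "$2" --log.level debug
}
```

It would be called as, for example, `run_logstash_debug ~/logstash-6.0.0 indexname.conf`, where the home directory is a guess at the local layout. Debug output is verbose, so it is probably worth switching back once the cause is found.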

It'll do this out of the box. Why are you changing the index?
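If separate indices already exist from earlier restarts, the Reindex API can copy them into a single index. The following is a rough sketch of building the request body; the helper name `reindex_body` and the index names in the usage line are made up for illustration.

```shell
# Build a _reindex request body that copies every source index into one destination.
# Usage: reindex_body DEST SOURCE [SOURCE...]
reindex_body() {
  dest="$1"
  shift
  sources=""
  for s in "$@"; do
    # Append each source as a quoted JSON string, comma-separated.
    sources="${sources:+$sources, }\"$s\""
  done
  printf '{"source": {"index": [%s]}, "dest": {"index": "%s"}}\n' "$sources" "$dest"
}
```

The body can then be posted with something like `curl -XPOST 'localhost:9200/_reindex' -H 'Content-Type: application/json' -d "$(reindex_body twitter twitter2)"`. The helper does no JSON escaping, so it assumes plain index names.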

So you are saying I can just restart Logstash using the same config file? I probably need to Ctrl + C out of everything and restart the computer to start everything again, right? The last time I tried to restart Logstash, I ran into a problem: the system said "there's an instance already running with the same config". That's why I had to give it a different index name to get the process going again.

Yes.

No.

Sounds like you didn't properly exit things then.

Should I just leave Elasticsearch and Kibana running, "Ctrl + C" only Logstash, and then restart Logstash? Do I need to go back to the /logstash-6.0.0/bin folder to do the "Ctrl + C" and then restart Logstash with "./logstash -f indexname.conf"?

This is what I got after doing the things I mentioned above:

"[2017-12-25T23:32:34,817][FATAL][logstash.runner ] Logstash could not be started because there is already another instance using the configured data directory. If you wish to run multiple instances, you must change the "path.data" setting."

But I don't want to run multiple instances. I just want Logstash to start again and keep putting data into the original index from where it left off, instead of starting a new index.

With Logstash shut down, delete the left-over lock file from the data directory.
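As a sketch of that cleanup: this assumes the lock file is named `.lock` and sits directly in the directory that `path.data` points to (by default the `data` folder under the Logstash home). Both the file name and the helper name `remove_stale_lock` are assumptions, so check what is actually in the directory first. The function does not check whether Logstash is still running, so confirm it has been stopped before calling it.

```shell
# Remove a left-over lock file from a Logstash data directory.
# Assumes the lock file is called ".lock"; run this only after Logstash has been stopped.
# Usage: remove_stale_lock DATA_DIR
remove_stale_lock() {
  lock_file="$1/.lock"
  if [ -e "$lock_file" ]; then
    rm -f -- "$lock_file" && echo "removed $lock_file"
  else
    echo "no lock file at $lock_file"
  fi
}
```

With the default layout, the argument would be the `data` folder next to `bin` in the Logstash directory. After that, starting Logstash with the same config file should continue writing to the same index.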
