TL;DR: Removing the Logstash CIDR filter plugin solved the issue.

We've solved the issue (for now, at least).

Here is a brief summary of the issue just in case someone runs into something similar.

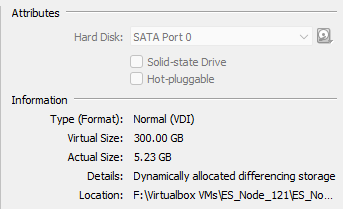

The initial setup (all VMs spread across multiple machines):

Filebeat -- Logstash -- ES (3-node cluster)

Logstash filters were used to enrich logs based on the source IP and other details.

The trouble was that the Kibana interface would become unresponsive, and there were visible gaps in the ingested logs.

Assuming this was because the ES stack could not keep up with the log volume, we added Redis to the mix as a buffer. The revised setup:

Filebeat -- Redis -- Logstash -- ES (3-node cluster)

After Redis was added, there were no more gaps in the logs. However, over time the Redis queue would fill up until it had used all of its RAM.

Clearly, Redis alone was not enough, so we decided to move the ES cluster from VMs to physical machines. We moved 2 nodes to physical hardware, and one remained a VM. (We learned a lesson here about adding new nodes to the cluster while retaining at least 2 of the existing nodes.)

Things worked fine for a while, but then the Redis queue started filling up again. At this point we looked at the other components in the stack and removed the Logstash filters one at a time to see whether each had any impact. It turned out the bottleneck was the CIDR filter plugin.
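The filter-by-filter elimination above can be sketched in Python as a simple ablation loop: measure the throughput of the full filter chain, then re-measure with each filter removed in turn. The filters here (`add_tag`, `slow_lookup`) are hypothetical stand-ins, not the actual Logstash filters, and timing Python functions only illustrates the method; in a real pipeline you would compare Logstash's own event rates.

```python
import time
from typing import Callable, Dict, List

Event = Dict[str, str]
Filter = Callable[[Event], Event]


def throughput(filters: List[Filter], events: List[Event]) -> float:
    """Events per second when every filter is applied to every event."""
    start = time.perf_counter()
    for event in events:
        e = dict(event)  # copy so each run starts from the same input
        for f in filters:
            e = f(e)
    elapsed = time.perf_counter() - start
    return len(events) / elapsed if elapsed > 0 else float("inf")


def ablate(named: Dict[str, Filter], events: List[Event]) -> Dict[str, float]:
    """Baseline throughput plus throughput with each filter removed in turn."""
    results = {"<all filters>": throughput(list(named.values()), events)}
    for name in named:
        rest = [f for n, f in named.items() if n != name]
        results[f"without {name}"] = throughput(rest, events)
    return results


# Hypothetical filters: one cheap, one deliberately expensive.
def add_tag(e: Event) -> Event:
    e["tag"] = "firewall"
    return e


def slow_lookup(e: Event) -> Event:
    e["score"] = str(sum(i * i for i in range(2000)))  # simulated heavy work
    return e


events = [{"src_ip": f"10.0.{i % 256}.{i % 200}"} for i in range(2000)]
results = ablate({"add_tag": add_tag, "slow_lookup": slow_lookup}, events)
for label, rate in results.items():
    print(f"{label:>22}: {rate:,.0f} events/s")
```

The filter whose removal produces the largest jump over the baseline is the prime suspect; here that is `without slow_lookup`.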

We were using the CIDR filter plugin to look up the subnet that each source IP belonged to, and then enriching the log with fields based on that subnet.
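A minimal sketch of that lookup in Python, using the standard `ipaddress` module. The subnet table and field names (`site`, `zone`) are hypothetical, since the real subnets were not given. The sketch checks the source IP against each subnet in turn, so the cost per event grows with the number of subnets. It illustrates the lookup itself and is not a description of the plugin's internals.

```python
import ipaddress
from typing import Dict, List, Tuple

# Hypothetical subnet -> enrichment table (the real one was not shared).
SUBNETS: List[Tuple[ipaddress.IPv4Network, Dict[str, str]]] = [
    (ipaddress.IPv4Network("10.1.0.0/16"), {"site": "dc1", "zone": "servers"}),
    (ipaddress.IPv4Network("10.2.0.0/16"), {"site": "dc2", "zone": "servers"}),
    (ipaddress.IPv4Network("192.168.10.0/24"), {"site": "office", "zone": "users"}),
]


def enrich_linear(event: Dict[str, str]) -> Dict[str, str]:
    """Add the fields of the first subnet that contains event['src_ip'].

    Linear scan: every event may be compared against every subnet.
    """
    addr = ipaddress.ip_address(event["src_ip"])
    for network, fields in SUBNETS:
        if addr in network:
            event.update(fields)
            break
    return event


print(enrich_linear({"src_ip": "10.2.33.7"}))
# {'src_ip': '10.2.33.7', 'site': 'dc2', 'zone': 'servers'}
```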

With the CIDR filter enabled, Logstash's maximum throughput hovered around 800 logs/s. Whenever the incoming log rate exceeded this figure, we either lost logs or, once Redis was deployed, the Redis queue started filling up.
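The arithmetic behind the filling queue is simple: whenever input exceeds Logstash's drain rate, the backlog grows by the difference every second. The sketch below uses the ~800 logs/s ceiling from above; the input rate (1,000 logs/s), average event size (1 KiB) and free Redis RAM (8 GiB) are hypothetical numbers, and Redis's own per-entry overhead is ignored.

```python
def backlog_growth(input_rate: float, drain_rate: float) -> float:
    """Events added to the queue per second (0 if the consumer keeps up)."""
    return max(0.0, input_rate - drain_rate)


def hours_until_full(input_rate: float, drain_rate: float,
                     avg_event_bytes: float, free_ram_bytes: float) -> float:
    """Rough time until the queue exhausts free RAM; inf if it never grows."""
    growth = backlog_growth(input_rate, drain_rate)
    if growth == 0:
        return float("inf")
    return free_ram_bytes / (growth * avg_event_bytes) / 3600


# ~800 logs/s drain rate from the post; the other figures are hypothetical.
print(f"{hours_until_full(1000, 800, 1024, 8 * 1024**3):.1f} hours")
# 11.7 hours
```

A buffer like Redis only absorbs bursts; if the sustained input rate stays above the drain rate, it merely delays the point at which memory runs out.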

Things are back to normal now that the CIDR filter plugin is no longer in use. We are working on finding an alternative.
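One possible direction for an alternative, sketched in Python under stated assumptions (this is not what we have deployed): if the subnets do not overlap, convert each one to an integer range once, sort the ranges, and find the matching subnet with a binary search via `bisect`, so each lookup costs O(log n) instead of a scan over every subnet. The table and field names are hypothetical, and overlapping subnets (which would need longest-prefix matching) are rejected rather than handled.

```python
import bisect
import ipaddress
from typing import Dict, List, Optional, Tuple


class SubnetIndex:
    """Binary-search lookup over non-overlapping IPv4 subnets."""

    def __init__(self, table: Dict[str, Dict[str, str]]):
        rows: List[Tuple[int, int, Dict[str, str]]] = []
        for cidr, fields in table.items():
            net = ipaddress.IPv4Network(cidr)
            rows.append((int(net.network_address), int(net.broadcast_address), fields))
        rows.sort(key=lambda r: r[0])
        # A search over start addresses finds only one candidate, so forbid overlaps.
        for (_, prev_end, _), (next_start, _, _) in zip(rows, rows[1:]):
            if next_start <= prev_end:
                raise ValueError("overlapping subnets are not supported by this sketch")
        self._starts = [r[0] for r in rows]
        self._rows = rows

    def lookup(self, ip: str) -> Optional[Dict[str, str]]:
        """Return the fields for the subnet containing ip, or None."""
        addr = int(ipaddress.IPv4Address(ip))
        i = bisect.bisect_right(self._starts, addr) - 1  # last start <= addr
        if i >= 0:
            _, end, fields = self._rows[i]
            if addr <= end:
                return fields
        return None


index = SubnetIndex({
    "10.1.0.0/16": {"site": "dc1", "zone": "servers"},
    "10.2.0.0/16": {"site": "dc2", "zone": "servers"},
    "192.168.10.0/24": {"site": "office", "zone": "users"},
})
print(index.lookup("192.168.10.42"))
# {'site': 'office', 'zone': 'users'}
```

The index is built once at startup, so the per-event work is a single integer conversion and one binary search.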

Thanks everyone!