Environment

| Item | Version / Detail |

|---|---|

| Security Onion | 2.4.160 |

| Host role | so-manager (10.10.0.13) Standalone Network install on Rocky Linux 9 |

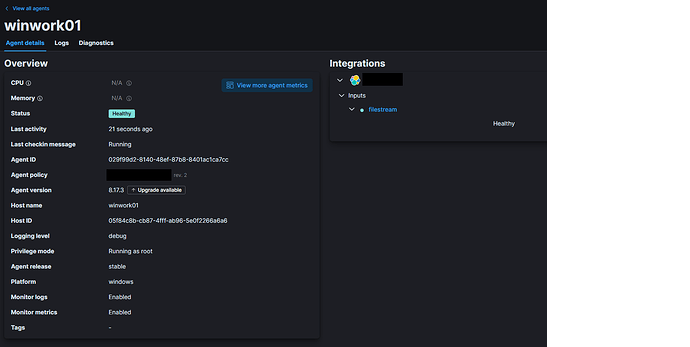

| Elastic Agent endpoint | Windows host winwork01 (10.10.0.4) |

| Beats/Elastic-Agent port | 5055/TCP (default from install) |

Issue Summary

I've developed a custom integration that collects logs that should be picked up by Elastic Agent and forwarded. I see the events being published from my Windows host, but they seem to be getting stuck inside Logstash. Even after trying to disable client-certificate verification and regenerating the CA, Logstash does not seem to forward events to my Fleet Server/Elasticsearch/Kibana. I get the following error inside the Logstash Docker container:

[2025-07-09T14:34:51,454][INFO ][org.logstash.beats.BeatsHandler] [local: 172.17.1.29:5055, remote: 10.10.0.4:55759] Handling exception: io.netty.handler.codec.DecoderException: javax.net.ssl.SSLHandshakeException: Received fatal alert: bad_certificate (caused by: javax.net.ssl.SSLHandshakeException: Received fatal alert: bad_certificate)

[2025-07-09T14:34:51,455][WARN ][io.netty.channel.DefaultChannelPipeline] An exceptionCaught() event was fired, and it reached at the tail of the pipeline. It usually means the last handler in the pipeline did not handle the exception.

io.netty.handler.codec.DecoderException: javax.net.ssl.SSLHandshakeException: Received fatal alert: bad_certificate

at io.netty.handler.codec.ByteToMessageDecoder.callDecode(ByteToMessageDecoder.java:500) ~[netty-codec-4.1.118.Final.jar:4.1.118.Final]

at io.netty.handler.codec.ByteToMessageDecoder.channelRead(ByteToMessageDecoder.java:290) ~[netty-codec-4.1.118.Final.jar:4.1.118.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:444) ~[netty-transport-4.1.118.Final.jar:4.1.118.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:420) ~[netty-transport-4.1.118.Final.jar:4.1.118.Final]

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:412) ~[netty-transport-4.1.118.Final.jar:4.1.118.Final]

at io.netty.channel.DefaultChannelPipeline$HeadContext.channelRead(DefaultChannelPipeline.java:1357) ~[netty-transport-4.1.118.Final.jar:4.1.118.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:440) ~[netty-transport-4.1.118.Final.jar:4.1.118.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:420) ~[netty-transport-4.1.118.Final.jar:4.1.118.Final]

at io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:868) ~[netty-transport-4.1.118.Final.jar:4.1.118.Final]

at io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:166) ~[netty-transport-4.1.118.Final.jar:4.1.118.Final]

at io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:796) ~[netty-transport-4.1.118.Final.jar:4.1.118.Final]

at io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:732) ~[netty-transport-4.1.118.Final.jar:4.1.118.Final]

at io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:658) ~[netty-transport-4.1.118.Final.jar:4.1.118.Final]

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:562) ~[netty-transport-4.1.118.Final.jar:4.1.118.Final]

at io.netty.util.concurrent.SingleThreadEventExecutor$4.run(SingleThreadEventExecutor.java:998) ~[netty-common-4.1.118.Final.jar:4.1.118.Final]

at io.netty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74) ~[netty-common-4.1.118.Final.jar:4.1.118.Final]

at java.lang.Thread.run(Thread.java:1583) ~[?:?]

Caused by: javax.net.ssl.SSLHandshakeException: Received fatal alert: bad_certificate

at sun.security.ssl.Alert.createSSLException(Alert.java:130) ~[?:?]

at sun.security.ssl.Alert.createSSLException(Alert.java:117) ~[?:?]

at sun.security.ssl.TransportContext.fatal(TransportContext.java:370) ~[?:?]

at sun.security.ssl.Alert$AlertConsumer.consume(Alert.java:287) ~[?:?]

at sun.security.ssl.TransportContext.dispatch(TransportContext.java:209) ~[?:?]

at sun.security.ssl.SSLTransport.decode(SSLTransport.java:172) ~[?:?]

at sun.security.ssl.SSLEngineImpl.decode(SSLEngineImpl.java:736) ~[?:?]

at sun.security.ssl.SSLEngineImpl.readRecord(SSLEngineImpl.java:691) ~[?:?]

at sun.security.ssl.SSLEngineImpl.unwrap(SSLEngineImpl.java:506) ~[?:?]

at sun.security.ssl.SSLEngineImpl.unwrap(SSLEngineImpl.java:482) ~[?:?]

at javax.net.ssl.SSLEngine.unwrap(SSLEngine.java:679) ~[?:?]

at io.netty.handler.ssl.SslHandler$SslEngineType$3.unwrap(SslHandler.java:309) ~[netty-handler-4.1.118.Final.jar:4.1.118.Final]

at io.netty.handler.ssl.SslHandler.unwrap(SslHandler.java:1485) ~[netty-handler-4.1.118.Final.jar:4.1.118.Final]

at io.netty.handler.ssl.SslHandler.decodeJdkCompatible(SslHandler.java:1378) ~[netty-handler-4.1.118.Final.jar:4.1.118.Final]

at io.netty.handler.ssl.SslHandler.decode(SslHandler.java:1427) ~[netty-handler-4.1.118.Final.jar:4.1.118.Final]

at io.netty.handler.codec.ByteToMessageDecoder.decodeRemovalReentryProtection(ByteToMessageDecoder.java:530) ~[netty-codec-4.1.118.Final.jar:4.1.118.Final]

at io.netty.handler.codec.ByteToMessageDecoder.callDecode(ByteToMessageDecoder.java:469) ~[netty-codec-4.1.118.Final.jar:4.1.118.Final]

... 16 more

This prevents any data from reaching Elasticsearch/Kibana.
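To make the log above easier to read, here is a minimal Python sketch that pulls the two endpoints and the TLS alert name out of a `BeatsHandler` line. The function name `parse_handshake_failure` and the regular expression are illustrative assumptions, not part of Logstash or Security Onion. It relies on the JSSE wording: "Received fatal alert" means the alert was sent by the remote peer (here the agent at 10.10.0.4) and received by Logstash, which suggests the agent side is rejecting the certificate it was shown rather than Logstash rejecting the agent.

```python
import re

# Matches the "[local: ip:port, remote: ip:port]" prefix and the alert name
# in a BeatsHandler log line. The pattern is an assumption based on the
# log lines quoted in this post.
LINE_RE = re.compile(
    r"\[local: (?P<local>[\d.]+:\d+), remote: (?P<remote>[\d.]+:\d+)\]"
    r".*?Received fatal alert: (?P<alert>\w+)"
)


def parse_handshake_failure(line):
    """Return endpoints and alert name, or None if the line does not match."""
    match = LINE_RE.search(line)
    if match is None:
        return None
    return {
        "local": match.group("local"),
        "remote": match.group("remote"),
        "alert": match.group("alert"),
        # "Received" in the JSSE message means the peer sent the alert.
        "sent_by": "remote",
    }


if __name__ == "__main__":
    sample = (
        "[2025-07-09T14:34:51,454][INFO ][org.logstash.beats.BeatsHandler] "
        "[local: 172.17.1.29:5055, remote: 10.10.0.4:55759] Handling exception: "
        "io.netty.handler.codec.DecoderException: "
        "javax.net.ssl.SSLHandshakeException: Received fatal alert: bad_certificate"
    )
    print(parse_handshake_failure(sample))
```

If that reading is right, relaxing client-certificate verification on the Logstash input would not change the outcome, because the rejection happens on the agent side of the handshake.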

Things I've Tried...

- Created custom Beats input
  - Created: `/opt/so/saltstack/local/salt/logstash/pipelines/config/custom/0012_input_elastic_agent_noauth.conf`
  - Key changes: `ssl_verify_mode => "none" # ssl_certificate_authorities removed`
- Updated pipeline list (replaced `so/0012_input_elastic_agent.conf.jinja`)
  - SOC → Administration → Configuration → logstash → defined_pipelines → manager:
    - `so/0011_input_endgame.conf`
    - `custom/0012_input_elastic_agent_noauth.conf`
    - `so/0013_input_lumberjack_fleet.conf`
    - `so/9999_output_redis.conf.jinja`
  - Clicked Synchronize Grid (Salt redeployed & restarted Logstash).
- Tried to regenerate certificates
  - `sudo rm -f /etc/pki/*ssl*.{crt,key}`
  - `sudo salt-call state.apply ssl,nginx,logstash`
- Downloaded fresh Elastic-Agent installer from SOC → Downloads.
  - Reinstalled agent on winwork01
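As a way to check the certificate side independently of the agent, here is a rough Python sketch that opens a TLS connection to the Beats port and reports whether the server certificate verifies against a given CA file. The helper names `make_context` and `probe`, and the CA path passed on the command line, are assumptions for illustration. It only exercises the server-certificate half of the handshake; if the input also demands a client certificate, a successful result here does not prove the agent would be accepted.

```python
import socket
import ssl
import sys


def make_context(ca_file=None):
    """Verify against ca_file when given; otherwise skip verification (lab use only)."""
    if ca_file:
        return ssl.create_default_context(cafile=ca_file)
    ctx = ssl.create_default_context()
    ctx.check_hostname = False  # must be disabled before setting CERT_NONE
    ctx.verify_mode = ssl.CERT_NONE
    return ctx


def probe(host, port, ca_file=None, timeout=5.0):
    """Attempt a TLS handshake and return a small result dict."""
    ctx = make_context(ca_file)
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            with ctx.wrap_socket(sock, server_hostname=host) as tls:
                return {"ok": True, "protocol": tls.version(), "detail": ""}
    except OSError as exc:  # ssl.SSLError is a subclass of OSError
        return {"ok": False, "protocol": None, "detail": str(exc)}


if __name__ == "__main__":
    # Usage (CA path is hypothetical): python probe.py 10.10.0.13 5055 /path/to/ca.crt
    host, port = sys.argv[1], int(sys.argv[2])
    ca = sys.argv[3] if len(sys.argv) > 3 else None
    print(probe(host, port, ca))
```

Running it once without a CA file and once with the CA that the agent installer is expected to trust would show whether the handshake fails only when verification is enabled, for example because the certificate's names do not cover 10.10.0.13 or because the CA changed after regeneration.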

Note that this is a lab environment and I'm not concerned about the security implications of disabling SSL verification if I have to. I just need to get the events forwarded somehow and I'm at my wits' end.

Any ideas on what I'm doing wrong here?