Hi all,

I'm trying to load CSV data into Elasticsearch (ES) with Logstash. Logstash seems to read the files and send them to ES, but the doc_count in Kibana and in ES shows 0, and I cannot create an index pattern.

I'm using ES 7.6.1 and Kibana 7.6.1.

This is my conf file:

```
input {
  file {
    path => "/home/chanchowing/log-users_route_2/*.csv"
    start_position => "beginning"
  }
}

filter {
  csv {
    separator => ","
    columns => ["_id", "user", "lat", "lng", "alt", "createdAt", "updatedAt"]
  }

  mutate {
    convert => {
      "lat" => "float"
      "lng" => "float"
      "alt" => "float"
    }
  }

  date {
    match => ["createdAt", "yyyy-MM-dd HH:mm:ss,SSS Z", "ISO8601"]
  }

  date {
    match => ["updatedAt", "yyyy-MM-dd HH:mm:ss,SSS Z", "ISO8601"]
  }
}

output {
  elasticsearch {
    hosts => ["localhost:9200"]
    index => "5g_1"
  }

  stdout { codec => rubydebug }
}
```
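One thing worth checking in the config above: in Elasticsearch 7.x, `_id` is a metadata field, so an event that carries a top-level field named `_id` (created here by the csv `columns` list) is rejected at index time instead of being stored. That would leave doc_count at 0 even though the rubydebug output shows the events. Assuming that is the cause, here is a sketch of the relevant parts with the column renamed (`doc_id` is a hypothetical name, not from the original) and passed to the output as the document ID. The `mutate` and `date` filters would stay as they are.

```
filter {
  csv {
    separator => ","
    # "doc_id" is a hypothetical replacement for "_id", which Elasticsearch 7.x
    # reserves as a metadata field and rejects inside the document body
    columns => ["doc_id", "user", "lat", "lng", "alt", "createdAt", "updatedAt"]
  }
}

output {
  elasticsearch {
    hosts => ["localhost:9200"]
    index => "5g_1"
    # use the CSV value as the Elasticsearch document ID instead of a source field
    document_id => "%{doc_id}"
  }
  stdout { codec => rubydebug }
}
```

If the Logstash log still reports events being rejected by Elasticsearch after this change, the error text in that log should point to the remaining problem.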

Logstash can read the file and seems to load it into ES, but the doc_count is 0 in Kibana:

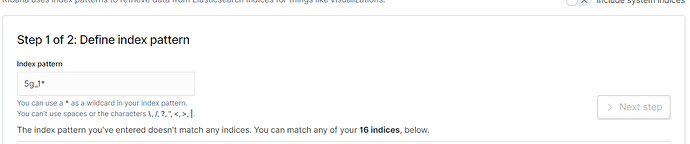

I also cannot find the index in the index pattern list, so I cannot create an index pattern.

The doc count is also 0 in ES.

I also have to restart my VM whenever I want to load another file, even after I change the path.name. Otherwise I get this error message:

```
[LogStash: Runner] runner - Logstash could not be started because there is already another instance using the configured data directory. If you wish to run multiple instances, you must change the "path.data" setting.

[LogStash: Runner] Logstash - java.lang.IllegalStateException: Logstash stopped processing because of an error : (SystemExit) exit
```
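On the restart question: with its default tail mode, the file input keeps watching the matched files, so Logstash does not exit on its own. The first instance therefore probably still holds the lock on `path.data` until it is stopped (for example with Ctrl+C), which a VM restart also does. Separately, the file input records how far it has read each file in a sincedb file (by default under `path.data`), so rerunning on files it has already read produces no new events. A sketch of the input for repeated test runs, assuming the files should be read from the start every time (the `sincedb_path` setting is an addition, not part of the original config):

```
input {
  file {
    path => "/home/chanchowing/log-users_route_2/*.csv"
    start_position => "beginning"
    # do not persist read positions between runs (Linux), so every run
    # re-reads the matched CSV files from the beginning
    sincedb_path => "/dev/null"
  }
}
```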

So my questions are:

- Is the doc count 0 because the data never got loaded into ES? If so, is something wrong in my conf file, and how can I fix it?
- Is the index name (5g_1) missing from the index patterns because 5g_1 has a doc count of 0?
- Why do I need to restart my VM to load another file via Logstash?

Thanks for your help.

Gladys