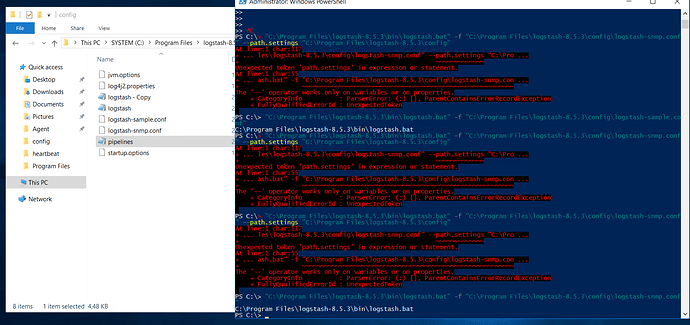

I changed the Logstash conf file again, switching the community value to "private". When I run the command, I get this error:

PS C:\Program Files\logstash-8.5.3\bin> .\logstash.bat -f "C:\Program Files\logstash-8.5.3\config\logstash-snmp.conf" --path.settings "C:\Program Files\logstash-8.5.3\config"

"Using bundled JDK: C:\Program Files\logstash-8.5.3\jdk\bin\java.exe"

Sending Logstash logs to C:/Program Files/logstash-8.5.3/logs which is now configured via log4j2.properties

[2022-12-23T07:11:58,421][INFO ][logstash.runner ] Log4j configuration path used is: C:\Program Files\logstash-8.5.3\config\log4j2.properties

[2022-12-23T07:11:58,442][INFO ][logstash.runner ] Starting Logstash {"logstash.version"=>"8.5.3", "jruby.version"=>"jruby 9.3.9.0 (2.6.8) 2022-10-24 537cd1f8bc OpenJDK 64-Bit Server VM 17.0.5+8 on 17.0.5+8 +indy +jit [x86_64-mswin32]"}

[2022-12-23T07:11:58,455][INFO ][logstash.runner ] JVM bootstrap flags: [-Xms1g, -Xmx1g, -Djava.awt.headless=true, -Dfile.encoding=UTF-8, -Djruby.compile.invokedynamic=true, -Djruby.jit.threshold=0, -XX:+HeapDumpOnOutOfMemoryError, -Djava.security.egd=file:/dev/urandom, -Dlog4j2.isThreadContextMapInheritable=true, -Djruby.regexp.interruptible=true, -Djdk.io.File.enableADS=true, --add-exports=jdk.compiler/com.sun.tools.javac.api=ALL-UNNAMED, --add-exports=jdk.compiler/com.sun.tools.javac.file=ALL-UNNAMED, --add-exports=jdk.compiler/com.sun.tools.javac.parser=ALL-UNNAMED, --add-exports=jdk.compiler/com.sun.tools.javac.tree=ALL-UNNAMED, --add-exports=jdk.compiler/com.sun.tools.javac.util=ALL-UNNAMED, --add-opens=java.base/java.security=ALL-UNNAMED, --add-opens=java.base/java.io=ALL-UNNAMED, --add-opens=java.base/java.nio.channels=ALL-UNNAMED, --add-opens=java.base/sun.nio.ch=ALL-UNNAMED, --add-opens=java.management/sun.management=ALL-UNNAMED]

[2022-12-23T07:11:58,640][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified

[2022-12-23T07:12:02,349][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600, :ssl_enabled=>false}

[2022-12-23T07:12:06,077][INFO ][org.reflections.Reflections] Reflections took 336 ms to scan 1 urls, producing 125 keys and 438 values

[2022-12-23T07:12:08,372][INFO ][logstash.javapipeline ] Pipeline main is configured with pipeline.ecs_compatibility: v8 setting. All plugins in this pipeline will default to ecs_compatibility => v8 unless explicitly configured otherwise.

[2022-12-23T07:12:08,466][INFO ][logstash.outputs.elasticsearch][main] New Elasticsearch output {:class=>"LogStash::Outputs::Elasticsearch", :hosts=>["//127.0.0.1"]}

[2022-12-23T07:12:09,158][INFO ][logstash.outputs.elasticsearch][main] Elasticsearch pool URLs updated {:changes=>{:removed=>[], :added=>[https://elastic:xxxxxx@2d3c22daa3ed4e73bc78fc9ee717d3b6.us-central1.gcp.cloud.es.io:443/]}}

[2022-12-23T07:12:10,555][WARN ][logstash.outputs.elasticsearch][main] Restored connection to ES instance {:url=>"https://elastic:xxxxxx@2d3c22daa3ed4e73bc78fc9ee717d3b6.us-central1.gcp.cloud.es.io:443/"}

[2022-12-23T07:12:10,689][INFO ][logstash.outputs.elasticsearch][main] Elasticsearch version determined (8.5.2) {:es_version=>8}

[2022-12-23T07:12:10,721][WARN ][logstash.outputs.elasticsearch][main] Detected a 6.x and above cluster: the type event field won't be used to determine the document _type {:es_version=>8}

[2022-12-23T07:12:10,966][INFO ][logstash.outputs.elasticsearch][main] Config is not compliant with data streams. data_stream => auto resolved to false

[2022-12-23T07:12:11,002][INFO ][logstash.outputs.elasticsearch][main] Config is not compliant with data streams. data_stream => auto resolved to false

[2022-12-23T07:12:11,011][WARN ][logstash.outputs.elasticsearch][main] Elasticsearch Output configured with ecs_compatibility => v8, which resolved to an UNRELEASED preview of version 8.0.0 of the Elastic Common Schema. Once ECS v8 and an updated release of this plugin are publicly available, you will need to update this plugin to resolve this warning.

[2022-12-23T07:12:11,132][INFO ][logstash.outputs.elasticsearch][main] Using a default mapping template {:es_version=>8, :ecs_compatibility=>:v8}

[2022-12-23T07:12:11,527][INFO ][logstash.javapipeline ][main] Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>2, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50, "pipeline.max_inflight"=>250, "pipeline.sources"=>["C:/Program Files/logstash-8.5.3/config/logstash-snmp.conf"], :thread=>"#<Thread:0x5ccdf5e7 run>"}

[2022-12-23T07:12:12,915][INFO ][logstash.javapipeline ][main] Pipeline Java execution initialization time {"seconds"=>1.38}

[2022-12-23T07:12:13,018][INFO ][logstash.inputs.snmp ][main] using plugin provided MIB path C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/mibs/logstash

[2022-12-23T07:12:13,065][INFO ][logstash.inputs.snmp ][main] using plugin provided MIB path C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/mibs/ietf

(eval):1426: warning: key "bfdSessDiag" is duplicated and overwritten on line 1423

(eval):1462: warning: key "bfdSessDiag" is duplicated and overwritten on line 1459

(eval):1658: warning: key "ipNetToMediaPhysAddress" is duplicated and overwritten on line 1663

(eval):2013: warning: key "mplsXCOperStatus" is duplicated and overwritten on line 2010

(eval):2049: warning: key "mplsXCOperStatus" is duplicated and overwritten on line 2046

(eval):1136: warning: key "ospfTrapControlGroup" is duplicated and overwritten on line 1133

(eval):2175: warning: key "pwOperStatus" is duplicated and overwritten on line 2172

(eval):2219: warning: key "pwOperStatus" is duplicated and overwritten on line 2216

(eval):1835: warning: key "rdbmsGroup" is duplicated and overwritten on line 1840

(eval):732: warning: key "rip2GlobalGroup" is duplicated and overwritten on line 729

(eval):732: warning: key "rip2IfStatGroup" is duplicated and overwritten on line 733

(eval):732: warning: key "rip2IfConfGroup" is duplicated and overwritten on line 737

(eval):732: warning: key "rip2PeerGroup" is duplicated and overwritten on line 741

(eval):3011: warning: key "slapmPolicyMonitorStatus" is duplicated and overwritten on line 3016

(eval):3063: warning: key "slapmPolicyMonitorStatus" is duplicated and overwritten on line 3068

(eval):3279: warning: key "slapmSubcomponentMonitorStatus" is duplicated and overwritten on line 3288

(eval):3337: warning: key "slapmSubcomponentMonitorStatus" is duplicated and overwritten on line 3346

(eval):3389: warning: key "slapmPRMonStatus" is duplicated and overwritten on line 3394

(eval):3441: warning: key "slapmPRMonStatus" is duplicated and overwritten on line 3446

(eval):3669: warning: key "slapmSubcomponentMonitorStatus" is duplicated and overwritten on line 3678

(eval):3727: warning: key "slapmSubcomponentMonitorStatus" is duplicated and overwritten on line 3736

[2022-12-23T07:12:18,211][INFO ][logstash.inputs.snmp ][main] ECS compatibility is enabled but target option was not specified. This may cause fields to be set at the top-level of the event where they are likely to clash with the Elastic Common Schema. It is recommended to set the target option to avoid potential schema conflicts (if your data is ECS compliant or non-conflicting, feel free to ignore this message)

[2022-12-23T07:12:18,227][INFO ][logstash.javapipeline ][main] Pipeline started {"pipeline.id"=>"main"}

[2022-12-23T07:12:18,373][INFO ][logstash.agent ] Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

[2022-12-23T07:12:21,330][ERROR][logstash.inputs.snmp ][main][0da1120beb9b72d1952f3f798864d3bb2f4d3505a9f4355ae2d543d8e0606dc9] error invoking get operation, ignoring {:host=>"10.1.133.250", :oids=>["1.3.6.1.2.1.1.1.0", "1.3.6.1.2.1.1.3.0", "1.3.6.1.2.1.1.5.0"], :exception=>#<LogStash::SnmpClientError: timeout sending snmp get request to target 10.1.133.250/161>, :backtrace=>["C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp/base_client.rb:39:in get'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:210:in block in poll_clients'", "org/jruby/RubyArray.java:1865:in each'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:202:in poll_clients'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:197:in block in run'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:380:in every'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:196:in run'", "C:/Program Files/logstash-8.5.3/logstash-core/lib/logstash/java_pipeline.rb:411:in inputworker'", "C:/Program Files/logstash-8.5.3/logstash-core/lib/logstash/java_pipeline.rb:402:in block in start_input'"]}

[2022-12-23T07:12:51,277][ERROR][logstash.inputs.snmp ][main][0da1120beb9b72d1952f3f798864d3bb2f4d3505a9f4355ae2d543d8e0606dc9] error invoking get operation, ignoring {:host=>"10.1.133.250", :oids=>["1.3.6.1.2.1.1.1.0", "1.3.6.1.2.1.1.3.0", "1.3.6.1.2.1.1.5.0"], :exception=>#<LogStash::SnmpClientError: timeout sending snmp get request to target 10.1.133.250/161>, :backtrace=>["C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp/base_client.rb:39:in get'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:210:in block in poll_clients'", "org/jruby/RubyArray.java:1865:in each'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:202:in poll_clients'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:197:in block in run'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:380:in every'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:196:in run'", "C:/Program Files/logstash-8.5.3/logstash-core/lib/logstash/java_pipeline.rb:411:in inputworker'", "C:/Program Files/logstash-8.5.3/logstash-core/lib/logstash/java_pipeline.rb:402:in block in start_input'"]}

[2022-12-23T07:13:21,279][ERROR][logstash.inputs.snmp ][main][0da1120beb9b72d1952f3f798864d3bb2f4d3505a9f4355ae2d543d8e0606dc9] error invoking get operation, ignoring {:host=>"10.1.133.250", :oids=>["1.3.6.1.2.1.1.1.0", "1.3.6.1.2.1.1.3.0", "1.3.6.1.2.1.1.5.0"], :exception=>#<LogStash::SnmpClientError: timeout sending snmp get request to target 10.1.133.250/161>, :backtrace=>["C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp/base_client.rb:39:in get'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:210:in block in poll_clients'", "org/jruby/RubyArray.java:1865:in each'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:202:in poll_clients'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:197:in block in run'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:380:in every'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:196:in run'", "C:/Program Files/logstash-8.5.3/logstash-core/lib/logstash/java_pipeline.rb:411:in inputworker'", "C:/Program Files/logstash-8.5.3/logstash-core/lib/logstash/java_pipeline.rb:402:in block in start_input'"]}

[2022-12-23T07:13:51,277][ERROR][logstash.inputs.snmp ][main][0da1120beb9b72d1952f3f798864d3bb2f4d3505a9f4355ae2d543d8e0606dc9] error invoking get operation, ignoring {:host=>"10.1.133.250", :oids=>["1.3.6.1.2.1.1.1.0", "1.3.6.1.2.1.1.3.0", "1.3.6.1.2.1.1.5.0"], :exception=>#<LogStash::SnmpClientError: timeout sending snmp get request to target 10.1.133.250/161>, :backtrace=>["C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp/base_client.rb:39:in get'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:210:in block in poll_clients'", "org/jruby/RubyArray.java:1865:in each'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:202:in poll_clients'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:197:in block in run'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:380:in every'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:196:in run'", "C:/Program Files/logstash-8.5.3/logstash-core/lib/logstash/java_pipeline.rb:411:in inputworker'", "C:/Program Files/logstash-8.5.3/logstash-core/lib/logstash/java_pipeline.rb:402:in block in start_input'"]}

[2022-12-23T07:14:21,278][ERROR][logstash.inputs.snmp ][main][0da1120beb9b72d1952f3f798864d3bb2f4d3505a9f4355ae2d543d8e0606dc9] error invoking get operation, ignoring {:host=>"10.1.133.250", :oids=>["1.3.6.1.2.1.1.1.0", "1.3.6.1.2.1.1.3.0", "1.3.6.1.2.1.1.5.0"], :exception=>#<LogStash::SnmpClientError: timeout sending snmp get request to target 10.1.133.250/161>, :backtrace=>["C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp/base_client.rb:39:in get'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:210:in block in poll_clients'", "org/jruby/RubyArray.java:1865:in each'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:202:in poll_clients'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:197:in block in run'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:380:in every'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:196:in run'", "C:/Program Files/logstash-8.5.3/logstash-core/lib/logstash/java_pipeline.rb:411:in inputworker'", "C:/Program Files/logstash-8.5.3/logstash-core/lib/logstash/java_pipeline.rb:402:in block in start_input'"]}

[2022-12-23T07:14:51,279][ERROR][logstash.inputs.snmp ][main][0da1120beb9b72d1952f3f798864d3bb2f4d3505a9f4355ae2d543d8e0606dc9] error invoking get operation, ignoring {:host=>"10.1.133.250", :oids=>["1.3.6.1.2.1.1.1.0", "1.3.6.1.2.1.1.3.0", "1.3.6.1.2.1.1.5.0"], :exception=>#<LogStash::SnmpClientError: timeout sending snmp get request to target 10.1.133.250/161>, :backtrace=>["C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp/base_client.rb:39:in get'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:210:in block in poll_clients'", "org/jruby/RubyArray.java:1865:in each'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:202:in poll_clients'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:197:in block in run'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:380:in every'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:196:in run'", "C:/Program Files/logstash-8.5.3/logstash-core/lib/logstash/java_pipeline.rb:411:in inputworker'", "C:/Program Files/logstash-8.5.3/logstash-core/lib/logstash/java_pipeline.rb:402:in block in start_input'"]}

[2022-12-23T07:18:21,284][ERROR][logstash.inputs.snmp ][main][0da1120beb9b72d1952f3f798864d3bb2f4d3505a9f4355ae2d543d8e0606dc9] error invoking get operation, ignoring {:host=>"10.1.133.250", :oids=>["1.3.6.1.2.1.1.1.0", "1.3.6.1.2.1.1.3.0", "1.3.6.1.2.1.1.5.0"], :exception=>#<LogStash::SnmpClientError: timeout sending snmp get request to target 10.1.133.250/161>, :backtrace=>["C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp/base_client.rb:39:in get'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:210:in block in poll_clients'", "org/jruby/RubyArray.java:1865:in each'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:202:in poll_clients'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:197:in block in run'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:380:in every'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:196:in run'", "C:/Program Files/logstash-8.5.3/logstash-core/lib/logstash/java_pipeline.rb:411:in inputworker'", "C:/Program Files/logstash-8.5.3/logstash-core/lib/logstash/java_pipeline.rb:402:in block in start_input'"]}

[2022-12-23T07:18:51,284][ERROR][logstash.inputs.snmp ][main][0da1120beb9b72d1952f3f798864d3bb2f4d3505a9f4355ae2d543d8e0606dc9] error invoking get operation, ignoring {:host=>"10.1.133.250", :oids=>["1.3.6.1.2.1.1.1.0", "1.3.6.1.2.1.1.3.0", "1.3.6.1.2.1.1.5.0"], :exception=>#<LogStash::SnmpClientError: timeout sending snmp get request to target 10.1.133.250/161>, :backtrace=>["C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp/base_client.rb:39:in get'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:210:in block in poll_clients'", "org/jruby/RubyArray.java:1865:in each'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:202:in poll_clients'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:197:in block in run'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:380:in every'", "C:/Program Files/logstash-8.5.3/vendor/bundle/jruby/2.6.0/gems/logstash-input-snmp-1.3.1/lib/logstash/inputs/snmp.rb:196:in run'", "C:/Program Files/logstash-8.5.3/logstash-core/lib/logstash/java_pipeline.rb:411:in inputworker'", "C:/Program Files/logstash-8.5.3/logstash-core/lib/logstash/java_pipeline.rb:402:in block in start_input'"]}

I can see the error: a timeout sending the SNMP get request to the device (10.1.133.250, port 161).

I checked internally and confirmed that the connection between the device and the Elastic cluster server is established.
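For anyone who wants to test SNMP reachability independently of Logstash, here is a minimal sketch of a raw UDP probe. It assumes Python 3 is available on the Logstash host (that is not part of my setup above), that the device speaks SNMP v2c, and that the community is "private". The function names are only illustrative. It hand-encodes a single GET for 1.3.6.1.2.1.1.1.0 (one of the OIDs in the error) and waits for any reply on UDP port 161.

```python
import socket


def tlv(tag: int, payload: bytes) -> bytes:
    """Encode one BER tag-length-value using the short length form only."""
    if len(payload) >= 128:
        raise ValueError("payload too long for this sketch")
    return bytes([tag, len(payload)]) + payload


def encode_oid(oid: str) -> bytes:
    """Encode a dotted OID whose sub-identifiers are all below 128."""
    parts = [int(p) for p in oid.split(".")]
    body = bytes([40 * parts[0] + parts[1]])  # first two arcs share one byte
    for n in parts[2:]:
        if n >= 128:
            raise ValueError("multi-byte sub-identifiers are not handled here")
        body += bytes([n])
    return tlv(0x06, body)


def build_get_request(community: str, oid: str, request_id: int = 1) -> bytes:
    """Build an SNMP v2c GetRequest message for a single OID."""
    if not 0 <= request_id < 128:
        raise ValueError("request_id must fit in one positive byte")
    varbind = tlv(0x30, encode_oid(oid) + tlv(0x05, b""))  # OID + NULL value
    varbind_list = tlv(0x30, varbind)
    pdu = tlv(
        0xA0,  # GetRequest-PDU
        tlv(0x02, bytes([request_id]))  # request-id
        + tlv(0x02, b"\x00")            # error-status
        + tlv(0x02, b"\x00")            # error-index
        + varbind_list,
    )
    version = tlv(0x02, b"\x01")  # 1 means SNMP v2c
    return tlv(0x30, version + tlv(0x04, community.encode("ascii")) + pdu)


def probe(host: str, community: str, oid: str = "1.3.6.1.2.1.1.1.0",
          port: int = 161, timeout: float = 5.0) -> bool:
    """Return True if any UDP reply arrives from host:port before the timeout."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(build_get_request(community, oid), (host, port))
        try:
            data, _ = sock.recvfrom(4096)
        except OSError:  # covers timeouts and ICMP "port unreachable" resets
            return False
        return len(data) > 0
```

Calling `probe("10.1.133.250", "private")` from the Logstash host returns False when nothing comes back within the timeout. In that case the likely suspects would be a firewall or ACL on UDP 161, the community string, or the SNMP version, rather than Logstash itself.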

Any advice? Thank you.